OpenAI has officially introduced Prism, a dedicated scientific workspace that signals a strategic pivot from general-purpose large language models (LLMs) toward domain-specific research infrastructure. Launched this week, Prism is available immediately and free of charge to anyone with a ChatGPT account. OpenAI positions it as an AI-enhanced word processor and research tool built specifically for the demands of scientific writing and discovery. Prism is built on GPT-5.2 and uses the model’s reasoning capabilities to assess experimental claims, refine technical prose for clarity and precision, and quickly search for relevant prior research.

While Prism’s ambitions are broad, OpenAI executives have been clear about its role: it is meant to accelerate human researchers, not to conduct research autonomously. The platform aims to automate the repetitive, high-friction tasks that consume much of a scientist’s time, freeing them to concentrate on experimental design and theoretical analysis. This philosophy mirrors what has happened in software development, where AI coding assistants have markedly increased developer velocity. Kevin Weil, Vice President of OpenAI for Science, drew this parallel in a recent briefing with a bold forecast: "I think 2026 will be for AI and science what 2025 was for AI and software engineering." In other words, Weil expects science to see the kind of productivity jump that software engineering saw over the preceding year.

The Contextual Engine: Bridging the Gap Between LLMs and Scientific Rigor

Prism is a direct response to large but unstructured demand for AI assistance in the hard sciences. OpenAI reports that ChatGPT, its consumer-facing chatbot, receives an average of 8.4 million messages each week on advanced scientific topics. While this figure points to a strong latent need for AI tools in research, a general-purpose LLM is of limited use for professional scientific work because it lacks deep awareness of the project at hand.

Prism addresses this limitation through what is arguably its most important feature: persistent, project-level context. When a user opens the integrated ChatGPT window within Prism, the underlying GPT-5.2 model has access to the contents of the user’s active research project, including methodologies, preliminary results, cited literature, and emerging hypotheses.

With a general-purpose LLM, a researcher must copy and paste excerpts, restate parameters, and repeatedly remind the model of the project’s scope, a process that is slow and error-prone. Prism removes this friction, so the AI’s responses can be better informed and far more relevant to the specific research problem. As Weil noted, this kind of workflow integration is precisely what made AI so transformative in software engineering, where integrated development environments (IDEs) with AI copilot features reshaped coding productivity. Prism aims to be the equivalent of an IDE for the scientific researcher.
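OpenAI has not published how Prism assembles this context, so the following is only a minimal Python sketch of the general idea, not Prism’s implementation. It assumes a hypothetical `ResearchProject` class that stores a project’s sections and prepends them to every question, so the researcher no longer has to paste material in by hand. The class, the `build_context` method, and its character budget are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchProject:
    """Hypothetical container for the material a project-aware assistant can see."""

    title: str
    # Section name -> text, e.g. "methods", "results", "hypotheses".
    sections: dict[str, str] = field(default_factory=dict)

    def build_context(self, question: str, max_chars: int = 4000) -> str:
        """Prepend the whole project to a question, cut to a character budget."""
        parts = [f"# Project: {self.title}"]
        for name, text in self.sections.items():
            parts.append(f"## {name}\n{text}")
        context = "\n\n".join(parts)
        # Naive budget: keep only the first max_chars characters of the project.
        context = context[:max_chars]
        return f"{context}\n\n# Question\n{question}"


if __name__ == "__main__":
    project = ResearchProject(
        "Thermal drift study",
        {"methods": "We logged sensor output hourly.", "results": "Drift rose 3% over 30 days."},
    )
    # The researcher types only the question; the project context travels with it.
    print(project.build_context("Does the drift exceed our 5% tolerance?"))
```

The fixed-length truncation is a deliberate simplification. For a large project, a real system would more plausibly select only the passages relevant to each question rather than cutting the context off at a fixed length.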

Technical Deep Dive: Addressing Academic Pain Points

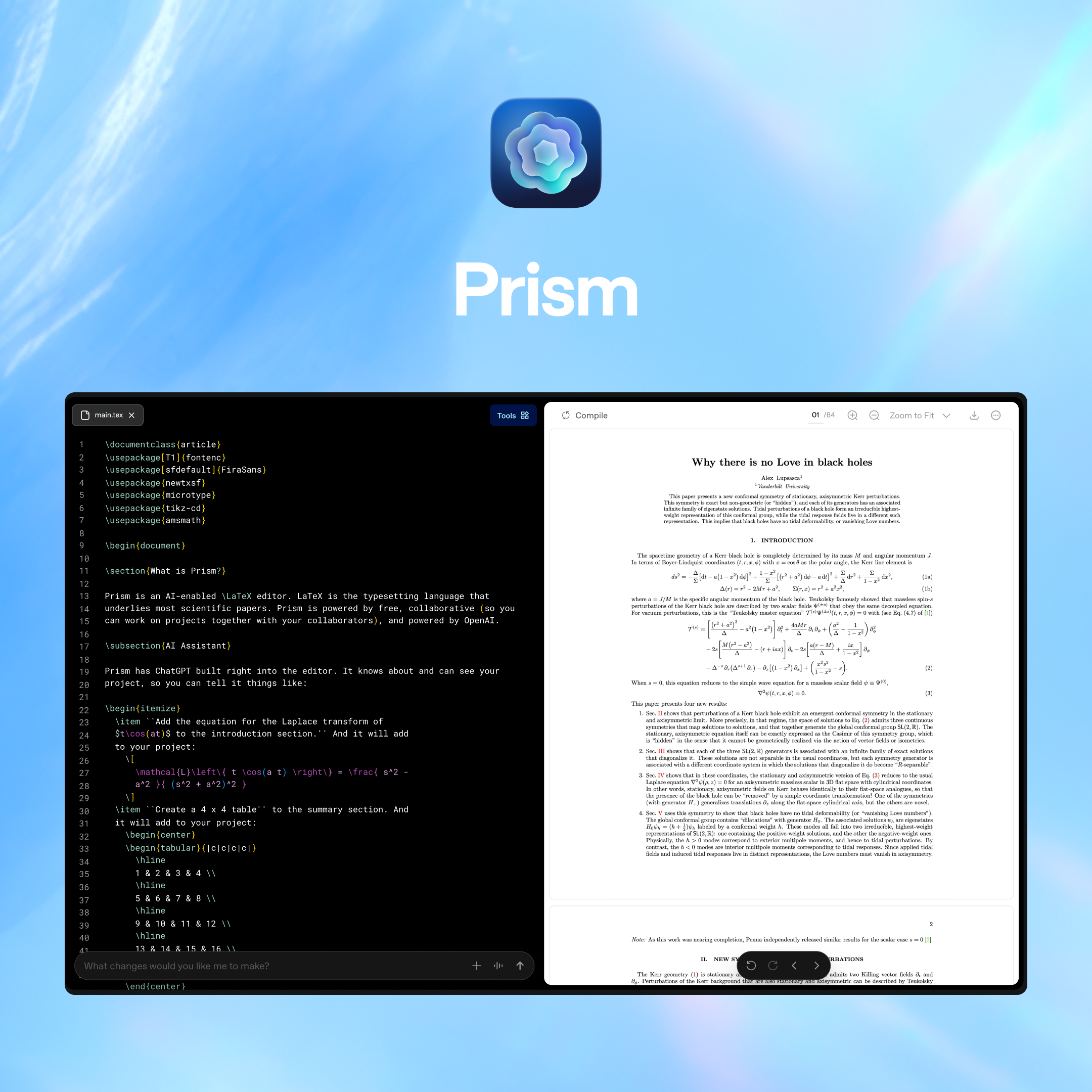

The value of Prism extends beyond enhanced reasoning; it also includes product features aimed at specific infrastructural pain points in academic publishing. Foremost among these is deep integration with LaTeX, the open-source document preparation system that remains the standard for formatting and typesetting complex scientific papers, especially in mathematics, physics, and computer science, where papers are dense with notation.

LaTeX is indispensable for its precision, but its learning curve and the complexity of managing large documents in existing LaTeX software are a significant drag on productivity. Prism aims to do more than provide a compatible editor: researchers can use GPT-5.2 to handle the structural and formatting complexity of LaTeX source, automate citation insertion and bibliography generation in the style a given journal requires, and help ensure compliance with typesetting standards. These tasks are notoriously time-consuming and error-prone for human authors.
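Citation management is one place where this kind of automation can be checked mechanically. The Python sketch below is not a Prism feature; it is a hypothetical, standalone check, assuming plain `.tex` and `.bib` text as input, that flags `\cite`-family keys with no matching BibTeX entry (the problem LaTeX and BibTeX report as undefined-citation warnings). The function names and regular expressions are illustrative assumptions; they cover common forms such as `\citep[p.~3]{a,b}`, not every LaTeX edge case.

```python
import re

# \cite, \citep, \citet, \parencite, \textcite, \nocite, ... with up to two
# optional [...] arguments; captures the comma-separated key list in {...}.
CITE_RE = re.compile(r"\\[A-Za-z]*cite[A-Za-z]*\*?\s*(?:\[[^\]]*\]\s*){0,2}\{([^}]*)\}")
# Entry headers such as "@article{key,".
BIB_KEY_RE = re.compile(r"@\w+\s*\{\s*([^,\s]+)\s*,")
# A LaTeX comment runs from an unescaped % to the end of the line.
COMMENT_RE = re.compile(r"(?<!\\)%.*")


def cited_keys(tex: str) -> set[str]:
    """Return every citation key used in the LaTeX source, ignoring comments."""
    tex = COMMENT_RE.sub("", tex)
    keys: set[str] = set()
    for group in CITE_RE.findall(tex):
        for key in group.split(","):
            key = key.strip()
            if key and key != "*":  # \nocite{*} means "all entries", not a key
                keys.add(key)
    return keys


def bib_keys(bib: str) -> set[str]:
    """Return the keys of all entries defined in the BibTeX source."""
    return set(BIB_KEY_RE.findall(bib))


def unresolved_citations(tex: str, bib: str) -> list[str]:
    """Keys cited in the manuscript that have no entry in the bibliography."""
    return sorted(cited_keys(tex) - bib_keys(bib))


if __name__ == "__main__":
    manuscript = r"As shown by \citet{alpha2020} and \citep[p.~3]{beta2021, gamma2022}."
    bibliography = "@article{alpha2020, title={A}}\n@book{beta2021, title={B}}"
    print(unresolved_citations(manuscript, bibliography))  # ['gamma2022']
```

Such a check has a clear limit: it catches keys that do not resolve, but a fabricated reference that arrives with its own invented `.bib` entry would pass, so human verification of the sources themselves is still needed.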

Prism also addresses another bottleneck in scientific communication: creating and iterating on complex visual aids. The platform draws on GPT-5.2’s increasingly capable visual features to let researchers turn rough online whiteboard sketches, or even natural-language descriptions, into professional-grade diagrams and schematics. Producing publication-ready figures, which often requires graphic-design skills or cumbersome external software, becomes something a researcher can do within the research environment itself, shortening the path from data analysis to a finished manuscript.

Background Context: The Ascent of AI in Formal Verification

Prism arrives against a backdrop of growing, and sometimes controversial, success for AI models in generating and verifying new scientific results. Advanced LLMs and specialized AI systems are rapidly moving beyond simple literature review toward producing rigorous, formally verifiable proofs.

In pure mathematics, for instance, AI models have recently been credited with resolving several long-standing problems posed by the influential mathematician Paul Erdős. These results came not from brute-force calculation alone but from a combination of rapid, wide-ranging literature review and the application of existing mathematical techniques in novel, non-obvious combinations. The philosophical significance of these AI-generated proofs continues to fuel debate within the mathematics community, centered on whether human-comprehensible intuition is still required, but the results count as early wins for proponents of AI augmentation and formal verification systems.

A key precedent OpenAI cites in promoting Prism is a December paper in statistics that used GPT-5.2 Pro to establish new proofs for a central axiom of statistical theory. The human researchers in this collaboration acted primarily as prompt engineers and verifiers, guiding the model’s exploration and confirming the validity of its output rather than doing the exhaustive theoretical legwork themselves. OpenAI highlighted the result in a dedicated blog post, presenting it as a blueprint for future human-AI research collaboration.

The post emphasized that “In domains with axiomatic theoretical foundations, frontier models can help explore proofs, test hypotheses, and identify connections that might otherwise take substantial human effort to uncover.” This principle, that AI is well suited to exploring dense, theoretically structured spaces, is the underlying rationale for Prism. It implies that the platform is meant not only to help researchers write better but also to help them think more expansively by quickly probing the theoretical limits of their hypotheses.

Industry Implications and Market Strategy

Prism represents a major strategic move by OpenAI to solidify its foothold in the lucrative and mission-critical domain of academic and industrial research. By offering the tool free to ChatGPT users, OpenAI is executing a classic “land and expand” strategy, aiming to quickly embed its ecosystem in the daily work of millions of researchers worldwide.

The industry implications are significant. First, Prism raises the competitive stakes against rival AI powerhouses, notably Google DeepMind and Meta AI, both of which have invested heavily in scientific discovery tools (e.g., DeepMind’s protein-structure prediction with AlphaFold, or Meta’s focus on mathematical verification). While competitors have concentrated on highly specialized models for specific scientific tasks, OpenAI is staking a claim to the overarching research workflow and the publishing pipeline.

Second, Prism has implications for the scientific publishing industry. If a tool can drastically accelerate the drafting, revision, and formatting of manuscripts while also vetting claims against the global literature, journals will face pressure to adapt their submission processes and potentially speed up the peer review cycle. A larger volume of AI-assisted submissions, possibly of higher quality, could also require new standards for disclosing how AI was used and how its output was verified.

Prism is also a way to drive high-value enterprise adoption of GPT-5.2. Scientific researchers, particularly those in corporate labs or government institutions, are an elite user group whose data inputs and feedback are valuable for refining future versions of the model, especially in complex, data-scarce domains. By building the scientific workflow around its latest GPT model, OpenAI secures deep, continuous engagement from a key demographic.

Expert Analysis: The Acceleration Thesis and Ethical Considerations

Kevin Weil’s assertion that 2026 will mark an inflection point for AI in science carries significant weight, but it also invites scrutiny of the challenges inherent in accelerating discovery.

One primary concern is the potential for "AI dependency." If researchers rely too heavily on Prism for literature reviews or claim assessment, they risk weakening their own critical-thinking skills or missing subtle, novel errors that the model overlooks. Because the platform assumes human verification, the scientific community must work out the right balance between AI-driven discovery and independent human oversight.

A second, more technical challenge is the persistent risk of LLM hallucination in a context that demands strict factual accuracy. Even though GPT-5.2 is designed to be highly reliable, a fabricated citation or a flawed mathematical proof slipping past human verification could have severe consequences. Prism’s success hinges on providing traceable, verifiable sources for every claim assessment and literature retrieval, a capability that must be rigorously maintained to uphold scientific integrity.

Human-AI collaboration in formal verification is maturing rapidly. The statistical proofs produced with GPT-5.2 Pro illustrate one effective division of labor: the AI handles the combinatorial complexity of exploring the space of possible proofs, while humans supply the initial axiomatic framework and the final, authoritative sign-off. Prism essentially productizes this relationship, aiming to make the interface between theoretical exploration and human judgment seamless and efficient.

Future Impact and Trends

The launch of Prism is merely the starting gun in the race to automate and augment scientific discovery. Looking forward, several trends are likely to emerge, driven by this new class of integrated AI tools:

- Hyper-Accelerated Peer Review: Future versions of Prism, or similar competing tools, will likely integrate AI-powered peer review features, allowing researchers to subject their drafts to instant, rigorous checks for methodological soundness, statistical errors, and novelty against the global literature before official submission. This could drastically reduce the time taken for initial editorial screening.

- Personalized Research Environments (Prism 2.0): As LLMs become more specialized, future iterations will likely allow researchers to tailor their workspace to a sub-discipline (e.g., computational physics vs. molecular biology), integrating domain-specific knowledge bases and proprietary simulation software directly into the Prism interface.

- The Rise of Formal Scientific Language Models (FSLMs): The steady stream of high-quality data generated by Prism users, particularly formal proofs and structured data, could lead OpenAI and its rivals to train specialized LLMs exclusively on formally verified scientific texts. Such FSLMs could exhibit substantially lower hallucination rates and deeper domain expertise than general-purpose models, further accelerating work in axiomatic fields.

- Redefinition of Authorship: As AI contributions grow more significant (generating proofs, synthesizing diagrams, restructuring arguments), the academic community will need to formalize guidelines for AI authorship. How transparently Prism documents AI assistance could play a critical role in shaping these new ethical standards.

Prism is not just a new software tool; it is intended as foundational infrastructure for managing complexity and accelerating the pace of the modern research enterprise. By integrating the computational power of GPT-5.2 with the structure-specific requirements of scientific workflows, especially through LaTeX support and project-level context management, OpenAI has built a platform that promises to turn the research laboratory into a highly optimized, digitally augmented engine of discovery. How successfully Prism is adopted into global scientific practice will be the ultimate test of whether 2026 truly becomes the inflection year for AI in science.