The bedrock of enterprise identity management remains firmly rooted in Microsoft Active Directory (AD). Despite the migration toward cloud-native identity providers, a large share of organizational assets, permissions, and user accounts in most enterprises is still governed by this decades-old infrastructure. This ubiquity makes AD an enduring, high-value target for malicious actors. What has fundamentally shifted in the current threat landscape is not the objective (compromising credentials in order to move laterally). What has shifted is the speed, sophistication, and sheer accessibility of the tools used to achieve it, driven primarily by the proliferation of generative artificial intelligence (GenAI).

Generative AI is not merely an incremental improvement in cyber offense; it represents a disruptive force that democratizes high-end cracking capabilities. Historically, crafting highly effective password guessing attacks required deep knowledge of cryptographic weaknesses, proficiency in specialized cracking software, and access to substantial, often bespoke, computational resources. Today, GenAI abstracts these barriers, lowering the cost of entry for malicious activity while simultaneously escalating the effectiveness of attacks against even moderately secured credentials. This evolution signals a critical inflection point for cybersecurity posture management, demanding a wholesale re-evaluation of legacy identity controls.

The Paradigm Shift: From Brute Force to Behavioral Prediction

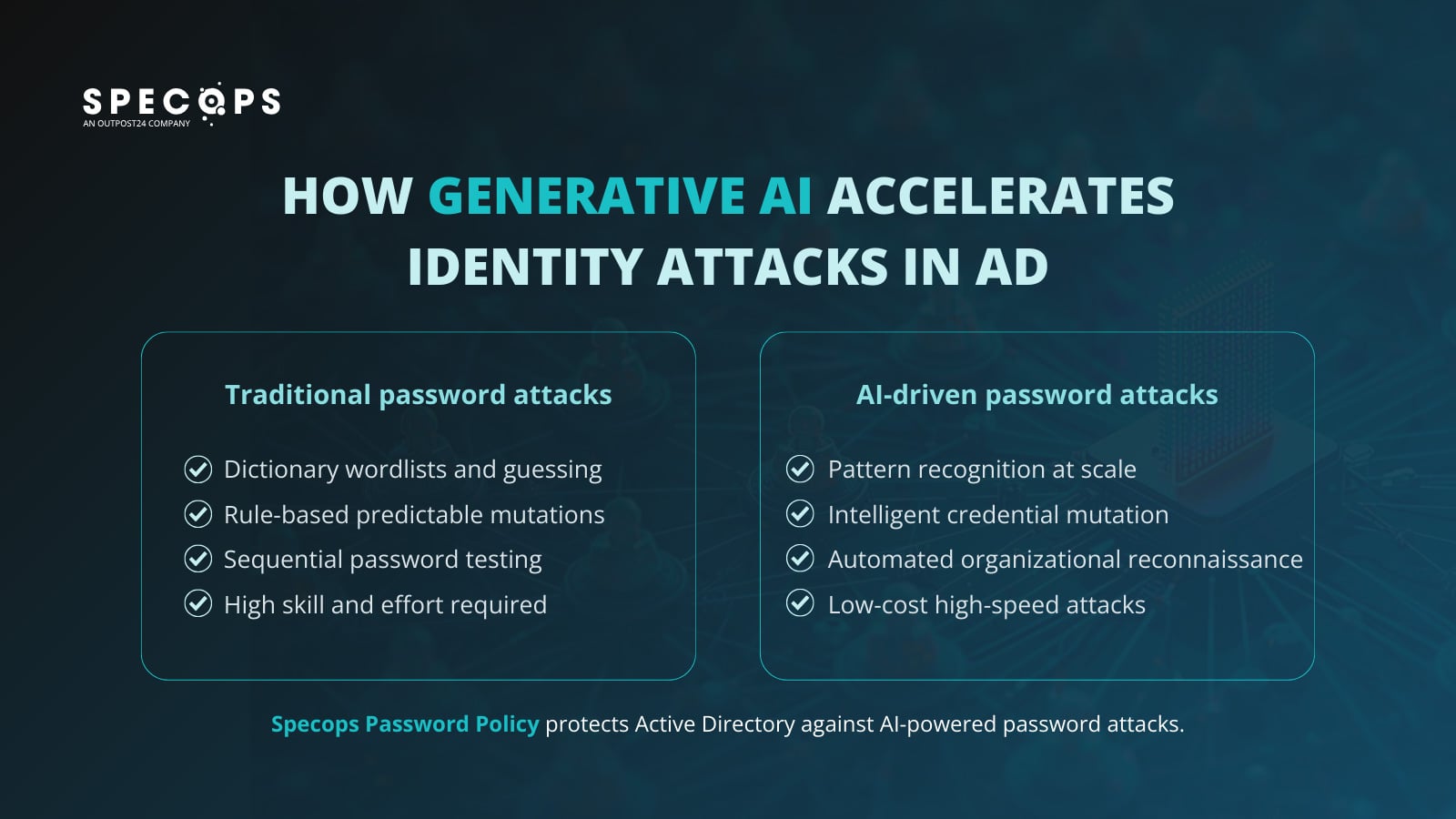

Traditional password attacks, even advanced dictionary or hybrid attacks, operated on discernible, deterministic rules. Attackers relied on known weak wordlists and applied predefined, rule-based mutations, such as the classic substitution of ‘$’ for ‘S’ or appending ‘123’ to a common word. This methodology was slow, resource-intensive, and often inefficient against modern hashing schemes, even if it succeeded against poorly configured systems.
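To make "deterministic rules" concrete, here is a minimal sketch of rule-based mutation in the style of classic cracking rules. The substitution table, the suffix list, and the function name `rule_mutations` are hypothetical choices for illustration. The key point is that every candidate follows mechanically from a fixed rule. Nothing is learned from data.

```python
# Hypothetical substitution table and suffix list, chosen for illustration only.
LEET = {"a": "@", "e": "3", "i": "1", "o": "0", "s": "$"}
SUFFIXES = ["", "1", "123", "!", "2024"]


def rule_mutations(word: str) -> list[str]:
    """Expand one dictionary word with fixed, deterministic rules."""
    leet = "".join(LEET.get(ch, ch) for ch in word.lower())
    # Rule 1: case variants; Rule 2: leetspeak substitution ('$' for 's', etc.)
    bases = {word.lower(), word.capitalize(), leet, leet.capitalize()}
    # Rule 3: append each common suffix to every base form
    return sorted(base + suffix for base in bases for suffix in SUFFIXES)


if __name__ == "__main__":
    for candidate in rule_mutations("password"):
        print(candidate)
```

The output grows only as (number of base forms) × (number of suffixes). Any password that falls outside the rules is never produced, which is the limitation that learned models address.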

Generative AI models, particularly those built with adversarial training, operate on a completely different principle: statistical prediction based on observed human behavior. PassGAN, for example, trains a generative adversarial network (GAN) on leaked passwords, and later tools build on the same idea. These models neither guess randomly nor follow fixed rules. They are trained on massive datasets of compromised passwords, drawn from public and potentially proprietary breach data, and they learn the underlying statistical "grammar" of how people construct passwords.
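A GAN is too large to show here. However, the core idea of learning password "grammar" from breach data and then ranking guesses by likelihood can be sketched with a much simpler, older statistical technique: a character-level bigram (Markov) model. This is a deliberate substitution and is not how PassGAN works. The toy corpus, the smoothing constant, and the function names are all hypothetical.

```python
import math
from collections import Counter, defaultdict

START, END = "\x02", "\x03"  # sentinel markers that cannot collide with password characters


def train_bigram(passwords):
    """Count character-to-character transitions in a training corpus."""
    counts = defaultdict(Counter)
    for pw in passwords:
        chars = [START, *pw, END]
        for prev, nxt in zip(chars, chars[1:]):
            counts[prev][nxt] += 1
    return counts


def log_prob(model, candidate, alphabet_size=97):
    """Log-probability of a candidate; alphabet_size is an assumed smoothing denominator."""
    chars = [START, *candidate, END]
    total = 0.0
    for prev, nxt in zip(chars, chars[1:]):
        row = model.get(prev, Counter())  # .get avoids inserting empty rows
        # Add-one (Laplace) smoothing: unseen transitions get a small nonzero probability.
        total += math.log((row[nxt] + 1) / (sum(row.values()) + alphabet_size))
    return total


def rank_candidates(model, candidates):
    """Order guesses from most to least 'human-like' according to the model."""
    return sorted(candidates, key=lambda c: log_prob(model, c), reverse=True)


if __name__ == "__main__":
    toy_corpus = ["password1", "password123", "pass1234", "passw0rd"]  # stand-in for breach data
    model = train_bigram(toy_corpus)
    print(rank_candidates(model, ["zqxj1", "pass1", "Xk9#v"]))
```

Even this crude model puts "pass1" ahead of random-looking strings. Modern generative models capture far richer structure, but the principle of ranking by learned likelihood is the same.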

The statistical output of these models is considerably more potent. Published tests of PassGAN-style generators have reported cracking a significant percentage of commonly used passwords in minutes rather than weeks. Such results depend heavily on password length, the hash algorithm, and the hardware used. The effect is magnified when the models are given context. An attacker might feed an AI tool data scraped from an organization’s public-facing social media profiles, leaked internal Slack messages, or earlier minor breaches. The resulting candidates then move beyond generic dictionary terms to personalized, contextually relevant guesses that follow the linguistic patterns of the target employee base. For predictable patterns, this precision narrows the search so sharply that a probabilistic attack becomes nearly deterministic.

The Convergence of AI Models and Hardware Availability

The acceleration of these identity attacks is compounded by an unintended byproduct of the broader AI revolution: the hyper-availability and cost-efficiency of high-performance computing hardware. The demand for training large language models and complex neural networks has driven down the rental cost for powerful Graphics Processing Unit (GPU) clusters.

Attackers are no longer constrained by owning expensive, specialized hardware. For a modest hourly fee, an adversary can rent cutting-edge GPU instances capable of computing cryptographic hashes at unprecedented speeds. This matters especially for Active Directory. AD does not store passwords as bcrypt hashes. It stores them as NT hashes: an unsalted MD4 digest of the UTF-16LE-encoded password. MD4 is fast and unsalted. As a result, modern GPUs, including the high-end consumer cards often favored for their computational density, can test NT hash candidates far faster than they can test deliberately slow algorithms such as bcrypt. They can also do so far faster than the hardware available just a few years ago.

This combination is lethal: highly intelligent, behaviorally informed password guesses (the AI output) are tested against vast computational power (the rented GPU cluster) at minimal operational cost to the attacker. The result is a dramatic compression of the time window available for an organization to detect and remediate credential compromise, especially for accounts utilizing weak or moderately complex passwords.
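The arithmetic behind the shrinking time window is straightforward: worst-case time equals keyspace divided by guess rate. The sketch below assumes a hypothetical aggregate rate of 10^11 guesses per second for a rented cluster. That figure is an illustrative assumption, not a measured benchmark. Under it, all 95^8 ≈ 6.6 × 10^15 eight-character printable-ASCII strings fall in roughly 18 hours. By contrast, 26^16 ≈ 4.4 × 10^22 sixteen-letter lowercase strings would take on the order of 14,000 years.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Hypothetical aggregate rate for a rented GPU cluster; not a measured benchmark.
ASSUMED_GUESSES_PER_SECOND = 1e11


def seconds_to_exhaust(alphabet_size: int, length: int, rate: float) -> float:
    """Worst-case time to try every string of the given length over the given alphabet."""
    return alphabet_size ** length / rate


if __name__ == "__main__":
    eight_char = seconds_to_exhaust(95, 8, ASSUMED_GUESSES_PER_SECOND)     # full printable ASCII
    sixteen_char = seconds_to_exhaust(26, 16, ASSUMED_GUESSES_PER_SECOND)  # lowercase letters only
    print(f"8 chars, printable set: {eight_char / SECONDS_PER_HOUR:.1f} hours")
    print(f"16 chars, lowercase:    {sixteen_char / SECONDS_PER_YEAR:,.0f} years")
```

These are exhaustive-search upper bounds. The danger described above is that AI-ranked guesses reach predictable passwords long before any exhaustive search would.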

Industry Implications: The Obsolescence of Legacy AD Controls

The foundational password policies implemented across most Active Directory environments were architected during an era when computational power was the primary bottleneck for attackers. These policies focused heavily on complexity—mandating the inclusion of uppercase, lowercase, numbers, and special characters.

In the context of GenAI, these complexity rules are counterproductive. On paper they enlarge the space of possible passwords. In practice they push users toward a small set of predictable constructions (a capitalized word, then a symbol, then digits) that AI models are explicitly trained to map. A password like "ProjectTitan!2024" perfectly satisfies a traditional complexity mandate, yet it is trivially recognizable to an AI that understands corporate nomenclature ("ProjectTitan") and temporal sequencing ("2024"). The model does not need to brute-force; it predicts the specific variant the user chose based on the enforced complexity pattern.
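A simplified model of an AD-style complexity check shows the mismatch. It requires characters from at least three of four classes, plus a minimum length. The real Windows rule also rejects passwords containing the account or display name and counts a fifth class of Unicode letters. Minimum length is a separate policy setting, so the value of 8 used here is an assumption.

```python
def meets_legacy_complexity(password: str, min_length: int = 8) -> bool:
    """Simplified AD-style complexity rule: minimum length plus 3 of 4 character classes."""
    classes = [
        any(c.isupper() for c in password),
        any(c.islower() for c in password),
        any(c.isdigit() for c in password),
        any(not c.isalnum() for c in password),  # symbols, punctuation, spaces
    ]
    return len(password) >= min_length and sum(classes) >= 3


if __name__ == "__main__":
    for pw in ["ProjectTitan!2024", "purple-lantern-elephant-wrench"]:
        print(pw, meets_legacy_complexity(pw))
```

The predictable corporate string passes. A long, all-lowercase random passphrase fails because it covers only two classes. The rule rewards character variety, not unpredictability.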

Furthermore, mandatory, time-based password rotation (e.g., every 90 days) has proven ineffective against AI-augmented attacks. When employees are forced to change passwords frequently, they rarely generate truly random strings. Instead, they make subtle, predictable variations on their previous passwords: incrementing a number, swapping a month name, or slightly altering a substitution. AI systems, particularly when cross-referenced with breach data, excel at identifying these "evolutionary" patterns across successive password generations. Rotation thus becomes little more than a data enrichment exercise for the attacker. NIST's digital identity guidelines (SP 800-63B) likewise advise against requiring periodic password changes unless there is evidence of compromise.
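One of these evolutionary patterns, incrementing the last run of digits, is easy to predict once an older password is known, for example from a previous breach. The sketch below covers only that single pattern. The function name and the `steps` parameter are hypothetical, and real tools model many more variations, such as month or season swaps.

```python
import re


def likely_successors(previous: str, steps: int = 2) -> list[str]:
    """Predict the next rotation(s) by incrementing the final run of digits."""
    match = re.search(r"(\d+)(\D*)$", previous)
    if match is None:
        return []
    head, digits, tail = previous[: match.start(1)], match.group(1), match.group(2)
    width = len(digits)  # preserve zero padding, e.g. "07" -> "08"
    return [f"{head}{int(digits) + k:0{width}d}{tail}" for k in range(1, steps + 1)]


if __name__ == "__main__":
    print(likely_successors("Summer2023!"))
    print(likely_successors("Winter07"))
```

A handful of guesses like these, tried first, often costs the attacker less than a single second of GPU time.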

Multi-factor authentication (MFA), while a crucial layer of defense, is not a panacea for password hygiene issues. It blunts the immediate value of a cracked or leaked password, but attackers can still get around it in several ways. Adversary-in-the-middle phishing and session-token theft capture a session that has already passed MFA. MFA fatigue (prompt bombing) attacks pressure users into approving a login. Many on-premises AD authentication paths, such as NTLM, Kerberos, and LDAP binds, never prompt for a second factor at all. If the initial foothold relies on a cracked password, the wider AD environment remains exposed once MFA is bypassed or never invoked.

Moving Beyond Compliance Checkboxes: The Rise of Contextual Defense

To effectively counter this new wave of AI-assisted identity attacks, organizations must pivot their defensive strategies from superficial compliance checks to deep contextual understanding of credential strength and exposure. The core defensive philosophy must shift toward metrics that AI struggles to model: length and genuine entropy.

Length as the New Complexity Barrier: Adversarial AI models are adept at pattern recognition within short, human-chosen strings, but they gain little against long strings with genuine randomness. Consider a passphrase of four randomly selected, unrelated words (e.g., "Purple-Lantern-Elephant-Wrench", 30 characters). Its entropy comes from the random selection rather than from any pattern a model could learn. This forces the attacker back toward computationally expensive exhaustive search and effectively neutralizes the AI advantage. That entropy grows with the number of words and the size of the list they are drawn from, and it holds only if the words are chosen by a random process rather than by the user. Policy enforcement should prioritize minimum length, perhaps setting the floor at 16 characters or more, and favor passphrases over short complex strings.
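The entropy of a randomly generated passphrase is simply (number of words) × log2(wordlist size). Four words from a 7,776-word list, the size of a five-dice list such as the EFF large wordlist, give about 51.7 bits. Five words give about 64.6 bits and six give about 77.5 bits. That extra margin is worth having against a fast, unsalted hash. The sketch below assumes words are drawn with Python's `secrets` module. The tiny demo wordlist is a placeholder, not a real list.

```python
import math
import secrets


def passphrase_entropy_bits(num_words: int, wordlist_size: int) -> float:
    """Entropy of a passphrase whose words are drawn uniformly at random."""
    return num_words * math.log2(wordlist_size)


def generate_passphrase(wordlist: list[str], num_words: int = 5, sep: str = "-") -> str:
    """Pick words with a cryptographically secure RNG rather than letting the user choose."""
    return sep.join(secrets.choice(wordlist) for _ in range(num_words))


if __name__ == "__main__":
    for n in (4, 5, 6):
        print(n, "words:", round(passphrase_entropy_bits(n, 7776), 1), "bits")
    demo_words = ["purple", "lantern", "elephant", "wrench", "harbor", "quartz"]  # placeholder list
    print(generate_passphrase(demo_words))
```

The formula assumes uniform random selection. A phrase a person invents, even one that looks similar, has far less entropy than it appears to.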

The Criticality of Breach Visibility: The most significant vulnerability in the AI era is not a password that can be guessed, but one that has already been compromised. If an employee’s credential appears in a publicly disclosed breach dataset, an attacker needs no cracking effort at all and can move straight to credential stuffing or direct use. This sidesteps hashing strength and complexity rules entirely. Paired with the MFA bypass techniques described earlier, it can lead directly to account takeover.

Defending against this requires continuous, proactive monitoring of external breach intelligence. Security teams should deploy solutions that compare the NT hashes stored in AD against continually updated databases of known compromised passwords, which now contain billions of entries harvested from global data leaks. (AD does not retain plaintext passwords unless reversible encryption is enabled.) Protection must be active. Known-bad passwords should be rejected at the moment they are set, for example through a password filter on the domain controllers, and accounts already using them should be flagged for reset. Frequent updates are essential, because new breach data surfaces continuously.
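Comparing AD passwords against breach data works at the hash level: compute the NT hash (MD4 over the UTF-16LE password) and look it up in a set of known-breached hashes. The sketch below includes a pure-Python MD4 because `hashlib.new("md4")` is frequently unavailable on systems using OpenSSL 3. The `breached` set is a hypothetical stand-in. In practice it might be loaded from a corpus such as Have I Been Pwned's Pwned Passwords, which is published in an NTLM-hash form (upper-case hex, so lowercase it on load).

```python
import struct


def _rotl(x: int, n: int) -> int:
    """32-bit left rotation."""
    x &= 0xFFFFFFFF
    return ((x << n) | (x >> (32 - n))) & 0xFFFFFFFF


def _f(x, y, z):
    return (x & y) | (~x & z)


def _g(x, y, z):
    return (x & y) | (x & z) | (y & z)


def _h(x, y, z):
    return x ^ y ^ z


# (round function, additive constant, message-word order, shift pattern) per RFC 1320
_ROUNDS = (
    (_f, 0x00000000, tuple(range(16)), (3, 7, 11, 19)),
    (_g, 0x5A827999, (0, 4, 8, 12, 1, 5, 9, 13, 2, 6, 10, 14, 3, 7, 11, 15), (3, 5, 9, 13)),
    (_h, 0x6ED9EBA1, (0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15), (3, 9, 11, 15)),
)


def md4(data: bytes) -> bytes:
    """Pure-Python MD4, since hashlib's md4 is often disabled under OpenSSL 3."""
    msg = bytearray(data) + b"\x80"
    while len(msg) % 64 != 56:
        msg.append(0)
    msg += struct.pack("<Q", (8 * len(data)) & 0xFFFFFFFFFFFFFFFF)  # bit length, little-endian

    state = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476]
    for offset in range(0, len(msg), 64):
        words = struct.unpack("<16I", bytes(msg[offset:offset + 64]))
        a, b, c, d = state
        for func, const, order, shifts in _ROUNDS:
            for i, k in enumerate(order):
                a = _rotl(a + func(b, c, d) + words[k] + const, shifts[i % 4])
                # Rotate register roles so the next step updates D, then C, then B.
                a, b, c, d = d, a, b, c
        state = [(s + v) & 0xFFFFFFFF for s, v in zip(state, (a, b, c, d))]
    return struct.pack("<4I", *state)


def nt_hash(password: str) -> str:
    """NT hash as AD stores it: unsalted MD4 over the UTF-16LE encoded password."""
    return md4(password.encode("utf-16-le")).hex()


def is_breached(password: str, breached_nt_hashes: set[str]) -> bool:
    """True if the password's NT hash is in a set of lowercase hex breach hashes."""
    return nt_hash(password) in breached_nt_hashes


if __name__ == "__main__":
    breached = {nt_hash("password"), nt_hash("Summer2024!")}  # hypothetical stand-in corpus
    print(nt_hash("password"))
    print(is_breached("Summer2024!", breached))
```

Because NT hashes are unsalted, a single precomputed breach set applies to every account in every domain. This makes hash-level comparison cheap for defenders, and it makes leaked hashes directly reusable for attackers.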

Furthermore, AI-driven reconnaissance calls for defenses that strip organizational context out of passwords. Attackers use AI to identify the internal jargon, product names, executive titles, and common acronyms of a specific company. Organizations can add custom, organization-specific "deny lists" to the password policy engine. These lists proactively block passwords that a context-aware guessing attack would be likely to produce, even when those passwords satisfy length or complexity rules.
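A deny list only helps if it also catches disguised variants, so candidates are normalized before matching. The sketch below lowercases the password, reverses common leetspeak substitutions, and then looks for organization terms as substrings. The substitution table, the sample terms, and the function name are hypothetical. Microsoft Entra Password Protection offers a custom banned-password list with its own normalization. This sketch illustrates the general idea and does not reproduce that product's algorithm.

```python
# Hypothetical reverse-substitution table for common leetspeak characters.
UNLEET = str.maketrans({"@": "a", "4": "a", "3": "e", "1": "i", "!": "i",
                        "0": "o", "$": "s", "5": "s", "7": "t"})


def deny_list_hits(password: str, org_terms: list[str]) -> list[str]:
    """Return organization-specific terms hidden in a password after normalization."""
    normalized = password.lower().translate(UNLEET)
    # Normalize the terms the same way so both sides are compared in one form.
    return [term for term in org_terms if term.lower().translate(UNLEET) in normalized]


if __name__ == "__main__":
    terms = ["ProjectTitan", "Contoso"]  # hypothetical organization-specific terms
    print(deny_list_hits("Pr0j3ctT1t@n!2024", terms))
    print(deny_list_hits("quartz-harbor-lantern", terms))
```

Substring matching is deliberately strict. A production policy would typically weigh how much of the password a banned term covers rather than rejecting on any match.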

Assessing Exposure in the AI-Augmented Landscape

Implementing advanced controls without understanding the existing risk profile is akin to treating symptoms without diagnosing the disease. Organizations need a clear, non-disruptive assessment of their current Active Directory exposure relative to modern threats. A comprehensive, read-only scan of the AD environment can map where policy gaps exist and identify passwords that already appear in breach databases. It can also flag accounts that share the same password and measure how closely current policies follow modern length and entropy guidance rather than outdated complexity mandates. This assessment provides the baseline for prioritizing remediation where AI-assisted identity attacks would find the most fertile ground.
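Several useful findings fall out of the NT hashes alone, without cracking anything: accounts whose hash is in a breach set, accounts with a blank password, and groups of accounts that share one password. The sketch below assumes the `{account: nt_hash}` mapping has already been exported by an authorized, read-only process, which is out of scope here. It also assumes the breach set holds lowercase hex hashes. The function name and report keys are hypothetical and do not describe any particular product's scanner.

```python
from collections import defaultdict

# NT hash of the empty password (MD4 of zero bytes): marks accounts with a blank password.
EMPTY_NT_HASH = "31d6cfe0d16ae931b73c59d7e0c089c0"


def audit_nt_hashes(accounts: dict[str, str], breached: set[str]) -> dict[str, list]:
    """Summarize exposure from an already-exported {account: nt_hash} mapping."""
    normalized = {user: h.lower() for user, h in accounts.items()}
    by_hash = defaultdict(list)
    for user, h in normalized.items():
        by_hash[h].append(user)
    return {
        "breached": sorted(u for u, h in normalized.items() if h in breached),
        "blank": sorted(u for u, h in normalized.items() if h == EMPTY_NT_HASH),
        # NT hashes are unsalted, so identical hashes mean identical passwords.
        "shared": sorted(sorted(users) for users in by_hash.values() if len(users) > 1),
    }


if __name__ == "__main__":
    sample = {  # hypothetical export; the first two values are the NT hash of "password"
        "alice": "8846F7EAEE8FB117AD06BDD830B7586C",
        "bob": "8846f7eaee8fb117ad06bdd830b7586c",
        "carol": EMPTY_NT_HASH,
    }
    print(audit_nt_hashes(sample, {"8846f7eaee8fb117ad06bdd830b7586c"}))
```

The "shared" group is often the most actionable output. One cracked or leaked password there compromises every account in the group at once.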

The advent of generative AI in the offensive sphere has fundamentally shifted the risk calculus for identity management. It has compressed the timeline for credential compromise, lowered the barrier to entry for sophisticated attacks, and rendered many established security hygiene practices obsolete. The arms race is no longer about who has the fastest cracking rig; it is about who can best anticipate and disrupt the behavioral predictions generated by machine learning models. The imperative for security leaders is clear. Defenses must evolve beyond mere compliance to embrace true entropy, continuous breach intelligence, and context-aware policy enforcement, and they must do so before the next batch of leaked credentials surfaces and is rapidly weaponized by AI-assisted tooling.