The global technology landscape is currently defined by a tectonic shift: the explosive demand generated by artificial intelligence infrastructure. Consumers building or upgrading personal computers have felt the immediate sting of dramatically inflated memory module prices, with certain kits costing two to three times what they did previously, but this economic turbulence is not confined to the desktop sector. The same upstream pressures squeezing the PC market are now fundamentally altering the Bill of Materials (BOM) and feature roadmap for the next generation of mobile computing devices. Smartphone users, who often feel insulated because their devices are sealed, must now confront the reality that memory market dynamics, driven by massive data center investments, will inevitably trickle down to their next handheld purchase.

The Underlying Economics: A DRAM Hierarchy Under Strain

To understand the impact on mobile, one must first grasp the current state of the Dynamic Random-Access Memory (DRAM) manufacturing sector. The industry is dominated by three key players—Samsung Electronics, SK Hynix, and Micron Technology—who collectively control over three-quarters of global production. Historically, these manufacturers navigated cyclical demand curves, expanding capacity during peaks and contracting during troughs. However, the current environment is dominated by a single, overriding priority: Artificial Intelligence (AI).

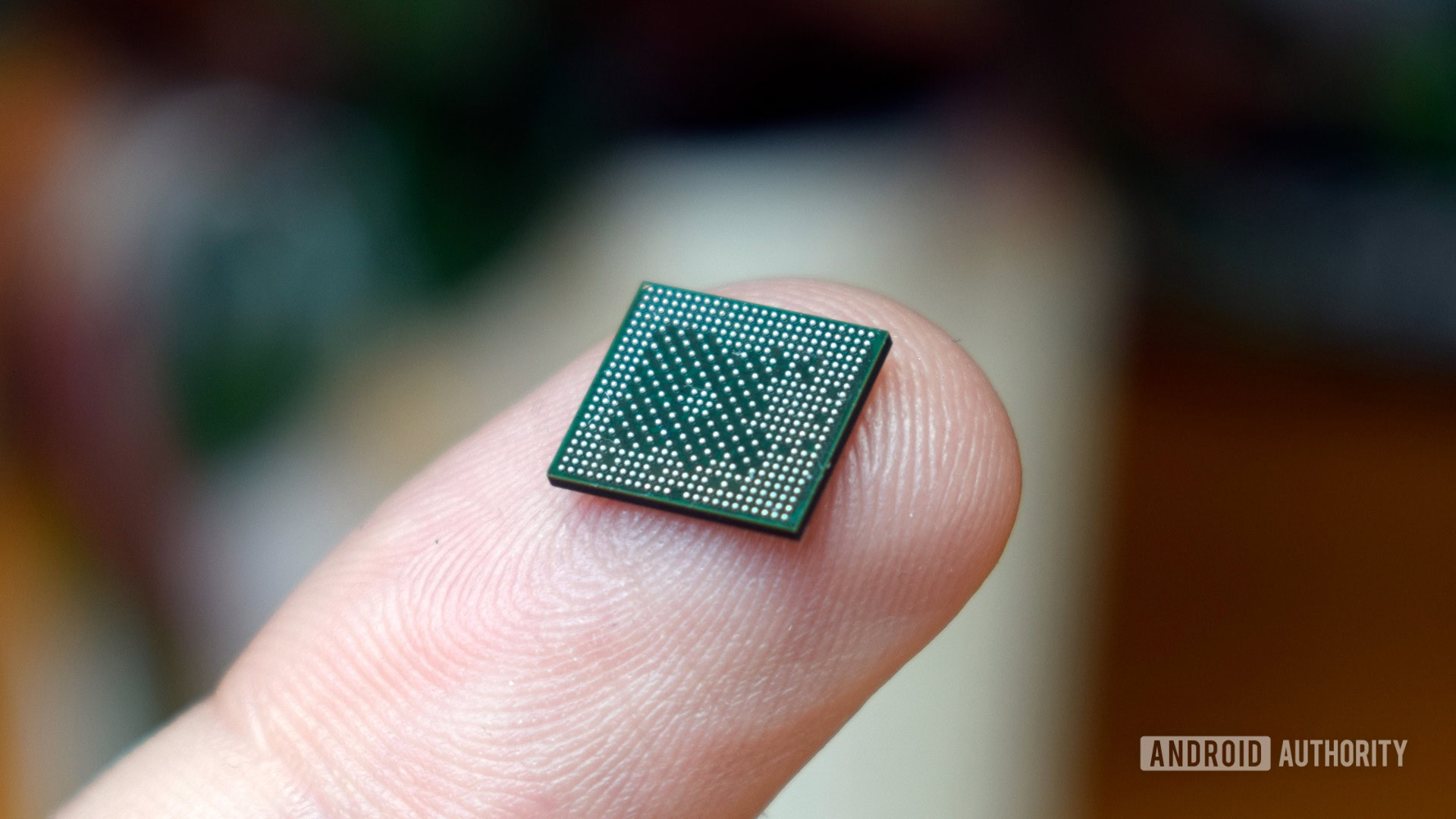

AI training and inference require colossal amounts of memory, specifically High Bandwidth Memory (HBM). HBM is purpose-built for accelerators like NVIDIA’s H100 GPU and GH200 superchip, offering the throughput needed to handle vast neural network models. Crucially, HBM is fabricated using the same advanced semiconductor process nodes employed for standard DDR5 and LPDDR (Low-Power Double Data Rate) memory.

This manufacturing overlap creates an intense resource allocation conflict. Industry analysis confirms that HBM offers DRAM manufacturers profit margins significantly higher—often two to five times greater—than traditional server (DDR) or mobile (LPDDR) memory. Consequently, manufacturers have aggressively pivoted their capital expenditure and wafer allocation toward HBM production since 2023. This strategic prioritization means less capacity is dedicated to the memory types essential for consumer electronics.

The scale of the AI commitment is staggering. Recent industry reports detailing long-term supply agreements, such as those involving OpenAI’s anticipated "Stargate" infrastructure, project memory requirements that could consume nearly 900,000 DRAM wafers per month if fully realized. While these figures represent letters of intent rather than firm orders, they signal an overwhelming long-term commitment from hyperscalers, effectively establishing mobile and standard PC memory as a tertiary concern in the production hierarchy.

Furthermore, the scarcity is exacerbated by manufacturing caution. Following painful oversupply corrections in 2018 and 2022, DRAM suppliers are exhibiting pronounced margin discipline. They are deliberately eschewing rapid, large-scale capacity expansions that could risk triggering another price crash. This calculated restraint means that even as current memory prices soar, the industry is reluctant to flood the market, suggesting that the tight supply conditions are structurally engineered to persist.

The Mobile Connection: LPDDR in the Crosshairs

While smartphones utilize LPDDR5 or LPDDR5X, which are optimized for power efficiency rather than the raw bandwidth of server DDR5 or HBM, they are not immune. LPDDR chips are produced on the same cutting-edge process nodes. When fabrication plants (fabs) are running at maximum throughput to satisfy the high-margin HBM contracts, the allocation pool for LPDDR shrinks commensurately. Mobile DRAM, occupying the bottom rung of this profitability ladder, faces the most significant squeeze.
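The squeeze described above can be illustrated with a toy model. The sketch below assumes a fixed wafer budget that is handed out in strict order of profit margin. Real allocation decisions are far less mechanical, and every number here (capacity, demand, and margin figures), along with the function name `allocate_wafers`, is a hypothetical placeholder rather than industry data.

```python
def allocate_wafers(capacity, demand, margin):
    """Greedy toy model: serve products in descending margin order
    until the fixed wafer capacity is exhausted."""
    remaining = capacity
    allocation = {}
    for product in sorted(demand, key=lambda p: margin[p], reverse=True):
        granted = min(demand[product], remaining)
        allocation[product] = granted
        remaining -= granted
    return allocation


# Hypothetical relative margins (only the ordering matters in this model).
margin = {"HBM": 3.0, "DDR5": 1.5, "LPDDR": 1.0}
capacity = 100  # hypothetical wafer starts per period, arbitrary units

# Hypothetical demand before and after a surge in HBM orders.
before = allocate_wafers(capacity, {"HBM": 20, "DDR5": 50, "LPDDR": 40}, margin)
after = allocate_wafers(capacity, {"HBM": 45, "DDR5": 50, "LPDDR": 40}, margin)

print("before:", before)
print("after: ", after)
```

In this simplified model the lowest-margin product absorbs the entire shortfall when HBM demand grows, which is the dynamic the text attributes to mobile DRAM.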

This situation raises BOM costs for Original Equipment Manufacturers (OEMs). Research from firms like Counterpoint indicates that smartphone build costs are projected to rise by 10% to 25% by the end of the current year, with further hikes of 10% to 15% anticipated by mid-2026, directly attributable to memory chip price inflation. This pressure is particularly acute in the budget and mid-range segments, where component costs represent a larger percentage of the final retail price and profit margins are already razor-thin.
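To make the arithmetic concrete, the sketch below combines the two quoted ranges and shows why a budget phone feels the same increase more sharply. It assumes the second round of hikes compounds on top of the first, which the source does not state explicitly. The BOM shares of retail price (70% and 40%) and the helper names are hypothetical values chosen only for illustration.

```python
def compound_increase(*rates):
    """Total fractional increase after applying each rate in sequence."""
    total = 1.0
    for rate in rates:
        total *= 1.0 + rate
    return total - 1.0


def retail_rise(bom_share, bom_increase):
    """Retail price rise needed to pass a BOM increase through in full.

    bom_share is BOM cost as a fraction of retail price (hypothetical input).
    """
    return bom_share * bom_increase


# Ranges quoted in the text: 10-25% first, then a further 10-15%.
low = compound_increase(0.10, 0.10)
high = compound_increase(0.25, 0.15)
print(f"cumulative build-cost increase: {low:.1%} to {high:.2%}")

# Hypothetical BOM shares: components weigh more heavily in budget devices.
budget = retail_rise(0.70, low)
flagship = retail_rise(0.40, low)
print(f"retail rise at the low end of the range: "
      f"budget {budget:.1%}, flagship {flagship:.1%}")
```

Under these assumptions the cumulative increase lands at roughly 21% to 44%, and the budget device needs a proportionally larger retail price rise than the flagship to absorb the same percentage change in build cost.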

The timeline for relief is extended. Major capacity additions, such as SK Hynix’s M15X facility and Micron’s new Idaho fabs, are scheduled to come online in 2026 and 2027, respectively. Given the lead time required for these facilities to ramp up to full operational efficiency, meaningful price stabilization in the consumer memory sector may not materialize until 2028. This timeline dictates that the hardware decisions made for flagship devices launching in 2026 and budget devices in 2027 will be made under sustained cost duress.

Industry Implications: OEM Strategy Adjustments

Faced with unavoidable increases in key component costs, smartphone manufacturers possess limited mechanisms to preserve profitability without alienating their customer bases through outright price hikes. These mechanisms involve strategic trade-offs across memory configuration, software optimization, and feature prioritization.

1. RAM Capacity Downgrades: The most direct response is a reduction in the standard memory configuration shipped with new devices. While high-end flagships have normalized at 12GB or 16GB of LPDDR, the current supply squeeze suggests these configurations may become rarer or be relegated to the absolute top-tier "Ultra" models. Evidence of this trend is already visible in adjacent categories, with reports indicating production delays for certain handheld gaming consoles specifically due to the lack of affordable memory supply. Consumers should anticipate a potential regression in base-model RAM, with 8GB becoming the new high-water mark for many standard flagships and 6GB or even 4GB configurations potentially returning to the entry-level market. Such a move carries significant implications for multitasking and future operating system overhead.

2. Marketing Shift to Storage: To counteract the narrative of "less RAM," OEMs may pivot marketing emphasis toward higher internal NAND flash storage capacities. A device offered with 8GB of RAM and 256GB of storage might be positioned more aggressively against a competitor’s 12GB/128GB model, shifting the perceived value proposition away from instantaneous working memory toward long-term data capacity. This tactic relies on consumers valuing storage over RAM availability for their typical usage patterns.

3. Increased Reliance on Software Workarounds: The industry will likely lean more heavily on sophisticated memory management techniques. Technologies like zram (a compressed swap area held in RAM) and proprietary "Memory Extension" features, which use fast UFS storage as virtual swap space, will move from being niche selling points to essential operational components. While these software solutions can mitigate the immediate impact of lower physical RAM, they introduce latency penalties, and storage-backed swap places higher sustained demands on the flash storage subsystem. Expert analysis suggests that while software compression is effective, it cannot fully substitute for physical DRAM capacity and bandwidth, particularly under heavy multitasking loads or demanding AI applications executed on-device.

4. Feature De-Contenting: For brands operating in highly price-sensitive segments (mid-range and budget), the higher BOM cost of memory may necessitate cost reductions elsewhere in the device architecture. This can manifest as the elimination of secondary camera modules, downgrades in display technology (e.g., moving from 120Hz OLED to 90Hz or 60Hz LCD), removal of premium features like wireless charging, or the abandonment of stringent ingress protection (IP) ratings. These compromises directly impact the overall value proposition of otherwise competent hardware.
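The trade-off behind the software workarounds in item 3 can be sketched in a few lines. The example below compresses synthetic 4 KiB "pages" and reports how much space is saved and how long it takes. It is only an illustration: it uses Python's standard `zlib` as a stand-in (kernel-level compressed swap typically relies on faster codecs such as LZ4 or zstd), the page contents are made up, and the helper names are hypothetical.

```python
import os
import time
import zlib

PAGE_SIZE = 4096  # bytes; a common page size, assumed for illustration


def compress_pages(pages, level=1):
    """Compress each page on its own; return (compression ratio, seconds spent)."""
    start = time.perf_counter()
    packed = [zlib.compress(page, level) for page in pages]
    elapsed = time.perf_counter() - start
    ratio = sum(len(p) for p in pages) / sum(len(c) for c in packed)
    return ratio, elapsed


def repetitive_page(i):
    """Synthetic page filled with a repeating pattern (compresses well)."""
    pattern = b"state=%04d;" % i
    return (pattern * (PAGE_SIZE // len(pattern) + 1))[:PAGE_SIZE]


# Two synthetic workloads: highly repetitive data and incompressible data.
repetitive = [repetitive_page(i) for i in range(256)]
random_like = [os.urandom(PAGE_SIZE) for _ in range(256)]

ratio_rep, secs_rep = compress_pages(repetitive)
ratio_rand, secs_rand = compress_pages(random_like)

print(f"repetitive pages: ratio {ratio_rep:.1f} in {secs_rep * 1000:.2f} ms")
print(f"random pages:     ratio {ratio_rand:.2f} in {secs_rand * 1000:.2f} ms")
```

The repetitive pages shrink substantially while the random pages do not shrink at all, yet both cost CPU time. That is the sense in which compression stretches capacity for some workloads without replacing physical DRAM.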

Future Trajectories: Architectural Adaptation and Consumer Expectation

The current memory crisis forces a crucial re-evaluation of hardware development cycles. The expectation that annual smartphone refreshes will bring proportional increases in core specifications, particularly RAM, is now under threat. If OEMs cannot reliably source 16GB LPDDR modules at competitive prices, the iterative hardware improvement curve will flatten.

This scenario creates a unique opportunity for system-on-chip (SoC) designers to innovate around memory efficiency. Future SoCs will need to feature more advanced on-die caching or highly optimized memory controllers that can extract maximum performance from lower physical LPDDR capacities. Success in this area could allow manufacturers to maintain performance parity despite lower RAM counts.

Furthermore, the distinction between high-end and mid-range devices may blur in terms of memory allocation. If the cost barrier is too high, flagships might only offer incremental RAM increases (e.g., 12GB standardizing instead of moving to 16GB), while budget phones are forced to retain older, less efficient LPDDR generations for longer periods to manage costs.

Consumer expectations, long conditioned to treat rising RAM allocations as a sign of "future-proofing," must also adjust. Buyers may need to accept that the "sweet spot" for flagship RAM will settle around 12GB for the immediate future, or pay significantly higher entry prices for configurations exceeding that threshold. The reality is that the AI revolution, while promising incredible computational advances, is currently starving the consumer electronics sector of essential building blocks. The devices launched in 2026 will likely bear the scars of this supply chain conflict in the form of higher prices, stagnant RAM tiers, or a subtle degradation in auxiliary features across the board. The era of guaranteed, year-over-year memory upgrades in mobile hardware appears to be temporarily suspended by the insatiable appetite of cloud AI infrastructure.