Google is accelerating the global deployment of its generative AI tools, announcing a significant expansion of natural language-based photo editing within Google Photos. The feature, which lets users carry out complex image manipulations with simple text prompts, is now rolling out in three key markets: India, Australia, and Japan. The move is more than a feature update; it marks a major inflection point in how mass-market consumers interact with high-fidelity editing tools, effectively dissolving the barrier between casual photography and professional-grade post-production.

The core technology behind this expansion, first introduced in limited form on Google's flagship Pixel devices in the United States, translates colloquial language into precise editing instructions. Instead of navigating intricate menus or adjusting multiple sliders for exposure, saturation, or object selection, users who select a photo are presented with a "Help me Edit" dialogue box. There they can type directives ranging from straightforward corrections to highly imaginative transformations, such as "remove the motorcycle in the background," "smooth the harsh shadow on my face," or the more ambitious "change the sky to a vibrant sunset."

This capability extends far beyond basic filters. The underlying generative model enables nuanced adjustments that were previously confined to high-end desktop software such as Adobe Photoshop. Users can ask the AI to fix a friend's pose, remove unwanted elements such as a pair of glasses, or correct common photographic mishaps, for example by instructing the system to digitally "open" the eyes of a person who blinked mid-shot.

The Strategic Imperative: Global Accessibility and Localization

The geographical selection of India, Australia, and Japan for this rollout underscores Google’s commitment to capturing diverse, high-growth, and technologically advanced consumer bases simultaneously.

In India, the expansion holds particular strategic importance. With hundreds of millions of smartphone users, the market is driven by volume, yet familiarity with advanced editing software varies widely. By offering a conversational interface, Google removes the need for users to master complex graphical user interfaces. Crucially, Google is adding extensive regional language support beyond English, including Hindi, Tamil, Marathi, Telugu, Bengali, and Gujarati. This deep localization makes the editing tool accessible to a massive population segment in their native languages, which should accelerate adoption in a market where linguistic diversity is paramount.

For Australia and Japan, the feature targets a highly engaged, affluent consumer demographic already accustomed to premium mobile computing experiences. In these markets, the competitive advantage lies in speed and convenience. Users are demanding seamless integration of powerful AI tools into their daily workflows, favoring applications that minimize time spent on manual adjustments. This rollout positions Google Photos strongly against rival platforms and dedicated creative applications.

Technical Underpinnings: On-Device Intelligence

A key technical detail distinguishing Google's implementation is the reliance on on-device processing for the actual image manipulation. The feature leverages Google's Nano Banana image model (the popular nickname for Gemini 2.5 Flash Image), ensuring that the computational heavy lifting occurs directly on the user's device. The minimum requirements are modest (any Android device running Android 8.0 or higher with at least 4GB of RAM), meaning the technology is not restricted to the flagship Pixel line.

This decentralization of processing power offers three significant advantages:

- Speed and Latency Reduction: Processing locally largely eliminates the round trip of uploading high-resolution images to cloud servers, running the model remotely, and downloading the result, which is crucial for a real-time editing experience.

- Reliability in Emerging Markets: In areas like India, where mobile connectivity infrastructure can be inconsistent or bandwidth-constrained, on-device editing ensures the feature remains fully functional even without a stable internet connection.

- Enhanced Privacy: Keeping the core generative editing process local minimizes the transfer of sensitive personal image data to Google’s cloud servers, addressing growing global concerns regarding data sovereignty and privacy.

Industry Implications: The End of the Editing Barrier

This mass deployment marks a major step in the democratization of digital artistry. Historically, complex image manipulation was the domain of skilled professionals using expensive, license-based software. A natural language interface lowers the expertise barrier dramatically: the user no longer needs to understand concepts like masking, layering, or generative fill algorithms, only to articulate their creative intent.

This trend forces established industry players, particularly Adobe, to reassess their mobile strategy. While Adobe has integrated generative AI (Firefly) into its ecosystem (including Adobe Express and mobile Photoshop), Google Photos benefits from its position as the default photo management and storage solution for billions of Android users. This integration means the powerful editing tools are available where the photos already live, eliminating the friction of exporting, importing, and switching applications.

Expert analysts suggest that this consumer-facing AI editing war is moving beyond simple feature parity toward ecosystem lock-in. Companies that can provide intuitive, powerful, and integrated AI tools within their core operating systems or default applications will gain a substantial advantage in user retention and data collection. The Google Photos strategy ensures that the user’s creative journey begins and ends within Google’s own infrastructure.

Furthermore, the expansion builds upon Google’s previous AI investments in the platform. Last November, the company significantly broadened its AI-powered search capabilities, extending smart object and context-based searching to over 100 countries and 17 languages. The recent addition of tools like "Meme me," which allows users to create personalized memes by combining their photos with existing templates, reinforces the platform’s transformation from a simple storage solution into a comprehensive, AI-driven content creation hub.

Addressing the Credibility Crisis: C2PA and Content Credentials

The rapid acceleration of generative AI capabilities introduces complex ethical challenges, particularly concerning authenticity and misinformation. As AI becomes adept at altering real-world imagery with high fidelity (e.g., removing objects, changing expressions, or generating entirely new scenarios), the need for transparency becomes paramount.

In response, Google is proactively rolling out support for the C2PA (Coalition for Content Provenance and Authenticity) Content Credentials standard in the newly supported countries. C2PA is an open, technical standard that provides verifiable metadata attached to digital content, indicating its origin and history of modification.

In Google Photos, this means that any image edited or created using the generative AI tools will carry embedded credentials signaling that AI was involved. This crucial metadata allows users, news organizations, and social media platforms to understand the provenance of an image. If a photo has been altered by asking the AI to "remove the crowd," the C2PA credentials will document that generative editing step.
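To make "verifiable metadata" concrete, here is a deliberately simplified Python sketch of the two ideas described above: binding a provenance record to the exact image bytes with a cryptographic hash, and listing a generative editing step so that software can detect AI involvement. It is not the C2PA manifest format and omits the certificate-based signatures that real Content Credentials rely on. The names `ProvenanceRecord`, `record_edit`, `verify`, and `used_generative_ai`, as well as the record's structure and its `prompt` field, are hypothetical. Only the `c2pa.edited` action label and the IPTC `compositeWithTrainedAlgorithmicMedia` source type are borrowed from the standard's vocabulary.

```python
import hashlib
from dataclasses import dataclass, field

# IPTC "digital source type" term for media that combines captured content
# with AI-generated elements; C2PA action entries can reference it.
AI_COMPOSITE = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/"
    "compositeWithTrainedAlgorithmicMedia"
)


@dataclass
class ProvenanceRecord:
    """Hypothetical, simplified stand-in for a C2PA manifest (unsigned)."""
    asset_hash: str
    actions: list = field(default_factory=list)


def record_edit(image_bytes: bytes, prompt: str) -> ProvenanceRecord:
    # Bind the record to the exact edited pixels by hashing the file contents.
    digest = hashlib.sha256(image_bytes).hexdigest()
    action = {
        "action": "c2pa.edited",            # label from the C2PA action vocabulary
        "digitalSourceType": AI_COMPOSITE,  # marks the step as AI-assisted
        "prompt": prompt,                   # hypothetical field, for illustration
    }
    return ProvenanceRecord(asset_hash=digest, actions=[action])


def verify(image_bytes: bytes, record: ProvenanceRecord) -> bool:
    # A mismatch means the file was changed after the record was written.
    return hashlib.sha256(image_bytes).hexdigest() == record.asset_hash


def used_generative_ai(record: ProvenanceRecord) -> bool:
    # True if any recorded step is tagged with the AI source type.
    return any(a.get("digitalSourceType") == AI_COMPOSITE for a in record.actions)


# Usage: record a "remove the crowd" edit, then check it.
edited = b"...edited image bytes..."
rec = record_edit(edited, "remove the crowd")
print(verify(edited, rec))         # True
print(verify(edited + b"x", rec))  # False: bytes no longer match the hash
print(used_generative_ai(rec))     # True
```

The hash alone only shows that the image matches the record. In the real standard, the manifest is also digitally signed, so a platform can additionally confirm who issued the record and that the record itself was not altered.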

This move aligns with broader industry efforts, including Meta's, to label AI-generated and AI-modified content effectively. By integrating C2PA into a widely used consumer product, Google is setting a precedent for responsible AI deployment and helping mitigate the spread of deepfakes and digitally manipulated media, a critical requirement for maintaining platform trust in an increasingly synthesized digital world.

Future Impact and Trends: The Path to Multimodal Creativity

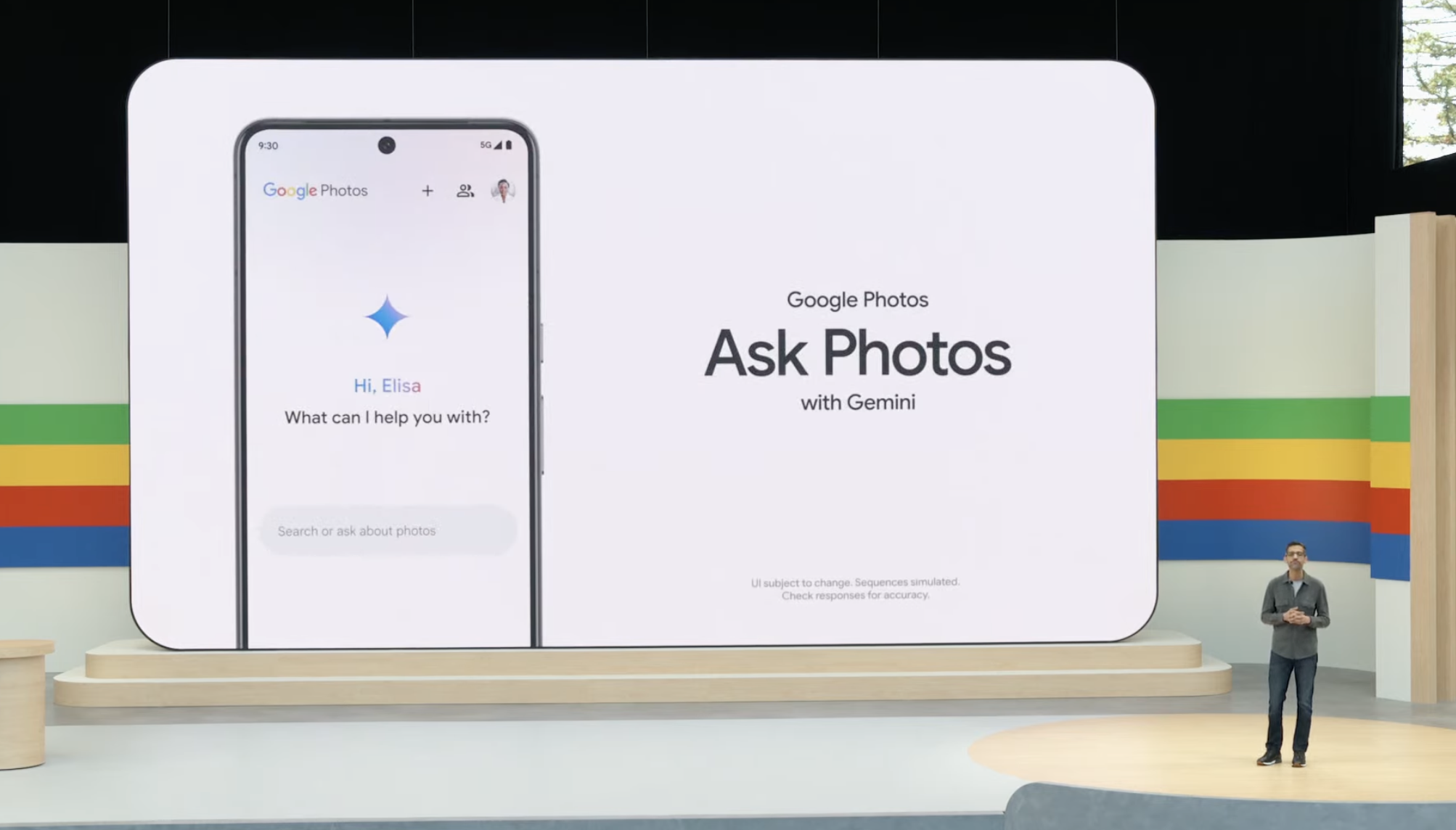

The deployment of conversational editing capabilities serves as a foundational layer for more complex future interactions. The immediate trend points toward the convergence of photo editing with the "Ask Photos" feature, which utilizes large language models (LLMs) to answer highly contextual questions about a user’s stored memories. Imagine a future prompt: "Show me all photos from my trip to Sydney where I am standing near water, then make the background less distracting and post it to Instagram." This combines retrieval, complex editing, and external application integration into a single conversational thread.

Furthermore, the success of prompt-based image editing points toward the future of video editing. Video, though computationally more demanding, is likely to follow a similar trajectory, moving away from timeline-based editing toward natural language instructions. Users may eventually be able to say, "Cut the video before the dog runs out of frame, stabilize the shaky parts, and add cinematic color grading."

The underlying shift is cognitive: users are moving from being operators of software to being directors of AI models. This evolution significantly increases the creative capacity of the average user, allowing complex, multi-step creative visions to be realized in seconds.

In summary, Google’s aggressive international expansion of conversational AI editing is a strategic maneuver that positions Google Photos as the definitive, accessible, and ethically transparent AI creation platform for the global mass market. By pairing advanced, device-localized models with critical language and provenance support (C2PA), Google is not just updating an application; it is fundamentally reshaping the landscape of consumer digital media creation across some of the world’s most dynamic technology markets. The long-term implications point toward an era where complex digital artistry is a conversational commodity, available to anyone with a smartphone.