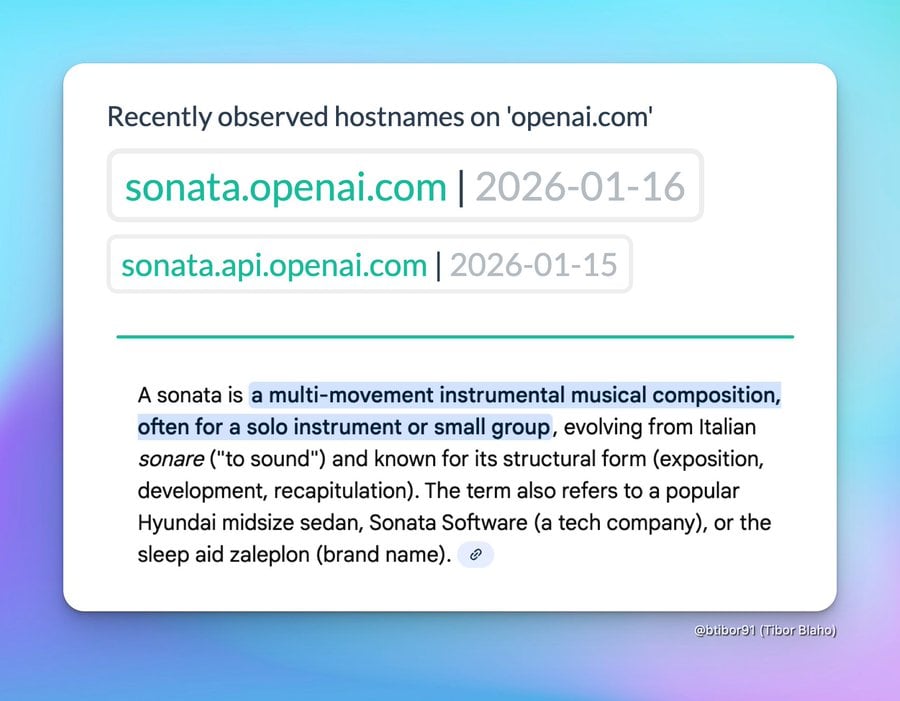

The digital breadcrumbs left in the wake of rapid technological development often provide the most tantalizing clues about future releases. In the ever-accelerating landscape of artificial intelligence, where major shifts can occur between product cycles, monitoring infrastructure changes has become a primary form of investigative journalism. Recently, observations of newly registered hostnames associated with OpenAI’s domain architecture suggest the active development of a significant new undertaking, internally designated by the codename "Sonata." These artifacts, specifically the provisioning of sonata.openai.com and its corresponding API endpoint, sonata.api.openai.com, signal the establishment of a dedicated, potentially externally facing service or a highly modular component integrated into the core OpenAI ecosystem.

The presence of these new subdomains, dated with future-looking registration timestamps (January 15th and 16th, 2026, for the API and main domain, respectively, as noted in external security research), confirms that OpenAI is actively allocating digital real estate for a project that requires its own distinct web presence and dedicated infrastructure scaffolding. In the context of large language models (LLMs) and multimodal AI, new hostnames rarely signify trivial updates; they generally preface the deployment of novel product tiers, specialized model endpoints, or entirely new user interfaces designed to handle distinct modalities of data interaction.

The choice of "Sonata" as a codename immediately invites speculation, particularly within the audio and music production communities. A sonata, in classical music theory, is a composition structure, usually involving multiple distinct movements played by a solo instrument or small ensemble. This inherent association strongly suggests an ambitious foray into audio processing, generation, or interaction within the ChatGPT framework. Given the industry’s current trajectory toward deeply integrated, multimodal AI experiences—moving beyond text-in, text-out—a dedicated audio initiative aligns perfectly with the competitive pressures facing OpenAI.

Contextualizing the Shift: The Multimodal Imperative

For context, OpenAI has spent the last few years steadily expanding ChatGPT’s capabilities beyond its initial text-only foundation. The integration of vision capabilities (GPT-4V) and, more critically, robust voice input and output functionalities has already begun blurring the lines between traditional software and ambient computing interfaces. The current dictation features, while functional, represent a foundational layer. "Sonata," if indeed tied to advanced audio, could represent the leap from basic transcription and voice response to genuine, high-fidelity generative audio capabilities.

This could manifest in several ways. One possibility is the development of a specialized model, perhaps an evolution of Whisper or a completely new architecture, optimized for complex, nuanced music generation or sophisticated sound design based on textual prompts. Imagine asking ChatGPT to generate a five-minute orchestral piece blending Baroque counterpoint with modern electronic textures, complete with specific emotional cues—a task requiring significantly more structural coherence and artistic understanding than current text-to-audio tools typically offer.

Alternatively, "Sonata" might refer to an advanced interactive audio workspace integrated into the ChatGPT platform. This wouldn’t just be about generation; it could involve real-time audio editing, mixing, mastering, or even collaborative composition sessions where the AI acts as a co-producer, capable of understanding and executing complex audio engineering instructions given conversationally. The dual hostnames—one for the web application (sonata.openai.com) and one for the API (sonata.api.openai.com)—suggest a comprehensive service, designed both for end-users through a polished interface and for enterprise integration via programmatic access.

Industry Implications: The Race for Generative Fidelity

The unveiling of a "Sonata" project would place significant competitive pressure on other major players. Google DeepMind, Meta, and specialized AI music startups are all investing heavily in generative audio. For OpenAI, securing a lead in this domain is crucial for maintaining its perception as the frontier innovator.

If Sonata delivers on high-fidelity, controllable audio generation, it immediately impacts several sectors:

- Creative Industries: Musicians, filmmakers, and game developers would gain an unprecedented tool for rapid prototyping, scoring, and sound effect creation. This democratization of high-end production tools, while exciting, also raises profound questions regarding intellectual property, authorship, and the displacement of entry-level creative roles.

- Interactive Media: Enhanced audio AI is essential for truly immersive virtual and augmented reality environments. A service like Sonata could provide dynamic, context-aware soundtracks that react instantly to user actions or narrative shifts within a simulation, moving beyond static background music.

- Accessibility and Communication: Advanced audio manipulation could lead to highly personalized synthetic voices that retain emotional nuance, crucial for accessibility tools or for creating digital avatars capable of deeply human-like speech patterns in customer service or educational contexts.

The API component is particularly telling. It signals that OpenAI views "Sonata" not merely as a consumer-facing ChatGPT add-on but as a foundational infrastructure layer ready to be licensed out to third-party developers building complex, proprietary applications requiring deep audio intelligence.

Expert Analysis: Deconstructing Codename Strategy

It is vital, as the original observation correctly notes, to treat codenames with caution. They are strategic misdirections as much as they are internal identifiers. However, OpenAI’s recent pattern of development—integrating memory, improving citation capabilities, and enhancing real-time interaction—suggests a consistent focus on making AI assistants more competent and less prone to hallucination or context loss.

The existing improvements, such as the recent feature allowing ChatGPT to reliably locate and cite specific details from past conversations, demonstrate a commitment to building reliable memory into the core product. This reliability is essential groundwork for any advanced feature. You cannot build a complex musical composition (a sonata) with an AI that cannot reliably recall the key signature established in the first movement. Therefore, "Sonata" might not just be a new capability, but a new level of contextual mastery applied to audio data.

From an architectural standpoint, dedicating a subdomain implies a separation of concerns. This often happens when a new service requires a different computational stack—perhaps specialized hardware accelerators optimized for audio processing (like custom TPUs or optimized GPUs clusters) that are distinct from the clusters primarily serving text inference. This modularity allows for independent scaling, deployment, and rigorous security auditing of the new feature without disrupting the core LLM service. The API subdomain confirms that this service will be callable directly, indicating a robust, production-ready back-end infrastructure is being prepared well in advance of public release.

Future Impact and Emerging Trends in AI Audio

The evolution hinted at by "Sonata" aligns with a broader industry trend: the shift from large, monolithic models to ensembles of specialized, highly efficient models working in concert. Instead of one massive model handling everything, we see specialized components handling vision, code interpretation, and, potentially, deep audio synthesis.

The success of "Sonata" will likely be judged on three key metrics: controllability, fidelity, and latency.

- Controllability: Can users direct the output with granular precision? Can they specify instrumentation, tempo shifts, harmonic progression, and dynamic range using natural language, or perhaps even by drawing conceptual sketches or providing reference audio snippets? If Sonata offers true artistic control, it becomes a disruptive force.

- Fidelity: Does the generated audio sound artifact-free and indistinguishable from human-created, high-quality recordings? Early generative audio often suffers from metallic artifacts or unnatural transients. Breaking through this fidelity barrier is the current holy grail.

- Latency: For interactive use cases—such as live accompaniment or real-time voice modulation—the delay between command and execution must be near-instantaneous. The dedicated API infrastructure suggests a strong focus on optimizing this speed for enterprise deployment.

Furthermore, the development of "Sonata" must run concurrently with intense legal and ethical scaffolding. Generative music models are facing increasing scrutiny over the provenance of their training data. If OpenAI intends for Sonata to be commercially viable, they must develop transparent mechanisms to ensure the generated output is not merely a complex remix of copyrighted material, or they must commit to training exclusively on licensed or public domain data, a massive undertaking in itself. This ethical layer will be as critical to the product’s long-term viability as its technical performance.

In conclusion, the discovery of sonata.openai.com is more than a simple domain registration; it is a strong indicator of OpenAI’s strategic next step into advanced, generative multimodal AI. While the name strongly suggests a revolution in music or complex audio interaction within the ChatGPT ecosystem, the infrastructure preparation points toward a modular, high-performance service ready for both consumer adoption and broad developer integration. The tech world watches keenly for the movements of this potential "Sonata," anticipating a composition that could redefine human-computer interaction in the auditory realm.