Scientific research today is advancing on two very different fronts: foundational human biology and large-scale computation. Researchers are learning to reproduce the subtle, critical early stages of human development in vitro, while AI companies race to deploy models with an unprecedented number of adjustable parameters. These advances, one rooted in molecular biology and the other in digital engineering, present immense opportunities for medical understanding and economic restructuring, but they also bring immediate, complex ethical and regulatory challenges.

The Genesis of Life: Modeling Human Implantation Ex Vivo

One of the most profound and historically opaque stages of human development is the moment of implantation, when a nascent embryo anchors itself to the uterine lining. This event—the formal initiation of pregnancy—is notoriously difficult to study, as it occurs hidden deep within the body and is often the point of earliest failure in both natural and assisted reproduction cycles.

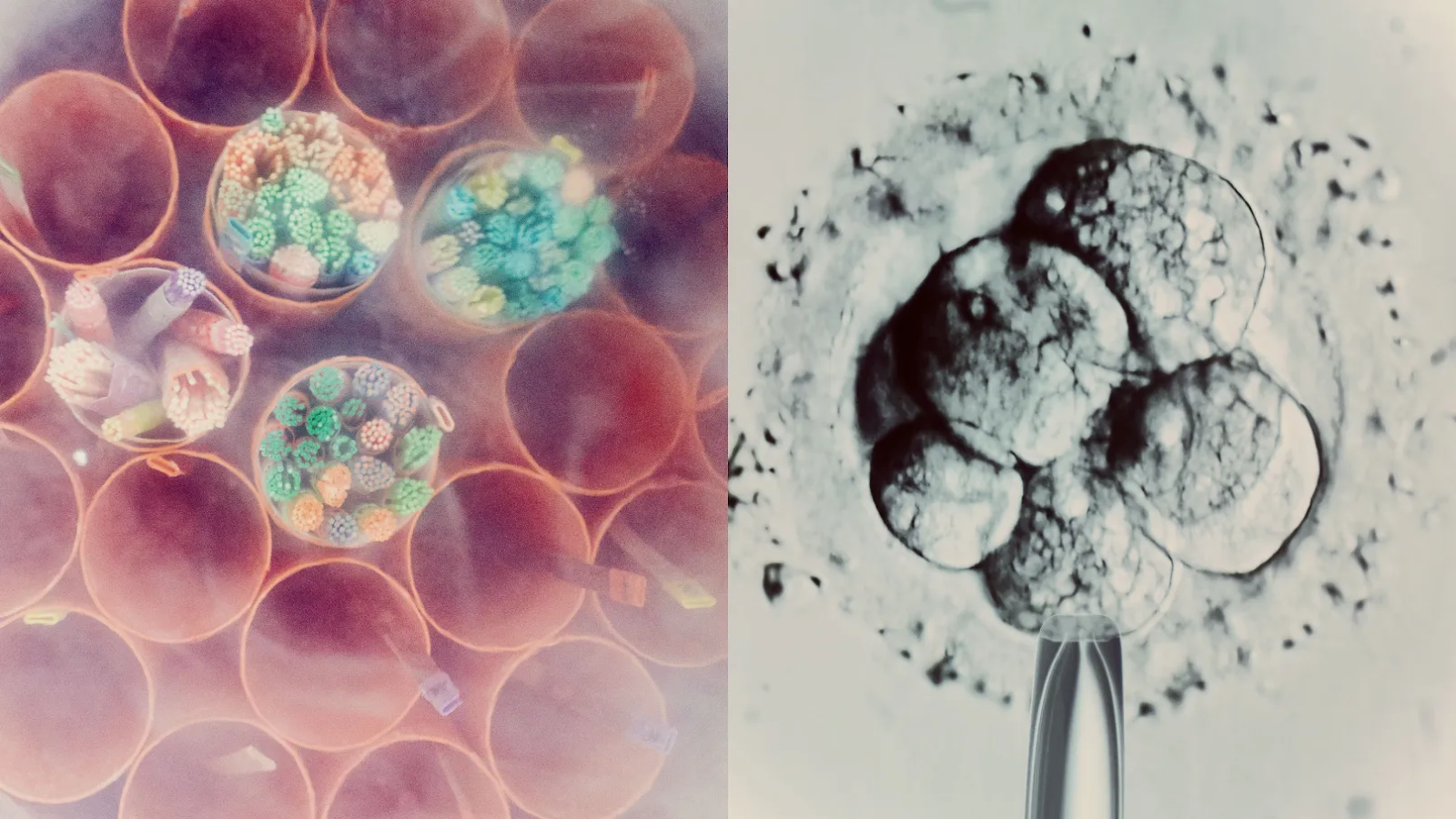

Recently published work from laboratories in Beijing, reported across several Cell Press journals, details the most successful attempts yet to replicate this biological milestone outside a living organism. Scientists combined human embryos, sourced from IVF facilities, with carefully constructed "organoids": three-dimensional cellular structures engineered from endometrial cells, the tissue that forms the uterine lining. The process takes place within microfluidic chips, often called ‘organ-on-a-chip’ systems, which mimic the physiological microenvironment, including nutrient flow and biochemical signaling.

The images captured by these teams are startlingly similar to the natural process: a spherical blastocyst pressing against the pseudo-endometrium, grasping hold, and beginning the critical burrowing action that precedes the formation of the placenta’s first tendrils.

Industry and Ethical Implications of Synthetic Implantation

The ability to observe and manipulate implantation ex vivo marks a seismic shift in reproductive medicine and developmental biology. Previously, research into early pregnancy was severely limited, often relying on animal models which fail to capture the nuances of human cellular interaction. This new methodology provides a high-fidelity platform to:

- Investigate Recurrent Pregnancy Loss: Researchers can now study why certain embryos fail to implant successfully, offering potential diagnostic tools and therapeutic targets for the millions of couples struggling with infertility.

- Drug Toxicology and Teratogenesis: The system allows for the safe testing of pharmaceuticals to determine their impact on early placental development without exposing a patient or fetus to risk.

- Optimizing IVF: By better understanding the molecular dialogue between the embryo and the uterine lining, IVF protocols may be refined to significantly boost success rates.

However, this scientific triumph instantly reignites critical bioethical debates. By sustaining human embryos in an artificial environment for extended periods, scientists push against established ethical boundaries, particularly the widely adopted "14-day rule," which caps in vitro culture at 14 days after fertilization, roughly the point at which the primitive streak appears and well before any nervous system forms. While the primary goal of the current research is observational and therapeutic, success in facilitating implantation in a lab setting inevitably raises questions about the future possibility of full gestation outside the human body, forcing legislators and ethicists to reconsider the definition of life and personhood in a technological context.

This complex ethical landscape is already strained by existing technology. Millions of human embryos created through In Vitro Fertilization (IVF) procedures currently reside in perpetual cryopreservation—a "strange limbo," as some experts describe it. These tiny balls of cells, holding the potential for life, represent a massive and growing logistical, legal, and moral challenge globally. As IVF success rates rise and storage technology improves, the population of cryopreserved embryos swells. Since there is no universal consensus on the moral status of these embryos—are they property, potential life, or something unique—patients, clinics, and legal systems struggle with questions of disposition, ownership, and responsibility, particularly in cases of divorce, death, or legislative changes that restrict reproductive rights. The advancement of implantation technology only underscores the urgency of establishing clearer, globally recognized governance structures for human reproductive material.

The Architecture of Intelligence: Demystifying the Trillion-Parameter Race

Shifting from the microscopic complexity of biology to the astronomical scale of digital intelligence, the performance of modern Large Language Models (LLMs) is inextricably linked to the concept of the ‘parameter.’ While often reduced to a metaphorical measure—the "dials and levers" controlling behavior—a parameter is, in reality, a fundamental component of the artificial neural network structure: a weight or a bias.

In a deep learning model, data flows through layers of interconnected nodes (neurons). Each connection between neurons is assigned a numerical value, or weight, which determines how strongly that connection influences the next node. Each node also has a bias term, an offset added to its weighted inputs that helps the model fit data better. These weights and biases are the parameters: the variables the model adjusts during training. As the model processes billions of data points, backpropagation computes how much each parameter contributed to the prediction error, and an optimizer such as gradient descent nudges each value to reduce that error. Together, the trained values encode the model’s learned understanding of language, logic, and the relationships within the massive training dataset.
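The description above can be made concrete with a minimal sketch in plain Python. The layer sizes, function names, and learning rate are hypothetical, chosen only for illustration; real LLMs use far larger and more elaborate architectures, so this shows the idea of weights, biases, and a training update rather than any production model.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of nodes per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per connection between the two layers
        biases = n_out          # one bias per node in the receiving layer
        total += weights + biases
    return total


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias offset (no activation function)."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def train_step(inputs, target, weights, bias, learning_rate=0.1):
    """One gradient-descent update for a single linear neuron under squared error."""
    error = neuron_output(inputs, weights, bias) - target
    # For the loss 0.5 * error**2, the gradient with respect to each weight is
    # error * input, and the gradient with respect to the bias is error.
    new_weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * error
    return new_weights, new_bias


# Hypothetical toy network: 4 inputs, 8 hidden nodes, 2 outputs.
print(count_parameters([4, 8, 2]))  # (4*8 + 8) + (8*2 + 2) = 58
```

Calling `train_step` repeatedly on the same example moves the neuron's output toward the target, which is the same adjust-and-repeat loop that, at vastly larger scale, tunes every parameter in an LLM.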

The Economics of Scale and the Rise of Secrecy

The history of LLMs shows a consistent scaling trend: given enough training data and compute, a model with more parameters generally produces more capable and nuanced outputs. OpenAI’s groundbreaking GPT-3, released in 2020, set a high bar with 175 billion parameters, more than 100 times the 1.5 billion of its predecessor, GPT-2. Training at this scale required vast computational resources (clusters of high-performance GPUs) and enormous energy consumption.
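A rough back-of-the-envelope calculation shows why a parameter count of this size demands so much hardware. The sketch below estimates only the memory needed to store the weights, assuming standard sizes for common numeric formats (4 bytes for float32, 2 for float16, 1 for int8). The function name is hypothetical, and the estimate ignores activations, optimizer state, and everything else a real training run must hold in memory, so actual requirements are considerably higher.

```python
# Storage size, in bytes, of one parameter in common numeric formats.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_parameters, dtype="float16"):
    """Memory needed to hold the parameters alone, in gigabytes (10**9 bytes)."""
    return num_parameters * BYTES_PER_PARAMETER[dtype] / 1e9


gpt3_parameters = 175_000_000_000  # the 175 billion figure quoted in the text

for dtype in BYTES_PER_PARAMETER:
    print(f"{dtype}: {weight_memory_gb(gpt3_parameters, dtype):.0f} GB")
# float32: 700 GB
# float16: 350 GB
# int8: 175 GB
```

Even at 16-bit precision, the weights alone occupy hundreds of gigabytes, more than a single accelerator holds, which is why models at this scale are split across many devices.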

However, the subsequent AI arms race has dwarfed even GPT-3. Contemporary flagship models, such as the latest versions of Google DeepMind’s Gemini, are rumored to have parameter counts well into the trillions, and some analysts speculate figures as high as seven trillion, though none of these numbers has been confirmed. The transition from billions to trillions represents not just an incremental improvement but a leap in complexity, enabling enhanced reasoning, multimodal integration, and greater contextual coherence.

Crucially, in the current fiercely competitive climate, the transparency that once defined AI research has evaporated. Unlike earlier models where academic papers detailed architecture and parameter counts, leading AI firms now treat these figures as closely guarded trade secrets. This secrecy poses significant challenges for external researchers, regulators, and industry analysts:

- Benchmarking Difficulty: Without knowing the precise architecture, comparing models becomes a matter of assessing output quality rather than technical efficiency or scale, hindering scientific validation.

- Resource Allocation: The jump to trillion-parameter models necessitates state-level capital investment in computing infrastructure (e.g., specialized AI chips and massive data centers). This concentration of resources further stratifies the industry, limiting advanced AI development to a handful of well-funded corporate and national actors.

- Safety and Auditing: The sheer complexity of models with trillions of parameters makes them increasingly difficult to audit for bias, safety vulnerabilities, or unexpected emergent behaviors, intensifying the "black box" problem. Understanding the internal mechanism, what makes the LLM "really tick," becomes markedly harder as the parameter count climbs.

Regulatory Turbulence and the Future of US Green Infrastructure

Technological progress is often shaped, and sometimes derailed, by regulatory and political friction, a trend vividly illustrated by the recent setbacks plaguing the US offshore wind industry. As a linchpin of ambitious national climate goals, the development of wind farms off the East Coast represents massive investment and a critical step toward grid decarbonization.

Yet, the sector has been thrown into disarray by sudden legal and administrative challenges. A December directive from the executive branch ordered an immediate pause on the leases and construction of five major wind farms. The official justification centered on concerns over potential radar interference caused by the rotating turbines—an issue long recognized and, according to developers, one they had proactively worked with government agencies to mitigate for years through design adjustments and siting decisions.

This sudden administrative action, seen by many developers as politically motivated given the timing and context, instantly triggered a wave of lawsuits. The resulting court battles will determine the immediate viability of billions of dollars in invested capital and will set crucial legal precedents for the future of large-scale renewable energy infrastructure in US waters.

The Broader Context of Tech Governance

The offshore wind controversy highlights a crucial tension in modern technology implementation: the struggle between long-term strategic goals (like climate mitigation) and short-term political or technical resistance. If technical concerns, even known and managed ones, can be weaponized to halt critical infrastructure, the reliability of government partnership in future large-scale energy projects is severely undermined.

This regulatory friction is mirrored across the broader technology ecosystem:

- AI Liability and Social Harm: The settlement of multiple lawsuits involving major tech entities (like Google and Character.AI) related to the deaths of young users underscores the accelerating legal recognition of harm caused by algorithmic companions and highly addictive digital environments. As AI integrates more deeply into daily life, lawmakers are increasingly targeting the lack of safeguards, moving AI governance from theoretical discussion to concrete legal liability.

- Health AI Risks: While companies like OpenAI introduce specialized health features (e.g., ChatGPT Health for medical results analysis), the industry faces intense scrutiny regarding safety. Expert analysis indicates that while LLMs can perform basic triage, they frequently fail to provide adequate advice for complex or niche medical inquiries, particularly women’s health issues. Furthermore, the softening of explicit warnings that "chatbots are not doctors" suggests a troubling normalization of using inadequately validated AI for critical health decisions, creating a significant liability risk.

- Geopolitical Tech Choke Points: International mergers and acquisitions are increasingly being used as political leverage. China’s probe into Meta’s acquisition of Manus, a startup that builds general-purpose AI agents, is viewed by analysts as a strategic move designed to secure a bargaining chip in ongoing trade and technology disputes with the US. This friction delays innovation and underscores the global fragmentation of the tech market along geopolitical lines.

Conclusion: Navigating Convergence

The current technological moment is defined by radical convergence and scaling. We are learning to model the first steps of human pregnancy in a laboratory dish while simultaneously constructing digital systems of previously unthinkable size. These two extremes, the growing command of biological complexity and the explosion of computational power, will shape the coming decades in medicine, economics, and human interaction.

The ability to study early human development in vitro could lead to new infertility treatments and insights into congenital diseases, yet it demands difficult ethical consensus on the definition and protection of nascent human life. Concurrently, the proliferation of trillion-parameter LLMs offers unprecedented automation capabilities, but the proprietary nature and sheer scale of these models call for a new approach to regulatory oversight, one that ensures safety and accountability before the technology restructures the global workforce.

Whether the challenge is ensuring the ethical handling of millions of frozen embryos, defining the responsibility of a trillion-parameter AI, or guaranteeing the stable deployment of critical green infrastructure, the overarching theme remains consistent: rapid technological acceleration requires equally swift, authoritative, and globally coordinated governance to harness the benefits while mitigating the profound risks. The future is being constructed today, cell by cell and parameter by parameter, but its long-term viability rests on the strength of the ethical and legal frameworks we establish now.