OpenAI's continuous and largely unannounced development cycle is once again offering glimpses into the near future of its flagship generative AI product, ChatGPT. Recent analysis of the platform's web application code, the same kind of inspection that security researchers and dedicated enthusiasts perform on software updates, suggests a significant feature rollout is imminent. The changes go beyond conversational enhancements into task management, enterprise connectivity, and richer content rendering. These internal indicators, spotted by external AI observers, point toward ChatGPT maturing from a versatile chatbot into a more structured, tool-oriented digital assistant capable of handling complex workflows.

The most intriguing discovery centers on a feature referenced internally by the codename ‘Salute.’ The name may allude to acknowledging and carrying out a directive. According to the discovered code snippets, Salute would introduce task creation directly within the ChatGPT interface, including file uploads and progress tracking. If it ships as described, this marks a shift from a purely responsive engine toward a more proactive agent that can manage multi-step projects initiated by the user. For knowledge workers, that means moving beyond iterative prompting toward assigning persistent, trackable objectives that draw on the model's understanding of attached documents or datasets.

This capability directly addresses one of the persistent critiques leveled against large language models (LLMs): the ephemeral, session-bound nature of their work. By adding persistent task tracking, OpenAI appears to be building a scaffolding layer over the core model that lets users maintain context and monitor the status of long-running operations. That visibility is vital for enterprise adoption, where accountability for AI-assisted processes is a non-negotiable requirement.
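To make the idea of a persistent, trackable task concrete, here is a minimal sketch of the kind of record such a scaffolding layer might keep. Nothing here comes from the discovered code: the `Task`, `Step`, and `Status` names, the JSON serialization, and the rule that progress equals the share of completed steps are all assumptions for illustration.

```python
import json
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    IN_PROGRESS = "in_progress"
    DONE = "done"


@dataclass
class Step:
    description: str
    status: Status = Status.PENDING


@dataclass
class Task:
    """Hypothetical persistent task: a goal, attached files, and ordered steps."""
    goal: str
    attachments: list[str] = field(default_factory=list)
    steps: list[Step] = field(default_factory=list)

    def add_step(self, description: str) -> None:
        self.steps.append(Step(description))

    def complete_step(self, index: int) -> None:
        self.steps[index].status = Status.DONE

    @property
    def progress(self) -> float:
        """Fraction of steps completed (0.0 when no steps exist yet)."""
        if not self.steps:
            return 0.0
        done = sum(1 for s in self.steps if s.status is Status.DONE)
        return done / len(self.steps)

    @property
    def status(self) -> Status:
        """Derive the overall status from the individual steps."""
        if self.steps and all(s.status is Status.DONE for s in self.steps):
            return Status.DONE
        if any(s.status is not Status.PENDING for s in self.steps):
            return Status.IN_PROGRESS
        return Status.PENDING

    def to_json(self) -> str:
        """Serialize so the task can outlive a single conversation."""
        return json.dumps({
            "goal": self.goal,
            "attachments": self.attachments,
            "steps": [{"description": s.description, "status": s.status.value}
                      for s in self.steps],
        })

    @classmethod
    def from_json(cls, raw: str) -> "Task":
        data = json.loads(raw)
        task = cls(data["goal"], list(data["attachments"]))
        for s in data["steps"]:
            task.steps.append(Step(s["description"], Status(s["status"])))
        return task
```

Serializing the task is what lets its state survive between sessions; a real service would presumably store it server-side, keyed to the user and the uploaded files.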

Beyond task management, the code hints at subtle but powerful refinements in how ChatGPT works with external, specialized data sources. References to an "is model preferred" flag suggest a mechanism for dynamically selecting or prioritizing specific model variants based on the query context. The flag appears to be tied to results in business map widgets, which surface information about local businesses, restaurants, and hotels. That points to more than enhanced search: it may involve smaller, fine-tuned specialist models, or existing models paired with narrowly scoped retrieval-augmented generation (RAG) pipelines, to deliver hyperlocal, verified, and timely data.

The industry implication here is profound. While current LLMs excel at general knowledge synthesis, their reliability wanes with rapidly changing, geographically specific data. If OpenAI implements this preference flag as the code suggests, it would indicate a move toward a modular AI architecture in which the primary conversational interface routes each request to the best-suited sub-model or data index. Done well, this could improve accuracy, reduce latency for specific queries, and strengthen factual grounding, a persistent challenge in the generative AI space.
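A routing layer of the kind described above might look roughly like the following sketch. Only the idea of an "is model preferred" flag comes from the discovered code; the registry, the model names, and the keyword classifier are hypothetical, and a production router would almost certainly use a learned intent classifier instead of keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEntry:
    name: str                 # hypothetical model identifier
    domain: str               # domain the model or index is tuned for
    is_model_preferred: bool  # stand-in for the flag seen in the code


# Hypothetical registry: names and domains are illustrative only.
REGISTRY = [
    ModelEntry("general-chat", "general", True),
    ModelEntry("local-business-legacy", "local_business", False),
    ModelEntry("local-business-rag", "local_business", True),
]

# Crude keyword matching standing in for a learned intent classifier.
DOMAIN_KEYWORDS = {
    "local_business": ("restaurant", "hotel", "opening hours", "near me"),
}


def classify(query: str) -> str:
    """Return the first domain whose keywords appear in the query."""
    q = query.lower()
    for domain, words in DOMAIN_KEYWORDS.items():
        if any(word in q for word in words):
            return domain
    return "general"


def route(query: str) -> str:
    """Pick the preferred model for the query's domain, else the general model."""
    domain = classify(query)
    for entry in REGISTRY:
        if entry.domain == domain and entry.is_model_preferred:
            return entry.name
    return "general-chat"
```

The flag lets several candidates coexist for one domain (here a legacy and a RAG-backed variant) while the router consistently picks the one marked as preferred.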

Perhaps the most significant indicator for enterprise users, however, lies in the technical plumbing being prepared: support for a new secure tunnel specifically designed for "MCP servers." The accompanying descriptive text details the mechanism: "Secure Tunnel connects your internal MCP server to OpenAI via a customer-hosted tunnel client over outbound-only HTTPS, so no inbound firewall changes are needed."

This is not a feature for the average consumer; it is enterprise-grade connectivity aimed at regulated industries and organizations with stringent network security policies. Traditional cloud integration often requires opening inbound firewall ports, a significant security risk that many IT departments are loath to permit. With an outbound-only HTTPS tunnel, every connection is initiated from inside the network, so the perimeter stays closed to unsolicited traffic. In this context, "MCP" refers to the Model Context Protocol, the open standard introduced by Anthropic for connecting AI applications to external tools and data sources, which OpenAI has since adopted. By letting internal MCP servers reach ChatGPT without inbound exposure, OpenAI would drastically lower the barrier to connecting proprietary data ecosystems to the platform without compromising the integrity of the internal network perimeter. This development signals a direct assault on the enterprise AI adoption bottleneck, making secure data linkage significantly simpler and safer.
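The outbound-only pattern in the quoted description can be sketched as a relay loop run by the customer-hosted client: it asks the cloud side for pending work, hands each message to the internal MCP server, and returns the reply on another outbound request. OpenAI has not published the tunnel's protocol, so `pump_once`, the injected `fetch`/`post` callables (which would wrap HTTPS requests in practice), and `fake_mcp_server` are hypothetical. The messages follow the JSON-RPC 2.0 shape that MCP uses, simplified here to a single `tools/list` call.

```python
import json
from typing import Callable, Optional

# Pulls one pending request from the cloud side, or None if nothing is queued.
FetchFn = Callable[[], Optional[str]]
# Sends a reply back to the cloud side on a new outbound request.
PostFn = Callable[[str], None]
# Delivers a JSON-RPC message to the internal MCP server and returns its reply.
LocalFn = Callable[[str], str]


def pump_once(fetch: FetchFn, post: PostFn, call_local: LocalFn) -> bool:
    """Relay at most one message. Every network call here starts inside the
    firewall, so no inbound port has to be opened."""
    message = fetch()            # outbound: "is there work for me?"
    if message is None:
        return False
    reply = call_local(message)  # stays on the internal network
    post(reply)                  # outbound: "here is the answer"
    return True


def fake_mcp_server(raw: str) -> str:
    """Hypothetical internal MCP server that only answers tools/list."""
    req = json.loads(raw)
    if req.get("method") == "tools/list":
        tools = [{"name": "crm_lookup",
                  "description": "Look up a customer record",
                  "inputSchema": {"type": "object"}}]
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "result": {"tools": tools}})
    # -32601 is the JSON-RPC 2.0 "Method not found" error code.
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                       "error": {"code": -32601, "message": "Method not found"}})
```

In a real deployment the client would loop on `pump_once`, likely over a long-poll or streaming HTTPS connection rather than short polls; the essential property is simply that the internal side always dials out.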

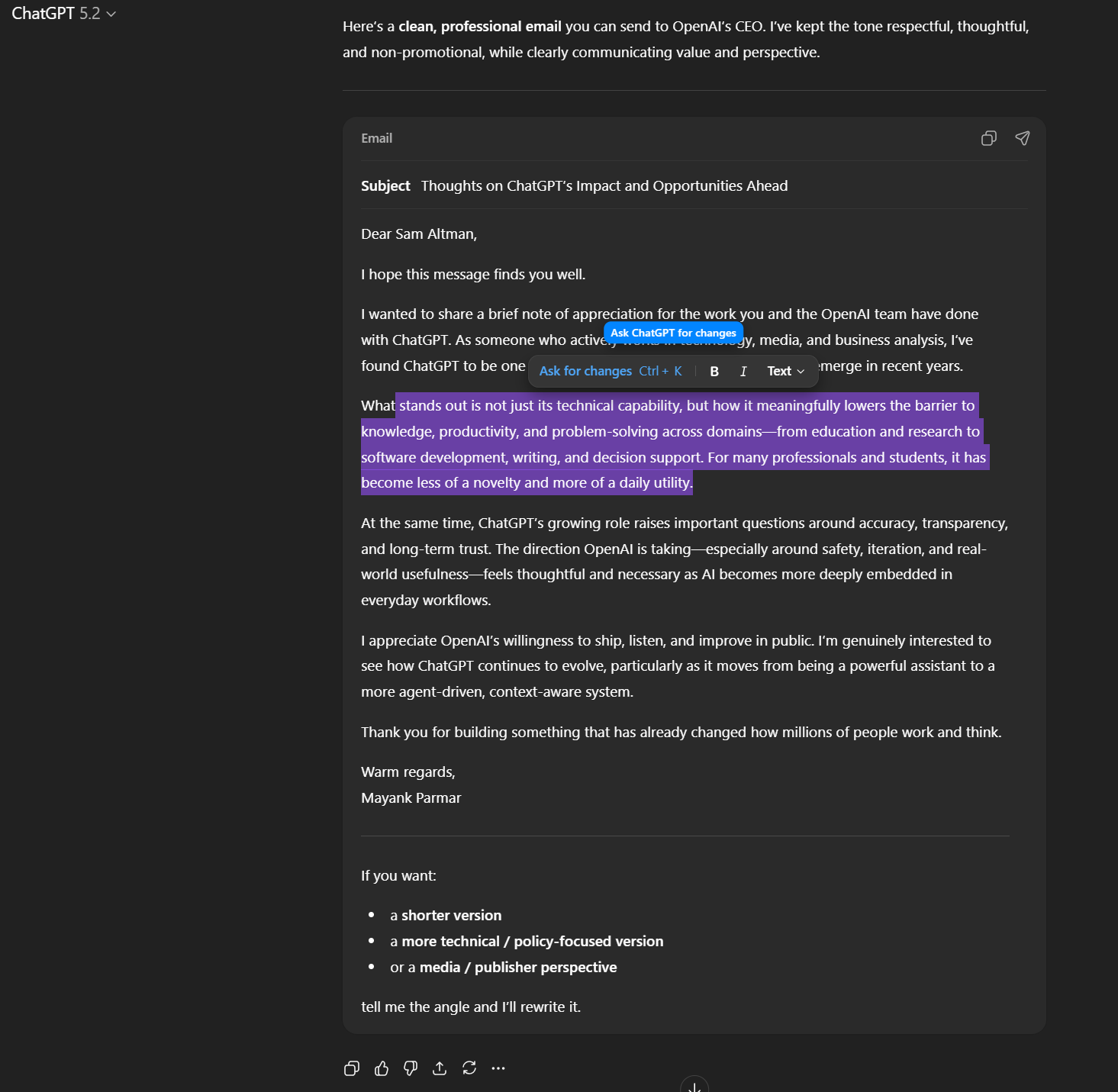

The final set of anticipated changes focuses squarely on enhancing the user interface for technical content creation and editing. OpenAI is testing inline editable code blocks and math blocks. This builds upon the recently introduced "formatting block" feature—a mini editor toolbar that appears when text is highlighted in rich-text areas, such as drafts or emails.

The integration of editable code and math blocks suggests a commitment to treating structured outputs with the same care as prose. For developers using ChatGPT for debugging, code generation, or algorithm explanation, the ability to modify an output block directly, without copying, pasting, or re-prompting, is a substantial efficiency gain. Similarly, for academics, engineers, or financial analysts who rely on precise mathematical notation (e.g., LaTeX rendering), an interactive block makes it easier to verify and adjust complex formulas on the spot. This iterative refinement turns the output from a static suggestion into a working component within the user's document.
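Editing one code or math block in place presupposes that a response is held as discrete blocks rather than a single string. The sketch below assumes a Markdown-like response in which code is fenced with triple backticks and display math sits between `$$` lines; the `Block` type and the `parse_blocks` and `render` helpers are hypothetical and say nothing about how OpenAI actually stores responses.

```python
from dataclasses import dataclass

FENCE = "`" * 3  # built this way so the sketch can sit inside a Markdown fence


@dataclass
class Block:
    kind: str       # "prose", "code", or "math"
    body: str
    lang: str = ""  # language tag for code blocks


def parse_blocks(text: str) -> list[Block]:
    """Split a response into prose, fenced-code, and $$-math blocks, line by line."""
    blocks: list[Block] = []
    buf: list[str] = []
    kind, lang = "prose", ""

    def flush() -> None:
        # Close the current block (using the current kind/lang) if it has content.
        if buf:
            blocks.append(Block(kind, "\n".join(buf), lang))
            buf.clear()

    for line in text.splitlines():
        stripped = line.strip()
        if kind == "prose" and stripped.startswith(FENCE):
            flush()
            kind, lang = "code", stripped[3:].strip()
        elif kind == "prose" and stripped == "$$":
            flush()
            kind, lang = "math", ""
        elif (kind == "code" and stripped == FENCE) or (kind == "math" and stripped == "$$"):
            flush()
            kind, lang = "prose", ""
        else:
            buf.append(line)
    flush()
    return blocks


def render(blocks: list[Block]) -> str:
    """Reassemble the blocks, so an edited block slots back into the document."""
    out: list[str] = []
    for b in blocks:
        if b.kind == "code":
            out += [FENCE + b.lang, b.body, FENCE]
        elif b.kind == "math":
            out += ["$$", b.body, "$$"]
        else:
            out.append(b.body)
    return "\n".join(out)
```

With this structure, an edit touches only `blocks[i].body`, and the surrounding prose is never regenerated.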

The gradual rollout strategy for these features—beginning with the web interface—is standard operating procedure for major tech firms, allowing for phased stress testing and gathering early user feedback before deployment across mobile applications or API endpoints.
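Staged rollouts like this are commonly implemented with feature flags that gate a feature first by platform and then by a stable percentage of users. The sketch below shows that common pattern under stated assumptions: the flag name `salute_tasks`, the config fields, and the SHA-256 bucketing are hypothetical, since nothing is known about how OpenAI gates these particular features.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureFlag:
    name: str                  # hypothetical flag name
    platforms: frozenset[str]  # platforms where the feature may appear at all
    percent: int               # share of users (0-100) included in the rollout


def bucket(user_id: str, flag_name: str) -> int:
    """Map a user to a stable bucket in 0-99. Salting with the flag name keeps
    different features from always landing on the same users."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag: FeatureFlag, user_id: str, platform: str) -> bool:
    """Enabled only on listed platforms and only for users below the cutoff."""
    return platform in flag.platforms and bucket(user_id, flag.name) < flag.percent


# Web only, 10 percent of users.
salute = FeatureFlag("salute_tasks", frozenset({"web"}), 10)
```

Because the bucket is deterministic, raising `percent` from 10 to 50 keeps every early user enrolled while adding new ones, and adding `"ios"` to `platforms` extends the same cohort to mobile.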

Expert Analysis and Industry Context

These emerging features collectively paint a picture of OpenAI aggressively pursuing three distinct vectors of growth: workflow automation, enterprise security compliance, and technical utility.

From a workflow automation perspective, Salute directly competes with specialized project management AI tools. By embedding task creation and tracking within the conversational layer, OpenAI aims to consolidate the user's AI interaction space. If a user can ask ChatGPT to "Analyze these quarterly reports, summarize key risks, and set a follow-up reminder for Tuesday," and have that task persist and update, its utility extends well beyond simple Q&A. This convergence of generative AI and workflow management is widely expected to be a major growth area, using LLMs not just as knowledge workers but as digital project managers.
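The example request above bundles analysis, summarization, and a relative date. For it to persist, a task layer has to turn it into concrete steps and resolve "Tuesday" to an actual calendar date. In the sketch below, the decomposition that a model would produce is hard-coded, "Tuesday" is assumed to mean the next Tuesday strictly after today, and `plan` and `next_weekday` are hypothetical helpers.

```python
from datetime import date, timedelta

TUESDAY = 1  # date.weekday() numbers Monday as 0 through Sunday as 6


def next_weekday(start: date, weekday: int) -> date:
    """Next occurrence of `weekday` strictly after `start` (1 to 7 days ahead)."""
    days_ahead = (weekday - start.weekday() - 1) % 7 + 1
    return start + timedelta(days=days_ahead)


def plan(report_files: list[str], today: date) -> dict:
    """Hard-coded stand-in for a model's decomposition of the example request."""
    return {
        "steps": [f"Analyze {name}" for name in report_files] + ["Summarize key risks"],
        "reminder": next_weekday(today, TUESDAY).isoformat(),
    }
```

Storing the reminder as an absolute ISO date, rather than the word "Tuesday", is what keeps it meaningful when the task is reopened days later.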

The technical refinement around specialized model routing ("is model preferred") echoes the idea behind Mixture of Experts (MoE) architectures, which route each token to a subset of expert sub-networks inside a single model, though at the product level it more likely means a routing layer built atop existing models. As foundational models grow larger, the computational cost of running the entire parameter set for every simple query (like "What time does the local Starbucks open?") becomes prohibitive. A routing layer that sends that query to a smaller, highly optimized local business model saves resources while aiming for high-quality, factually accurate results in that domain. This optimization is crucial for OpenAI to maintain profitability and scalability as user volume increases globally.
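The cost argument can be made concrete with a common rule of thumb: a dense transformer spends roughly 2 FLOPs per parameter per generated token, ignoring attention overhead. The parameter counts below are hypothetical, since OpenAI does not disclose model sizes; the ratio matters more than the absolute numbers.

```python
def forward_flops(params: float, tokens: int) -> float:
    """Approximate dense-transformer inference compute: about 2 FLOPs per
    parameter per token (rule of thumb; ignores attention overhead)."""
    return 2 * params * tokens


# Hypothetical sizes, chosen only to illustrate the ratio.
LARGE_PARAMS = 1e12  # a 1-trillion-parameter dense generalist
SMALL_PARAMS = 8e9   # an 8-billion-parameter local-business specialist
TOKENS = 200         # a short answer about opening hours

large = forward_flops(LARGE_PARAMS, TOKENS)
small = forward_flops(SMALL_PARAMS, TOKENS)
print(f"large: {large:.1e} FLOPs, small: {small:.1e} FLOPs, ratio: {large / small:.0f}x")
# prints: large: 4.0e+14 FLOPs, small: 3.2e+12 FLOPs, ratio: 125x
```

Because both estimates share the same `2 * tokens` factor, the ratio reduces to the ratio of parameter counts. For an MoE model, the relevant figure would be the parameters active per token rather than the total.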

The introduction of the secure outbound tunnel for MCP servers represents a strategic masterstroke in targeting the highly lucrative, yet security-averse, enterprise market. Large organizations are constantly wrestling with the "shadow AI" problem—employees using public tools with sensitive data. By providing a vetted, firewall-friendly conduit for connecting internal systems (like CRM, ERP, or internal documentation servers) to OpenAI services, the company effectively sanctions and secures the integration pathway. This move positions ChatGPT not merely as an external tool, but as a potentially secure extension of the corporate intranet, dramatically increasing its perceived value proposition in regulated sectors like finance and healthcare.

Future Impact and Architectural Trends

The visible evolution of ChatGPT suggests several long-term trends shaping the broader AI landscape:

1. The End of the Monolithic Model: The hints of specialized routing and feature segregation (Salute for tasks, Secure Tunnel for enterprise) suggest that the future of applied AI is heterogeneous. We will increasingly see sophisticated orchestration layers managing a constellation of models, each optimized for speed, cost, or domain expertise.

2. Richer Output Interaction: The focus on editable code and math blocks indicates a move toward interactive artifacts rather than static text responses. Future AI interactions will likely feel more like collaborative editing sessions than simple command-and-response exchanges. This demands greater standardization in how structured data, especially code and scientific notation, is parsed, presented, and manipulated within the AI environment.

3. AI as an Integrated Platform Component: The security enhancements necessary for enterprise adoption mean AI is shifting from being a novelty application to being woven into the core fabric of business operations. Features like secure tunneling are prerequisites for widespread adoption where data governance and compliance cannot be compromised. This trajectory suggests that specialized AI platforms (like Microsoft’s Copilot or Google’s Gemini integrations) will succeed by embedding these secure, workflow-aware capabilities directly where the work is already happening.

In essence, the latest internal leaks suggest that OpenAI is focusing its near-term development on making ChatGPT robust, traceable, and deeply integrated into enterprise infrastructure, moving decisively beyond the realm of general-purpose conversational AI toward becoming an essential, secure operational platform. The rollout in the coming weeks will provide the first real-world validation of these ambitious architectural shifts.