Cloudflare has published a post-mortem of a 25-minute Border Gateway Protocol (BGP) route leak that affected IPv6 traffic. The incident, which occurred on January 22nd, caused measurable congestion, packet loss, and an estimated 12 Gigabits per second (Gbps) of dropped traffic. Cloudflare’s primary infrastructure remained operational, but the leaked routing information propagated beyond the company’s own customers and affected other networks on the internet.

To understand the severity and mechanism of this incident, it helps to recall the role of BGP. BGP is the inter-domain routing protocol of the internet, effectively the navigation system that determines how packets traverse the web of interconnected networks. These networks are called Autonomous Systems (ASes), each with its own routing policies and peering agreements. When an AS propagates routing announcements beyond their intended scope (a "route leak"), it misdirects traffic, forcing packets onto paths they were never intended to travel, often through networks lacking the capacity or authority to handle them.

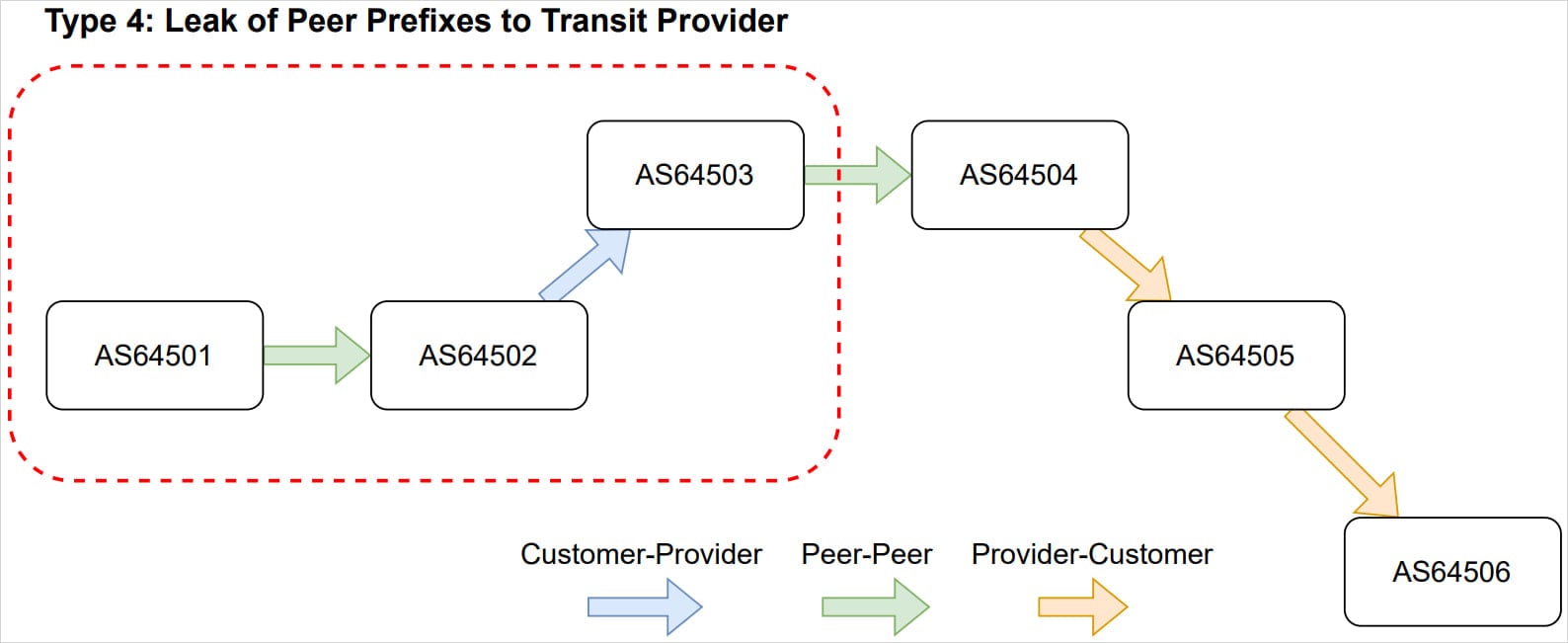

Cloudflare’s post-mortem analysis confirmed that the root cause stemmed from an inadvertent policy misconfiguration enacted on a core router. The company explicitly detailed that the leak involved the redistribution of routes learned from certain peers and providers in the Miami network region to other adjacent peers and providers. Based on the established definitions within RFC 7908, Cloudflare classified the resulting disruption as a composite event, involving both Type 3 and Type 4 route leaks.

Deconstructing the Technical Failure: Valley-Free Violations

The concept underpinning correct BGP operation is "valley-free routing." This principle dictates how routes should propagate based on established business relationships (e.g., customer-provider, peer-to-peer). Routes learned from a customer may be advertised to any neighbor, but routes learned from a peer or a provider should be advertised only to customers. As a result, a valid path climbs "uphill" through customer-to-provider links, crosses at most one peer-to-peer link, and then descends "downhill" through provider-to-customer links; a path that descends and then climbs again contains a valley. When these rules are breached, as happened in this instance, traffic is attracted to suboptimal or unintended paths, leading directly to the observed congestion and discards.
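The valley-free shape can be checked mechanically. The following is a minimal sketch, assuming each hop of a route announcement's propagation is labeled "up" (customer to provider), "peer" (peer to peer), or "down" (provider to customer); the function name and the labels are illustrative and not taken from Cloudflare's tooling.

```python
from typing import Iterable


def is_valley_free(hops: Iterable[str]) -> bool:
    """Return True if the hop sequence is uphill*, at most one peer link, downhill*."""
    past_peak = False  # becomes True after a peer link or the first downhill hop
    for hop in hops:
        if hop == "up":
            if past_peak:
                return False  # climbing again after the peak is a valley
        elif hop == "peer":
            if past_peak:
                return False  # a second peer link, or a peer link after descending
            past_peak = True
        elif hop == "down":
            past_peak = True
        else:
            raise ValueError(f"unknown hop label: {hop!r}")
    return True


# Origin -> provider -> peer of that provider -> that peer's customer.
print(is_valley_free(["up", "peer", "down"]))
# A route learned from a provider and passed to another provider.
print(is_valley_free(["down", "up"]))
```

The second call models a leak: the announcement has already travelled downhill and then climbs again.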

Under RFC 7908, a Type 3 leak occurs when an AS advertises routes learned from a transit provider to a lateral peer, and a Type 4 leak occurs when it advertises routes learned from a lateral peer to a transit provider. In both cases the routes reach a neighbor that is not meant to receive them. Neighbors often apply ingress filters based on these relationships, and a network that receives a route from a peer that it would normally accept only from a customer may reject the route. Where leaked routes are accepted, traffic is drawn onto the unintended path, where it can be congested or discarded, which is consistent with the 12 Gbps loss figure.
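The relationship-based leak types in RFC 7908 can be summarized as a lookup on where a route was learned and where it was exported. This is an illustrative sketch only: the function name and string labels are assumptions, and RFC 7908 Types 5 and 6 (re-origination and leaks of internal or more-specific prefixes) are left out because they do not depend on the neighbor relationship alone.

```python
from typing import Optional

# (relationship the route was learned from, relationship it was exported to)
_LEAK_TYPES = {
    ("provider", "provider"): "Type 1: hairpin turn with full prefix",
    ("peer", "peer"): "Type 2: lateral ISP-ISP-ISP leak",
    ("provider", "peer"): "Type 3: leak of transit-provider prefixes to peer",
    ("peer", "provider"): "Type 4: leak of peer prefixes to transit provider",
}


def classify_leak(learned_from: str, exported_to: str) -> Optional[str]:
    """Return the RFC 7908 leak type for an export, or None if it is not one of Types 1-4.

    Routes learned from a customer may go to any neighbor, and any route may go
    to a customer, so those combinations return None.
    """
    return _LEAK_TYPES.get((learned_from, exported_to))


print(classify_leak("provider", "peer"))
print(classify_leak("peer", "provider"))
print(classify_leak("customer", "provider"))
```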

Cloudflare pinpointed the genesis of the error to a specific policy modification. The intent of the change was defensive: to prevent the Miami location from erroneously advertising specific IPv6 prefixes associated with Bogotá. However, in executing this modification, engineers inadvertently removed critical, specific prefix lists. This created an overly permissive export policy. With the prefix lists gone, the remaining match condition was on route type alone (route-type internal), so the policy accepted all internal (iBGP) IPv6 routes carried on the backbone and exported them to all external BGP neighbors connected in the Miami region.
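The effect of dropping a prefix-list condition from a policy term can be modeled in a few lines. This is a simplified sketch, not Cloudflare's configuration: the Route and Term classes, the exact-match prefix comparison, and the 2001:db8::/32 documentation prefixes are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Route:
    prefix: str
    route_type: str  # "internal" (learned via iBGP) or "external"


@dataclass(frozen=True)
class Term:
    route_type: str
    prefix_list: Optional[FrozenSet[str]] = None  # None means no prefix condition

    def matches(self, route: Route) -> bool:
        # Every condition that is present must match; a missing one matches anything.
        if route.route_type != self.route_type:
            return False
        return self.prefix_list is None or route.prefix in self.prefix_list


def exported(term: Term, routes: List[Route]) -> List[str]:
    """Prefixes that an accept term would export."""
    return [r.prefix for r in routes if term.matches(r)]


routes = [
    Route("2001:db8:a::/48", "internal"),
    Route("2001:db8:b::/48", "internal"),
    Route("2001:db8:c::/48", "external"),
]

before = Term(route_type="internal", prefix_list=frozenset({"2001:db8:a::/48"}))
after = Term(route_type="internal")  # prefix list removed

print(exported(before, routes))
print(exported(after, routes))
```

With the prefix list in place only the listed prefix is exported; without it, every internal route matches.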

The consequence was immediate and widespread for IPv6 connectivity in that geographical area. All IPv6 prefixes carried over iBGP on Cloudflare’s backbone were mistakenly advertised to external BGP neighbors in Miami, violating those neighbors’ routing expectations and attracting traffic that the location was never meant to carry.

Industry Context and Historical Precedent

This incident is not an isolated anomaly in the complex world of internet routing. BGP route leaks are a persistent threat, often occurring due to human error during configuration changes, software bugs, or, more maliciously, during BGP hijacking attempts. While this event was accidental, the resulting network behavior shares characteristics with more malicious exploits.

Historically, BGP instability has led to massive service disruptions globally. The reliance on BGP stems from its decentralized nature—no single entity controls global routing. This decentralization is its strength but also its vulnerability. When a large, globally significant network like Cloudflare, which handles a substantial portion of internet traffic, introduces an error, the ripple effect is magnified across continents.

Cloudflare noted the strong similarity between this January incident and a significant outage experienced in July 2020. That previous event, while also rooted in BGP configuration issues, underscores the recurring challenge in managing the intricate, often legacy, configuration languages used across global routing infrastructure. The fact that a configuration error causing a route leak recurs, even with high-profile mitigation strategies in place, highlights the inherent difficulty in maintaining flawless control over vast, distributed networks.

Security Implications Beyond Availability

While the immediate impact was degraded availability characterized by congestion and packet loss, the security implications of route leaks should not be overlooked. Route leaks fundamentally compromise the integrity of the routing table. If an attacker can induce or exploit such a leak, they can direct traffic intended for a legitimate destination through an AS under their control. This technique, known as BGP hijacking, allows for passive interception, inspection, and potential modification of data in transit.

In the case of a Type 3 or Type 4 leak, traffic might not be completely hijacked but is certainly subject to increased latency and potential inspection as it bounces across unintended intermediate networks. For sensitive data flows, even temporary exposure to an unvetted network path constitutes a severe breach of trust and policy.

Remediation and Forward-Looking Safeguards

The response time by Cloudflare’s engineering team was relatively swift, demonstrating high operational readiness for routing emergencies. Detection occurred quickly, followed by manual intervention to revert the faulty configuration and temporarily pause automated configuration deployment systems. This deliberate pause prevented the error from propagating further or being compounded by automated fixes, successfully halting the network impact within 25 minutes. Subsequently, the erroneous code change was fully reverted, and automation was safely reinstated.

In the wake of the incident, Cloudflare has outlined a proactive roadmap designed to harden its BGP configuration processes against recurrence. This plan focuses on layered defenses:

- Stricter Community-Based Export Safeguards: Implementing more granular and context-aware tags (BGP communities) on route advertisements to ensure that routes are only propagated under very specific, pre-approved conditions, minimizing the "catch-all" effect seen in the recent failure.

- CI/CD Checks for Policy Errors: Integrating rigorous Continuous Integration/Continuous Deployment (CI/CD) pipelines specifically for network configuration changes. This involves automated validation, simulation, and peer review before any policy is pushed to live routers, aiming to catch logical errors like the overly permissive export rule pre-deployment.

- Improved Early Detection: Enhancing internal monitoring systems to flag deviations from expected routing behavior—such as unexpected route advertisements to specific peers—faster than relying solely on external reports or generalized performance metrics.

- RFC 9234 Validation: RFC 9234 defines BGP roles, negotiated in the OPEN message, and the Only to Customer (OTC) path attribute, which together allow routers to prevent and detect route leaks. Incorporating validation procedures based on this standard helps ensure that internal routing practices align with industry-developed best practices for leak prevention.

- Promoting RPKI ASPA Adoption: Resource Public Key Infrastructure (RPKI) and its Autonomous System Provider Authorization (ASPA) objects are critical next-generation tools. An ASPA record lets an AS holder cryptographically declare which ASes are its authorized upstream providers; this complements Route Origin Authorizations (ROAs), which state which AS may originate a given prefix. Widespread adoption of ASPA by Cloudflare and its peers would allow networks to verify AS paths against these declared relationships and reject routes that violate them, greatly reducing the impact of accidental policy errors like the one witnessed.
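The RFC 9234 item in the list above can be illustrated with a minimal sketch of the Only to Customer (OTC) rules. It is a simplification under stated assumptions: route-server roles are omitted, the function names are hypothetical, and the AS numbers come from the range reserved for documentation.

```python
from typing import Optional, Tuple


def ingress(otc: Optional[int], neighbor_role: str, neighbor_asn: int) -> Tuple[bool, Optional[int]]:
    """Apply the OTC ingress rules; return (eligible, OTC value after processing).

    neighbor_role is the role of the remote AS: "provider", "peer", or "customer".
    """
    if otc is not None:
        if neighbor_role == "customer":
            return False, otc  # a customer must never send a route carrying OTC
        if neighbor_role == "peer" and otc != neighbor_asn:
            return False, otc  # OTC was set by some AS other than this peer
        return True, otc
    if neighbor_role in ("provider", "peer"):
        return True, neighbor_asn  # mark the route as "only to customer"
    return True, None


def may_egress(otc: Optional[int], neighbor_role: str) -> bool:
    """A route that carries OTC must not be sent to providers or peers."""
    return not (otc is not None and neighbor_role in ("provider", "peer"))


# A route arrives from provider AS 64500 without OTC, so it gets marked on ingress.
eligible, otc = ingress(None, "provider", 64500)
print(eligible, otc)
print(may_egress(otc, "peer"))      # sending it to a peer would be a leak
print(may_egress(otc, "customer"))  # sending it to a customer is allowed
```

The design point is that the marking travels with the route, so an overly permissive export policy on one router can still be stopped by the egress check or by the neighbor's ingress check.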

Broader Industry Implications and the Future of Routing Security

This incident serves as a potent reminder that despite decades of standardization, the global internet remains fundamentally reliant on human-managed configuration files interacting with complex protocols. The impact on IPv6 highlights a persistent operational gap: as newer protocols (like IPv6) are deployed, their management often inherits the complexities and potential pitfalls of legacy systems (like BGP configuration).

For Content Delivery Networks (CDNs), cloud providers, and major Internet Exchange Points (IXPs), the lesson is clear: configuration management must evolve beyond simple change control. The industry is gradually moving toward source-of-truth automation where network intent is codified in high-level languages, which are then compiled into device-specific configurations, allowing for automated verification against policy violations before deployment.
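A pre-deployment check of this kind could be as simple as a lint pass over an intent-level representation of the export policy. The sketch below assumes a hypothetical format in which each term is a dictionary with name, action, and optional prefix_list and community keys; it does not reflect any real vendor syntax or Cloudflare's pipeline.

```python
from typing import Dict, List


def lint_export_policy(terms: List[Dict]) -> List[str]:
    """Flag accept terms that have no prefix-list or community condition."""
    findings = []
    for term in terms:
        if term.get("action") != "accept":
            continue
        # An empty or missing value counts as "no narrowing condition".
        narrowing = [key for key in ("prefix_list", "community") if term.get(key)]
        if not narrowing:
            findings.append(
                f"term {term['name']!r}: accepts routes with no prefix-list "
                "or community condition"
            )
    return findings


policy = [
    {"name": "site-prefixes", "action": "accept", "route_type": "internal",
     "prefix_list": ["2001:db8:a::/48"]},
    {"name": "catch-all-internal", "action": "accept", "route_type": "internal"},
    {"name": "default", "action": "reject"},
]

for finding in lint_export_policy(policy):
    print(finding)
```

A CI job could fail the change whenever the returned list is non-empty, forcing a reviewer to justify any term that matches on route type alone.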

The move toward RPKI ASPA is perhaps the most significant long-term mitigation strategy. While BGP itself is a protocol built on trust, ASPA adds cryptographically verifiable statements about customer-provider relationships. If Cloudflare’s Miami peers and providers had been verifying AS paths against ASPA records, and the networks whose routes were leaked had published such records, the leaked routes could have been identified as invalid and discarded at the ingress point, limiting how far the leak cascaded across the wider internet.
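The idea behind ASPA-based path verification can be sketched for the simplest case, a route received from a customer or a lateral peer, where every hop from the origin toward the receiver should be a customer-to-provider hop. This is a deliberately reduced model and not the full verification procedure from the IETF specification work: the function name, the origin-first path order, and the documentation AS numbers are assumptions.

```python
from typing import Dict, List, Set


def verify_upstream(path_origin_first: List[int], aspa: Dict[int, Set[int]]) -> str:
    """Return "valid", "invalid", or "unknown" for a path listed origin first.

    aspa maps a customer AS to the set of provider ASes it has authorized.
    An AS with no entry has published no ASPA record.
    """
    unknown = False
    for customer, provider in zip(path_origin_first, path_origin_first[1:]):
        if customer == provider:
            continue  # AS path prepending repeats the same AS; skip it
        providers = aspa.get(customer)
        if providers is None:
            unknown = True  # no attestation for this hop
        elif provider not in providers:
            return "invalid"  # the next AS is not an authorized provider
    return "unknown" if unknown else "valid"


aspa_records = {
    64496: {64497},  # origin AS 64496 buys transit from AS 64497
    64497: {64498},  # AS 64497 buys transit from AS 64498
}

# Legitimate path: each AS hands the route to its own provider.
print(verify_upstream([64496, 64497, 64498], aspa_records))
# Leak: AS 64499 learned the route from its peer AS 64497 and re-exported it.
print(verify_upstream([64496, 64497, 64499], aspa_records))
```

In the leak case the receiver can tell that AS 64499 is not one of AS 64497's declared providers, so the path is rejected even though the origin is legitimate.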

Ultimately, the 25-minute disruption caused by a simple, accidental policy error underscores the fragility inherent in internet infrastructure. While Cloudflare’s rapid containment mitigated catastrophe, the event reaffirms the industry-wide imperative to accelerate the adoption of cryptographically verifiable routing security mechanisms to build a more resilient and trustworthy global network fabric. The ongoing commitment to enhanced tooling, stricter automation gates, and broader RPKI/ASPA deployment will be the true measure of whether these lessons are learned and permanently integrated into operational security postures.