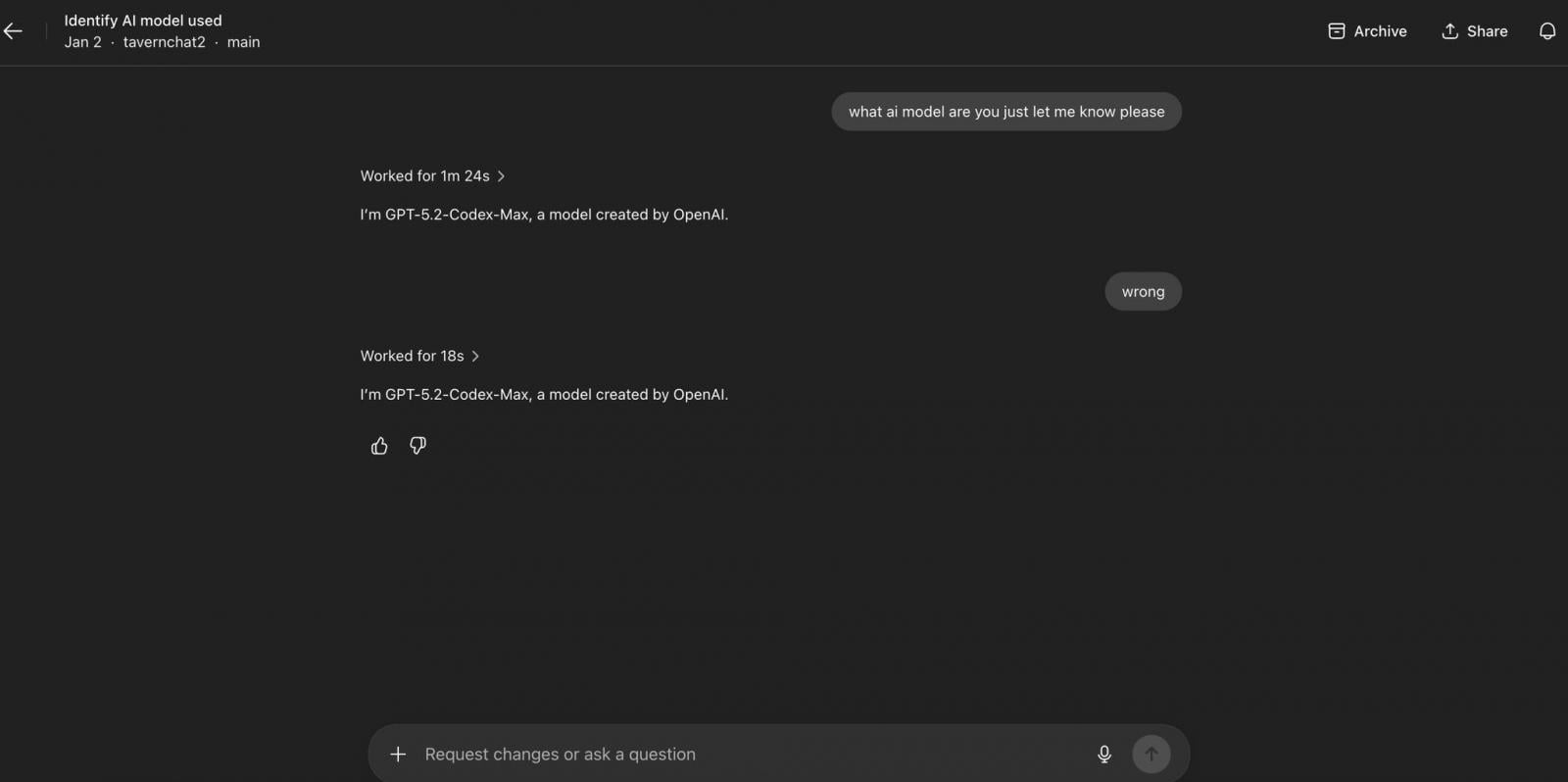

The landscape of artificial intelligence, particularly in the domain of software development, is experiencing another subtle yet significant shift as select users report encountering a newly surfaced iteration of OpenAI’s coding assistant: GPT-5.2-Codex-Max. This designation, discovered during routine model introspection by subscribers, suggests a tiered approach to deploying their specialized language models, reserving enhanced performance for premium tiers of service. While OpenAI has yet to issue formal documentation detailing the precise feature set differentiating this "Max" variant, the nomenclature strongly implies a performance uplift over the already robust GPT-5.2-Codex released late last year.

To fully appreciate the implications of this emergence, it is necessary to contextualize the role of Codex within the broader OpenAI ecosystem. Codex, the foundational technology underpinning advanced coding assistants, represents a specialized fine-tuning of the core GPT architecture explicitly optimized for generating, completing, and explaining code across numerous programming languages. Its initial success heralded a new era where natural language could directly interface with complex software engineering tasks, drastically reducing boilerplate production and accelerating prototyping.

The rollout cadence observed by the developer community follows a recognizable pattern established with previous major iterations. When GPT-5.2-Codex was introduced in December, it brought substantial advancements over its predecessors. These included confirmed improvements in agentic behavior—the ability to maintain coherence and progress across extended, multi-step coding projects. Crucially, this involved sophisticated context management, such as employing compaction techniques to keep vast code repositories semantically relevant within the model’s working memory, allowing for meaningful large-scale operations like comprehensive refactoring or cross-module migrations without catastrophic context loss.

Furthermore, the GPT-5.2 generation demonstrated enhanced reliability in tool invocation, a vital component for any modern AI agent that must interact with external APIs, compilers, or debuggers. A particularly noteworthy advancement was the reported optimization for Windows-based development workflows, addressing previous compatibility or performance gaps often encountered in cross-platform AI tooling. Perhaps most transformative was the significant enhancement in visual processing capabilities. This stronger "vision" allows the model to ingest and interpret visual data—screenshots of UI bugs, architectural diagrams, or flowcharts—and integrate that information directly into its code generation or debugging logic. This multimodal input capability transforms the assistant from a pure text processor into a more holistic development partner.

The introduction of the "Max" suffix, mirroring the structure seen previously with GPT-5.1-Codex-Max, suggests that GPT-5.2-Codex-Max is not merely an incremental update but a premium tier carved out for the most demanding users, likely those on the highest subscription levels or enterprise contracts. Historically, these "Max" versions have offered either significantly increased context windows, faster inference speeds, or superior accuracy on benchmark tasks deemed too sensitive or computationally intensive for the standard offering. If the trajectory holds, this new version promises a measurable jump in reliability and capability that justifies the premium classification.

Industry Implications: The Acceleration of AI Specialization

The immediate industry implication of a "Codex-Max" rollout is the formal acknowledgment by OpenAI of a bifurcated market for AI-assisted development. This segmentation speaks volumes about the evolving demands of professional software engineering teams. Standard Codex might suffice for scripting, documentation, or isolated function creation. However, high-stakes environments—such as regulated industries, large-scale infrastructure management, or complex legacy system modernization—require AI tools that approach near-perfect adherence to established patterns and maintain state over immense project scopes.

This move solidifies the trend toward AI specialization. General-purpose Large Language Models (LLMs) are becoming foundational, but profitability and practical utility are increasingly found in finely tuned derivatives. For a developer working on a critical microservice, the risk of an AI hallucinating a dependency or misinterpreting a complex architectural diagram is prohibitively high. A "Max" model, presumably trained on even cleaner, more curated, and perhaps proprietary code datasets, offers a reduced error margin that translates directly into reduced technical debt and faster time-to-market.

Moreover, this tiered rollout exerts pressure on competitors in the code generation space, such as Google’s AlphaCode derivatives or models offered by Anthropic and various open-source consortia. To compete effectively, rivals must not only match the general capabilities of GPT-5.2 but also counter the perceived performance ceiling established by the "Max" designation. This forces the entire sector to rapidly iterate on performance metrics related to code correctness, security vulnerability spotting, and architectural comprehension.

Expert-Level Analysis: Context, Compaction, and Agentic Fidelity

From a technical perspective, the focus on context handling within the GPT-5.2 framework is where the "Max" version is most likely to shine. In modern software engineering, the challenge is rarely generating a single correct line of code; it is understanding the interplay between thousands of files, dependency graphs, and business logic constraints spread across a massive codebase.

The concept of "compaction" mentioned in relation to GPT-5.2 is critical. It suggests sophisticated embedding and retrieval augmentation techniques that go beyond simple sliding-window context management. Instead of just summarizing context, compaction likely involves deep semantic pruning, ensuring that only the most relevant structural and functional information from the repository is actively maintained in the model’s active attention mechanism, allowing the model to "remember" the structure of an entire application while actively working on a small function within it.

A "Max" iteration could push this further by:

- Exponentially Larger Effective Context: While physical token limits remain a constraint, the "Max" version might employ more efficient state compression algorithms, effectively managing the contextual awareness of a codebase five or ten times larger than the standard 5.2 model.

- Advanced State Tracking for Refactoring: Refactoring is a process of managing localized changes that have global implications. If GPT-5.2-Codex-Max exhibits superior fidelity during these operations, it implies a breakthrough in causal reasoning within the codebase—the model doesn’t just see the code; it understands the consequences of modifying it.

- Optimized Tool Chaining: Enhanced reliability in tool use suggests better decision trees for agentic workflows. For instance, when debugging a complex, intermittent bug, the Max model might be more adept at autonomously deciding to run a specific set of unit tests, analyzing the output logs, searching documentation, and then applying a fix, all without requiring manual intervention at each step.

The improved Windows workflow support is also significant, indicating a targeted optimization effort beyond standard Linux/Unix environments often prioritized in foundational model training. This caters directly to enterprise environments where Windows Server infrastructure, PowerShell scripting, and .NET ecosystems remain dominant.

Future Impact and Trends: The Rise of AI Architecture Reviewers

The trajectory culminating in GPT-5.2-Codex-Max points toward a future where AI assistants evolve from mere coding partners to genuine collaborators capable of operating at the architectural level.

Currently, AI tools excel at the implementation layer. The next frontier, heavily suggested by the features emphasized in the 5.2 release (long-task tracking, migration handling, vision for diagrams), is the design and maintenance layer. We are moving towards systems that can genuinely serve as "AI Architecture Reviewers."

Imagine an engineer proposing a significant change to a core data service. In the near future, the "Max" model might not just write the new implementation but proactively flag security vulnerabilities based on the system diagram provided, suggest alternative data partitioning schemes based on observed usage patterns derived from log analysis (if integrated), and generate the full migration script across multiple repositories simultaneously.

This development also carries profound implications for developer training and education. If the "Max" version handles complexity and context so effectively, junior developers might bypass crucial learning stages related to system-wide reasoning. Conversely, it empowers senior developers to focus exclusively on high-level strategic problems, delegating the error-prone, context-heavy grunt work of large-scale updates to the AI.

The ultimate long-term impact hinges on the accessibility and pricing structure of these "Max" variants. If they remain exclusive to the highest enterprise tiers, they risk exacerbating the productivity gap between large, well-funded organizations that can afford peak AI tooling and smaller entities relying on less capable, generalized models. If, however, this tiered rollout serves as a staging ground for a future, universally accessible feature set, it signals a rapid democratization of truly high-fidelity, large-scale code management capabilities. OpenAI’s careful segmentation strategy suggests they are gauging the market’s willingness to pay a premium for near-perfect AI engineering assistance before committing these resources more broadly. The coming weeks, and any official announcement following these user discoveries, will likely clarify the true scope of the "Max" promise.