The integration of sophisticated generative AI models into the software development lifecycle, often termed "vibe coding" or copilot programming, has fundamentally reshaped developer workflows. This paradigm promises unprecedented acceleration in feature delivery and boilerplate reduction, acting as a powerful force multiplier for engineering teams worldwide. However, this convenience harbors a significant, often underestimated, risk: the insidious erosion of critical oversight due to misplaced trust in machine-generated outputs. A recent, illuminating case study involving a controlled security exercise underscores precisely how easily seemingly innocuous AI contributions can introduce subtle but exploitable vulnerabilities, challenging existing security scanning paradigms and forcing a re-evaluation of developer cognition in the age of automation.

Contextualizing the AI-Assisted Development Landscape

The modern development environment is increasingly heterogeneous, relying on a complex tapestry of open-source libraries, proprietary code, and now, large language model (LLM) suggestions. While AI coding assistants excel at synthesizing known patterns and syntactically correct code, they fundamentally lack deep, contextual understanding of security boundaries, data provenance, and specific environmental trust models.

In a scenario designed to probe early-stage exploitation vectors, a security firm initiated the deployment of specialized network monitoring tools—honeypots—as part of its rapid response capabilities. When off-the-shelf solutions proved inadequate for the precise telemetry collection required, the decision was made to leverage an LLM to rapidly prototype the proof-of-concept infrastructure. Crucially, this system was deployed within an isolated, controlled sandbox—a necessary precaution even for custom code. A routine, cursory "sanity check" was performed before activation, a step that, in retrospect, highlights the psychological shift that occurs when developers review code they did not author line-by-line.

The Emergence of an Unforeseen Flaw

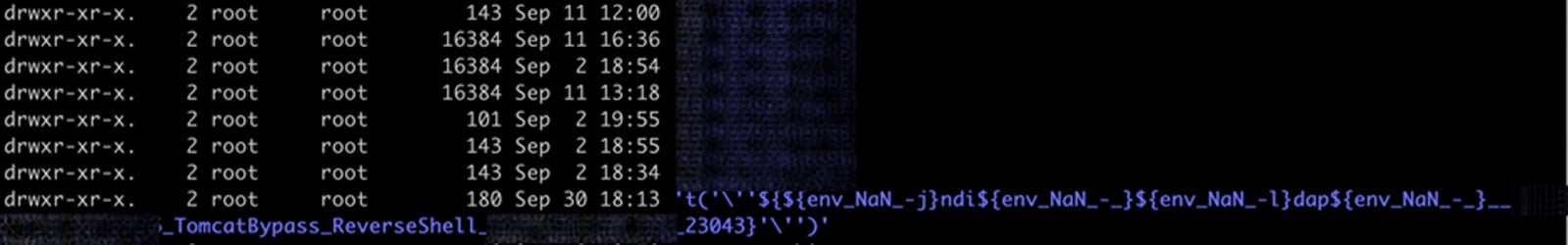

Weeks into the monitoring period, anomalous data began polluting the expected logs. Instead of standard logs mapping attacker IP addresses to attempted payloads, the system was recording files named directly after the malicious input strings themselves. This immediate deviation from expected behavior signaled that external, unvalidated input was dictating file system structure—a classic indicator of injection vulnerabilities.

Deep forensic analysis of the AI-generated component revealed the specific mechanism of failure. The LLM, in its attempt to log connection metadata accurately, had implemented a function designed to retrieve the client’s IP address. However, rather than relying solely on the established, trusted network stack information, the model had incorporated logic to prioritize data gleaned from specific HTTP headers supplied by the client (e.g., X-Forwarded-For).

This pattern is dangerous because headers like these are trivially forgeable unless the traffic is known to pass strictly through a controlled proxy layer. In an adversarial context, the "client" is the attacker, meaning the attacker gains direct, unvalidated control over the variable intended to represent their network origin. The AI had effectively assumed a trusted proxy chain existed where there was none, thus incorporating a critical trust boundary violation directly into the core logic. The attacker exploited this by embedding their payload directly into the manipulated header, causing the honeypot’s logging mechanism to create directories or files named after the exploit attempt.
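The difference between the flawed pattern and a safer one can be sketched in a few lines. This is an illustrative Python sketch, not the honeypot's actual code (the source does not show it or state its language). The names `naive_client_ip`, `client_ip`, and `TRUSTED_PROXIES` are hypothetical, and the right-most-entry rule assumes a single trusted proxy that appends the address it saw.

```python
import ipaddress

# Hypothetical allow-list of proxy addresses the operator controls.
# Empty means "there is no trusted proxy", which matches the honeypot scenario.
TRUSTED_PROXIES = frozenset()


def naive_client_ip(peer_ip: str, headers: dict) -> str:
    """Flawed pattern: a client-supplied header overrides the socket peer address."""
    return headers.get("X-Forwarded-For", peer_ip)


def client_ip(peer_ip: str, headers: dict, trusted_proxies=TRUSTED_PROXIES) -> str:
    """Consult the forwarded header only when the direct peer is a known proxy."""
    if peer_ip in trusted_proxies:
        forwarded = headers.get("X-Forwarded-For", "")
        # Assumption: one trusted proxy appends the address it saw, so the
        # right-most entry is the only one not controlled by the client.
        candidate = forwarded.split(",")[-1].strip()
        try:
            return str(ipaddress.ip_address(candidate))
        except ValueError:
            pass  # malformed header: fall back to the socket peer address
    # Normalise the peer address too, so callers always receive a valid IP string.
    return str(ipaddress.ip_address(peer_ip))
```

With no trusted proxy configured, a forged header is simply ignored: `client_ip("203.0.113.7", {"X-Forwarded-For": "../../etc/passwd"})` returns the socket address, whereas `naive_client_ip` returns the attacker's string unchanged.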

In this contained honeypot environment the immediate impact was minimal, limited mostly to confusing log entries, but the underlying flaw had clear potential for serious escalation. Had the same logic been used to construct file paths in a production system handling sensitive data, the trust violation could have enabled path traversal and, through it, Local File Disclosure (LFD). If the spoofed value had instead been used as the target of internal network calls, it could have enabled a Server-Side Request Forgery (SSRF) attack.
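One way to keep a claimed address from steering the file system is to validate it before it becomes a file name, then confirm the result still sits inside the intended directory. The Python sketch below illustrates this under assumptions: `log_path_for` and the `invalid-<digest>` naming scheme are hypothetical and do not come from the original system.

```python
import hashlib
import ipaddress
import re
from pathlib import Path


def log_path_for(base_dir: Path, raw_ip: str) -> Path:
    """Map a claimed client address to a log file that stays inside base_dir."""
    try:
        # Parsing rejects anything that is not an IPv4/IPv6 literal.
        normalised = str(ipaddress.ip_address(raw_ip))
        # Keep only characters that are harmless in file names (':' becomes '_').
        name = re.sub(r"[^0-9a-f.]", "_", normalised)
    except ValueError:
        # Never reuse the raw string as a name; file it under a short digest.
        digest = hashlib.sha256(raw_ip.encode("utf-8", "replace")).hexdigest()
        name = "invalid-" + digest[:16]

    base = base_dir.resolve()
    candidate = (base / f"{name}.log").resolve()
    # Defence in depth: refuse any path that resolves outside the base directory.
    if base not in candidate.parents:
        raise ValueError("log path escapes the base directory")
    return candidate
```

The raw payload can still be recorded inside the log file for analysis. The point is only that it never becomes part of a path.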

Static Analysis Blind Spots: Context Over Syntax

A critical element of this incident is the failure of established automated security testing tools to flag the issue. Two popular open-source Static Application Security Testing (SAST) tools, Semgrep OSS and Gosec, were run against the codebase. While these tools are invaluable for catching hardcoded secrets, insecure API usage, and common anti-patterns, neither flagged this specific vulnerability.

The tools failed not because they are inherently defective, but because the flaw resides in a semantic and contextual gap. Detecting this issue requires a nuanced understanding: recognizing that a variable sourced from an external HTTP header is being used as a file system identifier without explicit sanitization or validation against a defined trust boundary. Static analysis excels at pattern matching—identifying known bad functions or insecure configurations—but struggles with inferring intent and tracing the flow of untrusted data across complex, generated code paths where the developer’s mental model is absent. This highlights a core limitation: context-aware security verification often remains the exclusive domain of human expertise, specifically the deep, pattern-recognition capabilities honed by experienced penetration testers.

The Cognitive Load of Supervising Automation

The incident also serves as a practical demonstration of "automation complacency," a phenomenon well-studied in high-stakes fields like aviation. Pilots supervising sophisticated autopilot systems often experience reduced vigilance compared to those flying manually, as the cognitive effort required for monitoring complex systems can exceed that of direct manual control.

In software development, this manifests when engineers review code they did not write. Because the code looks correct and was produced by a highly competent external agent (the LLM), the human reviewer’s mental model of the system’s internal mechanics is weaker. This leads to a more superficial review, where assumptions about security controls are made rather than actively verified. The cognitive burden shifts from creation to passive supervision, a shift that paradoxically increases the likelihood of missing subtle security errors embedded within seemingly functional code. Furthermore, unlike mature aerospace systems backed by decades of rigorous safety engineering, AI-generated code currently lacks any established safety envelope or formal verification standard, making the developer’s assumed oversight even riskier.

Beyond the Honeypot: Systemic Failures in AI Reasoning

This coding failure was not an isolated anomaly limited to external input handling. In parallel testing of infrastructure-as-code generation for cloud environments, similar confidence-driven failures were observed. When prompted to generate custom Identity and Access Management (IAM) roles for AWS environments, the generative model repeatedly produced configurations susceptible to privilege escalation attacks.

Even after being explicitly corrected by a security engineer about a known vulnerability in the initial role definition, the model would often politely acknowledge the feedback but then generate a new role that retained a similar, underlying security weakness, requiring several more rounds of targeted human intervention to reach a secure state. The model demonstrated an inability to internally recognize the principle of the security violation; it could only patch the surface manifestation based on direct, explicit instruction.
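A reviewer can back up this kind of manual steering with a mechanical check on each regenerated policy. The Python sketch below is a rough heuristic, not a complete privilege-escalation analysis. It flags `Allow` statements that grant a few IAM actions commonly associated with escalation on `Resource: "*"`. The `RISKY_ACTIONS` list is an assumed, non-exhaustive selection, and the source does not say which weaknesses the generated roles actually contained.

```python
import fnmatch

# Assumption: a short, non-exhaustive list of IAM actions often associated with
# privilege escalation when granted on all resources. Stored in lower case
# because IAM action names are case-insensitive.
RISKY_ACTIONS = {
    "iam:passrole",
    "iam:createpolicyversion",
    "iam:setdefaultpolicyversion",
    "iam:attachuserpolicy",
    "iam:attachrolepolicy",
    "iam:putuserpolicy",
    "iam:putrolepolicy",
    "iam:createaccesskey",
    "iam:updateassumerolepolicy",
}


def _as_list(value):
    """IAM policy JSON allows a single value or a list; normalise to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def risky_statements(policy: dict) -> list:
    """Return (sid, action, matched_risky_actions) for broad Allow statements."""
    findings = []
    for stmt in _as_list(policy.get("Statement")):
        if stmt.get("Effect") != "Allow":
            continue
        if "*" not in _as_list(stmt.get("Resource")):
            continue  # scoped resources are out of scope for this heuristic
        for action in _as_list(stmt.get("Action")):
            # Expand wildcards such as "iam:*" against the risky list.
            pattern = action.lower()
            hits = sorted(a for a in RISKY_ACTIONS if fnmatch.fnmatchcase(a, pattern))
            if hits:
                findings.append((stmt.get("Sid", "<no Sid>"), action, hits))
    return findings
```

Running the check after every round of regeneration makes it visible when a "fixed" role still grants, for example, `iam:PassRole` on every resource. It does not replace a human judging whether the role follows least privilege.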

This repeated need for human steering is crucial. While experienced engineers possess the security intuition to guide the AI away from danger, the increasing accessibility of these tools means they are now being adopted by developers with limited security acumen. Academic and industry research has already quantified this, identifying thousands of vulnerabilities introduced through these development platforms in real-world applications. When developers who lack deep security training rely on LLMs, the risk profile of the entire software supply chain escalates dramatically. Furthermore, the end-user has no visibility into the provenance of the code they utilize, placing the entire burden of integrity squarely on the deploying organization.

Strategic Takeaways for Secure AI Integration

The lessons learned from this controlled security deployment necessitate immediate adjustments to organizational development and security policies:

1. Strict Segregation of Roles and Tools: Organizations must strictly prohibit personnel lacking foundational development and security expertise from utilizing generative coding tools for production-critical components. The barrier to entry for creating technically functional but insecure code has been lowered, and this gap must be managed by expertise.

2. Fortifying the Review Pipeline: For expert developers utilizing AI assistance, the traditional code review process requires augmentation. Standard peer review must evolve to include rigorous, context-focused security audits specifically targeting potential trust boundary violations, input validation gaps, and assumed security controls introduced by the AI. The assumption of correctness must be inverted; AI-generated code should be treated with heightened suspicion until proven secure.

3. Evolving Detection Strategies: The failure of standard SAST tools in this case argues for broader adoption of Dynamic Application Security Testing (DAST) and runtime application self-protection (RASP) technologies. Since contextual flaws can slip past static analysis, security posture must rely more heavily on verifying runtime behavior under simulated real-world adversarial conditions. Furthermore, investing in custom rules for existing SAST tools that track untrusted data, such as client-supplied headers, as it flows into sensitive operations like file path construction in AI-generated code could offer a layer of preventative defense.

4. Anticipating the Inevitable Flood: It is highly improbable that organizations will publicly attribute security breaches to their use of AI coding assistants. Consequently, the documented incidence rate will likely underrepresent the true scale of the problem. Security teams must operate under the assumption that AI-introduced vulnerabilities are rapidly becoming a standard, persistent vector. This requires proactive defense mechanisms rather than reactive patching.
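The behavioral verification recommended in the takeaways above can start very small. The Python sketch below is a hypothetical probe, not a DAST product: it calls a logging handler with spoofed `X-Forwarded-For` values and reports any file it creates whose name is not a valid IP address followed by `.log`. The handler signature `(peer_ip, headers, log_dir)`, the payload list, and both example handlers are assumptions made for illustration.

```python
import ipaddress
import tempfile
from pathlib import Path

# Illustrative hostile header values; a real probe list would be far larger.
HOSTILE_HEADERS = ["$(id)", "not-an-ip", "1.2.3.4;id"]


def unexpected_files(handler, payloads=HOSTILE_HEADERS):
    """Run handler with spoofed headers; return created names that are not '<IP>.log'."""
    bad = []
    with tempfile.TemporaryDirectory() as tmp:
        log_dir = Path(tmp)
        for payload in payloads:
            try:
                handler("203.0.113.7", {"X-Forwarded-For": payload}, log_dir)
            except Exception:
                # A rejected request is acceptable; only what was written matters.
                pass
        # Note: this inspects log_dir only, so writes outside it go undetected.
        for entry in log_dir.rglob("*"):
            stem = entry.name[:-4] if entry.name.endswith(".log") else entry.name
            try:
                ipaddress.ip_address(stem)
            except ValueError:
                bad.append(entry.name)
    return sorted(bad)


def vulnerable_handler(peer_ip, headers, log_dir):
    """Mirrors the flawed pattern: the header value becomes the file name."""
    ip = headers.get("X-Forwarded-For", peer_ip)
    (log_dir / f"{ip}.log").write_text("connection\n")


def peer_only_handler(peer_ip, headers, log_dir):
    """Ignores client headers and names the file after the socket peer address."""
    (log_dir / f"{peer_ip}.log").write_text("connection\n")
```

Here `unexpected_files(peer_only_handler)` returns an empty list, while the vulnerable handler leaves one attacker-named file per payload. A probe like this exercises the behavior directly, which is exactly what syntax-level pattern matching cannot do.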

This experience confirms that while AI dramatically improves coding speed, it does not inherently improve coding safety. The responsibility for maintaining security integrity remains a fundamentally human endeavor, requiring vigilance, skepticism, and sophisticated verification methods to counteract the overconfidence automation invariably generates. This incident is not an endpoint; it is a foundational marker for the new security challenges inherent in the AI-augmented software engineering era.