The shift of AI inference workloads from centralized cloud infrastructure to the computing edge is reshaping the semiconductor landscape and creating significant financial upside for the technologies that enable it. At the forefront of this decentralization is Quadric, a chip intellectual property (IP) startup that has turned this market shift into rapid revenue growth and a sharply higher valuation, a sign of investor confidence in the future of distributed AI compute.

Founded by veterans who honed their skills in the high-stakes, efficiency-driven world of early Bitcoin mining at 21E6, Quadric initially focused on complex, real-time edge processing for the automotive sector. The surge of transformer-based models beginning in 2023, however, dramatically broadened the scope of inference applications and extended the need for local AI processing to a far wider set of consumer and industrial devices. This market inflection has driven rapid business expansion for the company over the past eighteen months.

The financial metrics underscore this trajectory. Quadric reported licensing revenue of $15 million to $20 million in 2025, up from approximately $4 million in 2024. The firm, headquartered in San Francisco with a key development base in Pune, India, projects up to $35 million in licensing revenue for the current fiscal year as it moves toward a long-term, royalty-driven business model that depends on mass-market adoption. This performance has also lifted the company’s post-money valuation to between $270 million and $300 million, roughly three times the $100 million valuation set in its 2022 Series B round.

This momentum recently culminated in a $30 million Series C round led by ACCELERATE Fund, which is managed by BEENEXT Capital Management, bringing Quadric’s total capital raised to $72 million. The round arrives as global technology companies and investors increasingly recognize that the economic and operational constraints of centralized cloud AI call for a stronger push toward on-device and local-server processing.
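The growth multiples implied by the reported figures can be checked with simple division. The sketch below uses only the numbers quoted in this article (in millions of U.S. dollars) and assumes that the $72 million total includes the $30 million Series C; the variable and function names are illustrative.

```python
# Figures quoted in the article, in millions of U.S. dollars.
REVENUE_2024 = 4.0                     # "approximately $4 million"
REVENUE_2025 = (15.0, 20.0)            # reported 2025 licensing revenue range
PROJECTED_REVENUE = 35.0               # "up to $35 million" for the current fiscal year
SERIES_B_VALUATION = 100.0             # 2022 Series B valuation
POST_MONEY_VALUATION = (270.0, 300.0)  # current post-money valuation range
SERIES_C = 30.0
TOTAL_RAISED = 72.0                    # assumption: includes the Series C


def multiple(new: float, old: float) -> float:
    """Growth multiple of `new` relative to `old`."""
    return new / old


# 2025 revenue vs. 2024, at the low and high ends of the reported range.
revenue_growth = tuple(multiple(r, REVENUE_2024) for r in REVENUE_2025)

# Post-money valuation vs. the Series B valuation.
valuation_growth = tuple(multiple(v, SERIES_B_VALUATION) for v in POST_MONEY_VALUATION)

# Projection vs. 2025: the smallest multiple uses the high end of the 2025 range,
# the largest uses the low end.
projection_growth = (multiple(PROJECTED_REVENUE, REVENUE_2025[1]),
                     multiple(PROJECTED_REVENUE, REVENUE_2025[0]))

# Capital raised before the Series C, implied by the running total.
raised_before_series_c = TOTAL_RAISED - SERIES_C

print(f"2025 vs 2024 revenue:   {revenue_growth[0]:.2f}x to {revenue_growth[1]:.2f}x")
print(f"Valuation vs Series B:  {valuation_growth[0]:.2f}x to {valuation_growth[1]:.2f}x")
print(f"Projection vs 2025:     {projection_growth[0]:.2f}x to {projection_growth[1]:.2f}x")
print(f"Raised before Series C: ${raised_before_series_c:.0f}M")
```

Running it prints revenue multiples of 3.75x to 5.00x, valuation multiples of 2.70x to 3.00x (consistent with a roughly threefold increase), a projected multiple of 1.75x to 2.33x over 2025, and about $42 million raised before the Series C.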

The Economics and Geopolitics of Decentralization

The movement of AI inference from the cloud to the device is not merely a technical preference; it is a response to mounting economic and geopolitical pressures. Centralized cloud computing is well suited to the computationally intensive work of training AI models, but it becomes very expensive when serving billions of daily inference queries across a global user base. Every query consumes bandwidth, compute time, and energy, and at that scale the result is high operating expenditure (OPEX) for businesses.

Relying solely on hyperscale data centers, many of which are geographically concentrated and controlled by a handful of global providers, also adds network round-trip latency that is unacceptable for mission-critical applications such as autonomous driving, factory automation, and real-time medical imaging. These systems must make decisions within milliseconds, a deadline that a cloud connection cannot reliably guarantee.

This operational urgency converges with an emerging global policy trend: the push for "Sovereign AI." Policymakers and industry groups, particularly in rapidly developing economies, are prioritizing the establishment of domestic AI capabilities spanning compute infrastructure, model development, and data stewardship, thereby mitigating reliance on foreign, often U.S.-based, infrastructure.

The World Economic Forum has noted this shift toward distributed AI architectures, in which inference compute moves closer to the end user. Similarly, analyses by firms such as EY point to the traction the sovereign AI approach has gained, driven by the difficulty and expense of building and operating hyperscale data centers in many regions. Distributed AI, running on local infrastructure such as AI-capable laptops or small on-premises servers, offers a pragmatic and cost-effective alternative to sending every operation to distant cloud services.

Quadric is actively pursuing this demand, exploring opportunities in markets such as India and Malaysia. Its significant development office in Pune, India, and its work with strategic investors such as Moglix CEO Rahul Garg to refine its sovereign AI approach underscore its commitment to these fast-growing, locally focused markets.

Programmable IP: The Competitive Differentiator

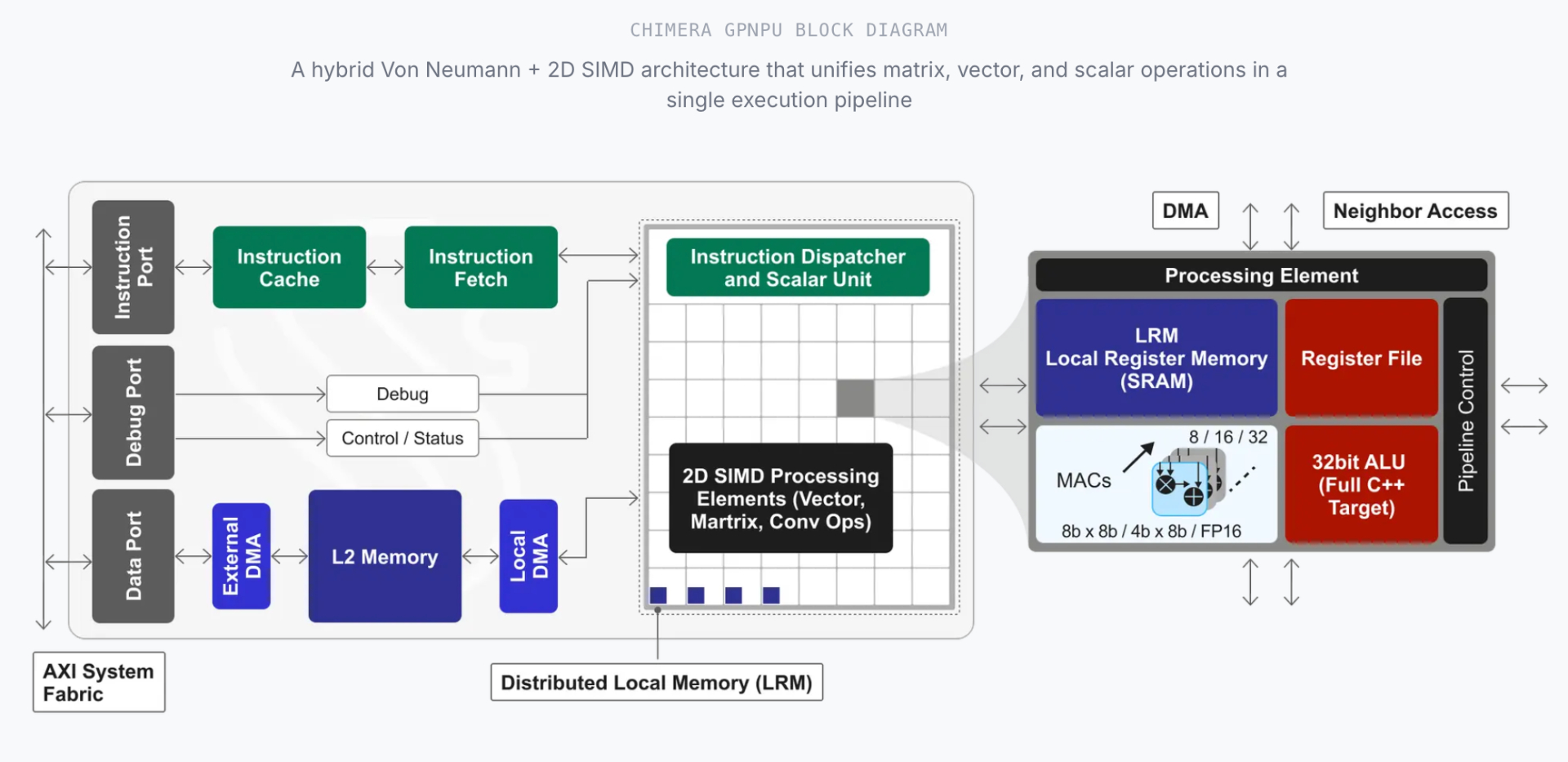

Quadric does not manufacture silicon. Its core offering is a license to highly programmable AI processor IP, a digital blueprint that customers integrate directly into their own custom chips. The IP comes with a complete software stack and toolchain designed to run diverse AI models, including advanced vision and voice applications, efficiently on the device.

CEO Veerbhan Kheterpal draws a parallel with Nvidia’s CUDA platform: he aims to build an infrastructure for on-device AI that is as pervasive and capable as CUDA is in the data center. The critical distinction lies in the business model, since Quadric licenses IP rather than selling chips, and in the flexibility of its architecture.

The semiconductor industry faces a fundamental dilemma: AI model architectures are evolving at a breakneck pace, sometimes shifting fundamentally in a matter of months, while the hardware design cycle—from concept to mass production—often takes years. A fixed-function hardware accelerator designed for today’s models risks becoming obsolete before the chip even reaches market volume.

This is where Quadric argues its programmable approach gives it a decisive advantage. Integrated chip vendors such as Qualcomm tightly bind their AI technology to their own processors, while traditional IP providers such as Synopsys and Cadence typically offer fixed neural processing engine blocks that can be difficult for customers to program and update. Quadric, by contrast, emphasizes software flexibility.

By licensing programmable processor IP, customers can adapt to new and unforeseen AI models through software and firmware updates rather than expensive, time-consuming hardware redesigns. The rapid transition from convolutional neural networks (CNNs) used for vision to today’s resource-intensive transformer architectures is exactly this kind of change. This programmability reduces the risk of hardware obsolescence in a fast-moving AI ecosystem.
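The contrast between fixed-function and programmable hardware can be illustrated with a deliberately simplified toy model. In the hypothetical Python sketch below, a "model" is reduced to the list of operator names it needs; `FixedFunctionAccelerator`, `ProgrammableProcessor`, and the operator lists are invented for illustration and do not represent Quadric's actual IP, toolchain, or API, or any competitor's product.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Set

# Hypothetical operator lists for two model families (illustrative, not exhaustive).
CNN_OPS: List[str] = ["conv2d", "relu", "maxpool", "dense"]
TRANSFORMER_OPS: List[str] = ["matmul", "softmax", "layernorm", "gelu", "dense"]


@dataclass(frozen=True)
class FixedFunctionAccelerator:
    """Toy fixed-function block: its operator set is fixed when the chip is designed."""
    supported_ops: FrozenSet[str]

    def can_run(self, model_ops: List[str]) -> bool:
        # Every operator the model needs must already be hardwired into the block.
        return all(op in self.supported_ops for op in model_ops)


@dataclass
class ProgrammableProcessor:
    """Toy programmable core: supported operators are defined in software."""
    software_kernels: Set[str] = field(default_factory=set)

    def update_software(self, new_ops: List[str]) -> None:
        # Stand-in for shipping newly compiled kernels via a software/firmware update.
        self.software_kernels.update(new_ops)

    def can_run(self, model_ops: List[str]) -> bool:
        return all(op in self.software_kernels for op in model_ops)


# Both designs ship with support for the CNN operators only.
fixed = FixedFunctionAccelerator(frozenset(CNN_OPS))
programmable = ProgrammableProcessor(set(CNN_OPS))

print(fixed.can_run(CNN_OPS), programmable.can_run(CNN_OPS))                  # True True
print(fixed.can_run(TRANSFORMER_OPS), programmable.can_run(TRANSFORMER_OPS))  # False False

# A transformer model arrives after the chips ship: only the programmable core can be updated.
programmable.update_software(TRANSFORMER_OPS)
print(programmable.can_run(TRANSFORMER_OPS))                                  # True
```

Real systems are less binary than this toy: fixed-function NPUs can often fall back to a host CPU for unsupported operators at a performance cost, and the sketch says nothing about throughput or power.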

Scaling Across the Edge: From Vehicles to AI PCs

Quadric’s initial focus on the automotive sector provided a rigorous proving ground. Applications like advanced driver assistance systems (ADAS) demand extreme efficiency and deterministic, low-latency performance—qualities that translate exceptionally well across other demanding industrial and consumer verticals.

The company’s current customer base reflects this diversification: it includes Kyocera for industrial printers, Japanese automotive supplier Denso (which produces chips for Toyota vehicles), and companies in the emerging market for AI laptops. The commercialization timeline is tight: the first products incorporating Quadric’s technology are expected to ship this year, starting with consumer AI laptops.

The expansion into the AI PC market is particularly significant. As operating system vendors and application developers build generative AI features into their software, demand grows for efficient neural processing units (NPUs) or flexible AI accelerators embedded directly in the laptop’s system-on-chip (SoC). Quadric is positioning its IP to capture this foundational layer of compute in the next generation of personal devices.

Industry Implications and Future Outlook

The rise of flexible AI IP vendors such as Quadric points to a broader shift in the semiconductor supply chain. Chip design has historically relied on stable, long-lived architectural standards. The volatility of modern generative AI models is pushing the industry toward software-defined silicon, in which support for new workloads arrives through software rather than new hardware.

For incumbent IP vendors, Quadric’s success directly challenges fixed-function acceleration blocks, as customers grow wary of hardwired solutions that cannot adapt to the next model iteration. This trend suggests that competitive advantage in AI IP will depend not only on raw computational throughput but also on the completeness of the software toolchain and how easily developers can map new, complex models onto the underlying hardware.

For major chip manufacturers, the availability of specialized, programmable third-party AI IP provides a strategic option: rather than dedicating massive internal resources to constantly redesign proprietary AI engines, they can license proven, flexible IP, allowing them to focus their resources on core CPU/GPU development and fabrication.

Despite its strong financial growth and strategic positioning, however, Quadric remains early in its long-term buildout. An IP licensing business generates revenue in two phases: upfront licensing deals, the source of the $15 million to $20 million in 2025 licensing revenue, which fund operations and demonstrate design wins, followed by the crucial transition to high-volume, recurring royalties on shipped units.
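The two phases can be summarized with a simplified, generic revenue identity. This is an illustrative assumption, not Quadric's disclosed contract terms: it assumes a flat per-unit royalty for each customer, whereas real agreements may instead use a percentage of chip price, volume tiers, or other structures.

```latex
R(t) = L(t) + \sum_{c \in C} \rho_c \, N_c(t)
```

Here R(t) is total revenue in period t, L(t) the license fees recognized in that period, C the set of licensees, \rho_c the per-unit royalty owed by customer c, and N_c(t) the number of units containing the IP that customer c ships in period t. Before licensees reach production, N_c(t) is close to zero and revenue is essentially L(t); the royalty term grows only as design wins turn into shipped chips.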

The company’s long-term upside depends heavily on its small group of signed customers, including significant players such as Denso, turning their licensed blueprints into millions of physical chips in cars, printers, and laptops. Converting design wins into mass-market deployment is the defining execution challenge Quadric faces in the coming years.

If the market continues to decentralize, driven by the twin forces of cloud cost reduction and the push for sovereign technological capabilities, Quadric’s programmable IP model is well positioned to become a foundational layer of global distributed AI compute. By providing a customizable, software-updatable "blueprint" for AI silicon, the company is not just riding the market shift; it is supplying infrastructure for the next generation of intelligent devices, setting the stage for a high-stakes contest for control of the AI edge.